What Are Log Files?

A log file is a document that records every request made to your server, along with details about how people and search engines interact with your site.

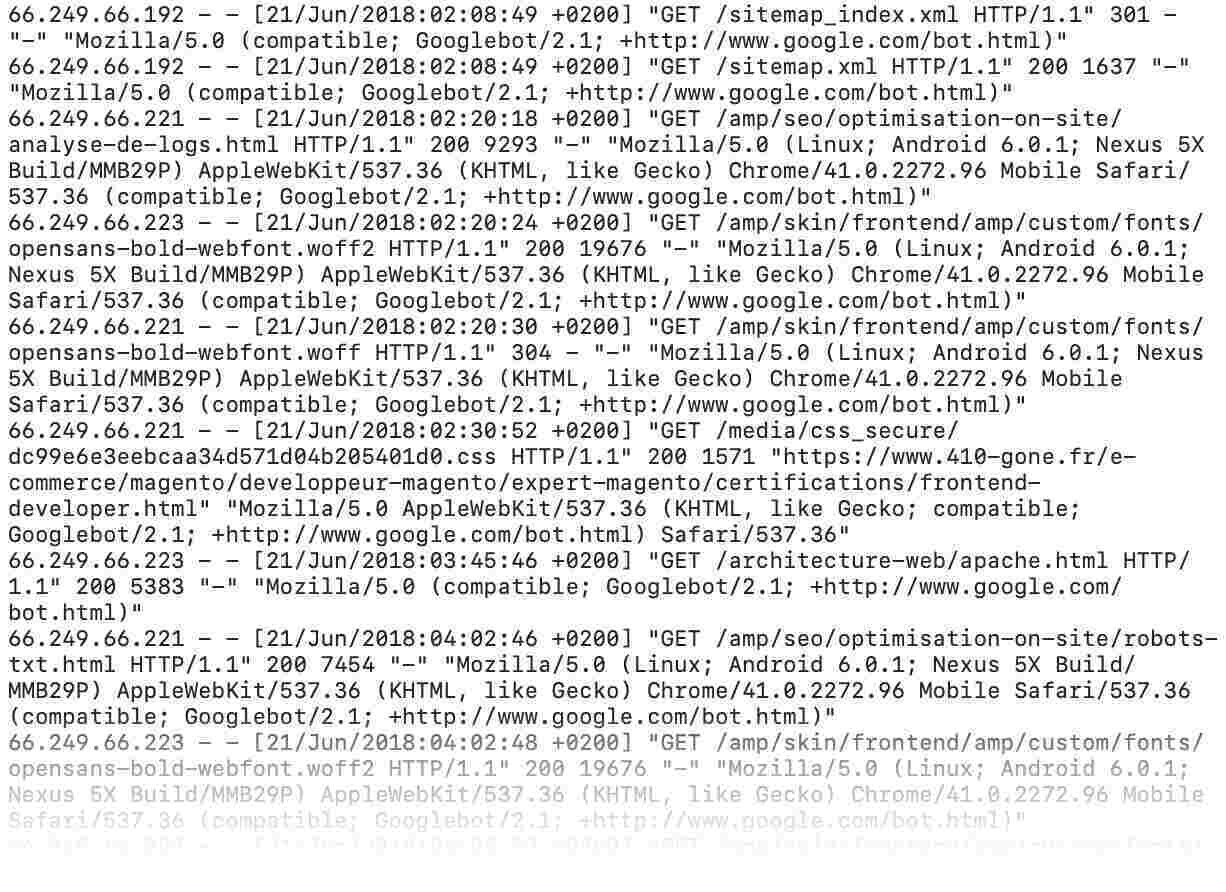

Here’s what a log file looks like:

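As you can see, log files contain a wealth of information: typically the IP address behind each request, a timestamp, the requested URL, the HTTP status code returned, and the user agent (such as Googlebot). So, it’s important to understand them and how to use that information.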
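As an illustration, here’s a minimal Python sketch that splits one line in the widely used Apache/Nginx "combined" log format into named fields. The sample line and the `parse_line` name are made up for this example, and your server may use a different format (IIS, for instance, defaults to W3C extended logs).

```python
import re
from datetime import datetime
from typing import Optional

# Matches the Apache/Nginx "combined" format; an assumption, since
# servers can be configured to log other fields or orders.
LOG_PATTERN = re.compile(
    r'(?P<ip>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] '
    r'"(?P<method>\S+) (?P<path>\S+) (?P<protocol>[^"]+)" '
    r'(?P<status>\d{3}) (?P<size>\S+) '
    r'"(?P<referrer>[^"]*)" "(?P<user_agent>[^"]*)"'
)

def parse_line(line: str) -> Optional[dict]:
    """Split one combined-format log line into named fields."""
    match = LOG_PATTERN.match(line)
    if match is None:
        return None  # malformed line or a different log format
    hit = match.groupdict()
    hit["time"] = datetime.strptime(hit["time"], "%d/%b/%Y:%H:%M:%S %z")
    hit["status"] = int(hit["status"])
    # "-" means no response body size was logged
    hit["size"] = None if hit["size"] == "-" else int(hit["size"])
    return hit

# Hypothetical sample line
sample = ('66.249.66.1 - - [10/Oct/2023:13:55:36 +0000] '
          '"GET /blog/ HTTP/1.1" 200 5120 "-" '
          '"Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"')
hit = parse_line(sample)
print(hit["path"], hit["status"])  # prints: /blog/ 200
```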

In this guide, we’ll look at:

- What log file analysis is

- What log file analysis is used for in SEO

- How to do a log file analysis

- How to make sure crawlability is a priority

Tip: Create a free Semrush account (no credit card needed) to follow along.

What Is Log File Analysis?

Log file analysis is the process of downloading and auditing your site’s log file to proactively identify bugs, crawling issues, and other technical SEO problems.

Your site’s log file is stored on your server. And it records every request your server receives from people, search engines, and other bots.

By analyzing these logs, you can see how Google and other search engines interact with your site. And identify and fix any issues that might affect your site’s performance and visibility in search results.
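Because anyone can put "Googlebot" in a user-agent string, it’s worth confirming that hits really come from Google before drawing conclusions. Google’s documented approach is a reverse DNS lookup on the IP, a check that the hostname belongs to Google, and a forward lookup confirming the hostname resolves back to the same IP. Here’s a minimal sketch of that check; the function names are hypothetical, the two domain suffixes are an assumption based on Google’s published guidance (check its current docs), and the forward lookup covers IPv4 only. The lookups are passed in as parameters so the logic can be tested without network access.

```python
import socket
from typing import Callable, List

# Assumed Googlebot reverse-DNS suffixes; verify against Google's docs.
GOOGLE_SUFFIXES = (".googlebot.com", ".google.com")

def _reverse(ip: str) -> str:
    return socket.gethostbyaddr(ip)[0]

def _forward(host: str) -> List[str]:
    return socket.gethostbyname_ex(host)[2]  # IPv4 addresses only

def is_verified_googlebot(ip: str,
                          reverse_lookup: Callable[[str], str] = _reverse,
                          forward_lookup: Callable[[str], List[str]] = _forward) -> bool:
    """Confirm an IP belongs to Google using reverse + forward DNS."""
    try:
        host = reverse_lookup(ip)  # e.g. crawl-66-249-66-1.googlebot.com
    except OSError:
        return False  # no PTR record for this IP
    if not host.endswith(GOOGLE_SUFFIXES):
        return False  # hostname is outside Google's domains
    try:
        # The hostname must resolve back to the original IP.
        return ip in forward_lookup(host)
    except OSError:
        return False
```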

What Is Log File Analysis Used for in SEO?

Log file analysis is a game-changer for improving your technical SEO.

Why?

Because it shows you how Google crawls your site. And when you know how Google crawls your site, you can optimize it for better organic performance.

For example, log file analysis can help you:

- See how often Google crawls your site (and its most important pages)

- Identify the pages Google crawls the most

- Monitor spikes and drops in crawl frequency

- Measure how fast your server responds to Google (if your logs record response times)

- Check the HTTP status codes for every page on your site

- Discover if you have any crawl issues or redirects
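To make "spikes and drops in crawl frequency" concrete, here’s a minimal sketch that counts Googlebot hits per day and flags days more than `threshold` standard deviations from the average. The function name, the 2.0 default, and the input (one date per Googlebot hit) are assumptions for illustration, not how any particular tool defines a spike.

```python
from collections import Counter
from datetime import date, timedelta
from statistics import mean, pstdev
from typing import Dict, Iterable

def flag_crawl_anomalies(hit_dates: Iterable[date],
                         threshold: float = 2.0) -> Dict[date, int]:
    """Return {day: hits} for days with unusually high or low hit counts."""
    daily = Counter(hit_dates)
    if not daily:
        return {}
    # Fill in days with zero hits so a complete drop-off still shows up.
    start, end = min(daily), max(daily)
    counts = {start + timedelta(days=i): 0 for i in range((end - start).days + 1)}
    counts.update(daily)
    values = list(counts.values())
    avg, spread = mean(values), pstdev(values)
    if spread == 0:
        return {}  # perfectly flat crawl rate: nothing to flag
    return {day: n for day, n in counts.items()
            if abs(n - avg) > threshold * spread}
```

A z-score like this is a deliberately simple baseline; with strongly seasonal traffic you may prefer comparing each day against the same weekday in previous weeks.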

In short: Log file analysis gives you data you can use to improve your site’s SEO.

How to Analyze Log Files

Now that we've taken a look at some of the benefits of log file analysis in SEO, let's look at how to do it.

You’ll need:

- Your website's server log file

- Access to a log file analyzer

Note: We’ll be showing you how to do a log file analysis using Semrush’s Log File Analyzer.

Access Log Files

First, you need to obtain a copy of your site’s log file.

Log files are stored on your web server. And you'll need access to it to download a copy. The most common way of accessing the server is through a file transfer protocol (FTP) client like FileZilla.

You can download FileZilla for free from its website.

You’ll need to set up a new connection to your server in the FTP client and authorize it by entering your login credentials.

Once you've connected, you’ll need to find the server log file. Where it’s located will depend on the server type.

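Here are the default log locations for three of the most common web servers (exact paths vary by operating system and configuration):

- Apache: /var/log/apache2/access.log (Debian/Ubuntu) or /var/log/httpd/access_log (RHEL/CentOS)

- Nginx: /var/log/nginx/access.log on most Linux distributions, or logs/access.log relative to the Nginx installation directory

- IIS: %SystemDrive%\inetpub\logs\LogFiles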
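If you’d rather script the download than click through FileZilla, Python’s standard-library `ftplib` can fetch a file over plain FTP. This is a minimal sketch: `download_log` is a hypothetical helper, and the host, credentials, and paths are placeholders. Plain FTP sends your password unencrypted, so use FTPS (`ftplib.FTP_TLS`) where your host supports it; SFTP needs a third-party library.

```python
import ftplib
from pathlib import Path

def download_log(host: str, user: str, password: str,
                 remote_path: str, local_path: str) -> Path:
    """Fetch one log file over plain FTP and save it locally."""
    destination = Path(local_path)
    with ftplib.FTP(host) as ftp:  # connects on port 21 by default
        ftp.login(user=user, passwd=password)
        with destination.open("wb") as fh:
            # RETR streams the file in binary chunks; each chunk is written to disk.
            ftp.retrbinary(f"RETR {remote_path}", fh.write)
    return destination

# Hypothetical usage (placeholder host, credentials, and path):
# download_log("ftp.example.com", "user", "secret",
#              "/var/log/apache2/access.log", "access.log")
```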

But retrieving your site's log file isn't always so simple.

Common challenges include:

- Finding that log files have been disabled by a server admin and aren’t available

- Huge file sizes

- Log files that only store recent data (based either on a number of days or entries—also called “hits”)

- Partial data if you use multiple servers and content delivery networks (CDNs)
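Two of these challenges, huge files and logs split across several files, are easier to handle if you read logs as a stream instead of loading them whole. Here’s a minimal sketch, assuming each file is either plain text or gzip-compressed (a common rotation format, but not a universal one); the function names and file names are hypothetical.

```python
import gzip
from itertools import chain
from typing import Iterable, Iterator

def iter_log_lines(path: str) -> Iterator[str]:
    """Yield one line at a time, so even very large logs use little memory."""
    opener = gzip.open if path.endswith(".gz") else open
    # errors="replace" keeps going past the occasional badly encoded byte.
    with opener(path, "rt", encoding="utf-8", errors="replace") as fh:
        for line in fh:
            yield line.rstrip("\n")

def iter_all_logs(paths: Iterable[str]) -> Iterator[str]:
    """Chain several files (rotated logs, or one per server) into one stream."""
    return chain.from_iterable(iter_log_lines(p) for p in paths)

# Hypothetical usage:
# for line in iter_all_logs(["access.log", "access.log.1.gz"]):
#     ...
```

Chaining files only helps once you have them all; it can’t recover hits that a CDN served without ever reaching your server.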

That said, you can easily solve most issues by working with a developer or server admin.

And if you don't have server access, you’ll need to ask your developer or IT team to share a copy anyway.

Analyze Log Files

Now that you have your log file, it’s time to analyze it.

You can analyze log files manually using Google Sheets and other tools. But it’s tiresome. And it can get messy. Quickly.

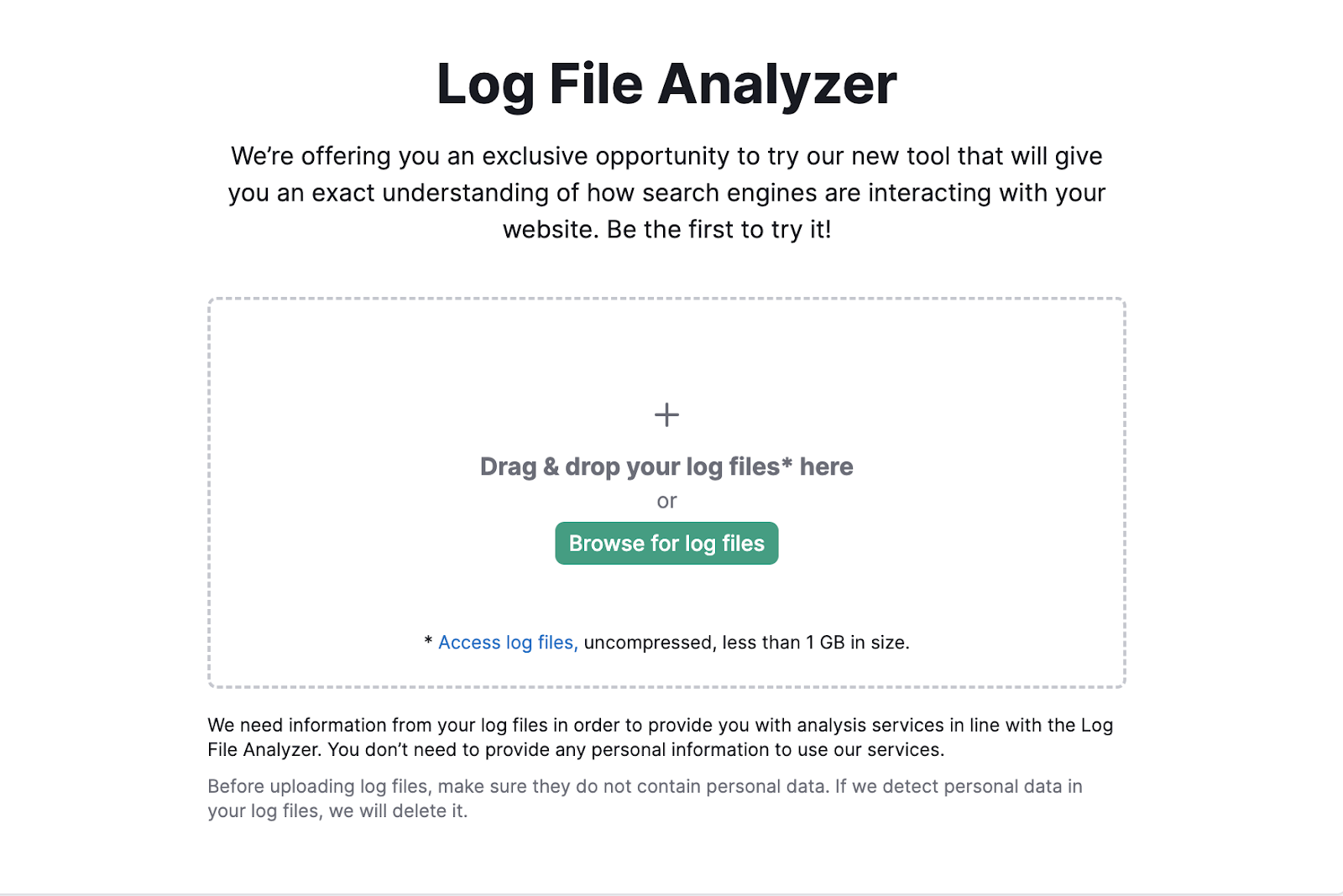

We recommend using our Log File Analyzer.

First, make sure your log file is unarchived and in the access.log, W3C, or Kinsta file format.
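Rotated logs are often gzip-compressed (for example, `access.log.1.gz`). Here’s a minimal sketch for unpacking one before uploading it, assuming a gzip archive specifically; `gunzip_log` is a hypothetical name, and `.zip` archives would need the `zipfile` module instead.

```python
import gzip
import shutil

def gunzip_log(archive_path: str, output_path: str) -> str:
    """Decompress a .gz log into a plain file."""
    with gzip.open(archive_path, "rb") as src, open(output_path, "wb") as dst:
        shutil.copyfileobj(src, dst)  # copies in chunks, so large archives are fine
    return output_path

# Hypothetical usage:
# gunzip_log("access.log.1.gz", "access.log")
```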

Then, drag and drop it into the tool and click “Start Log File Analyzer.”

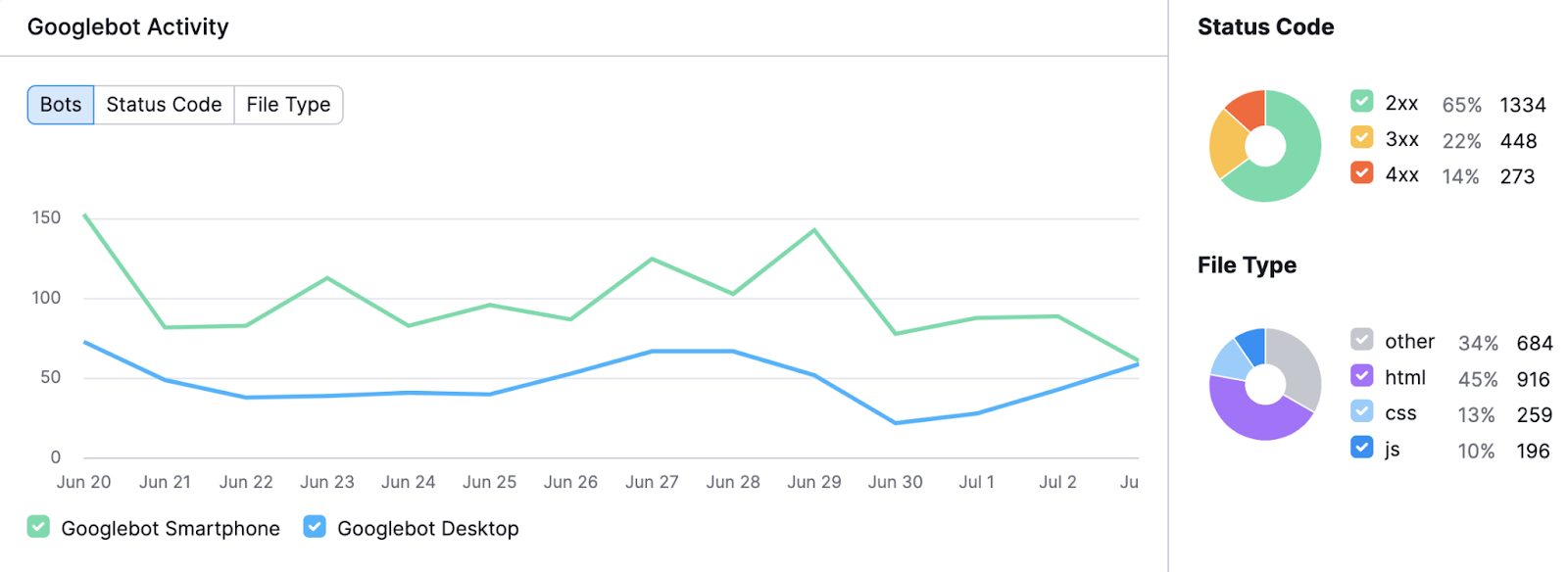

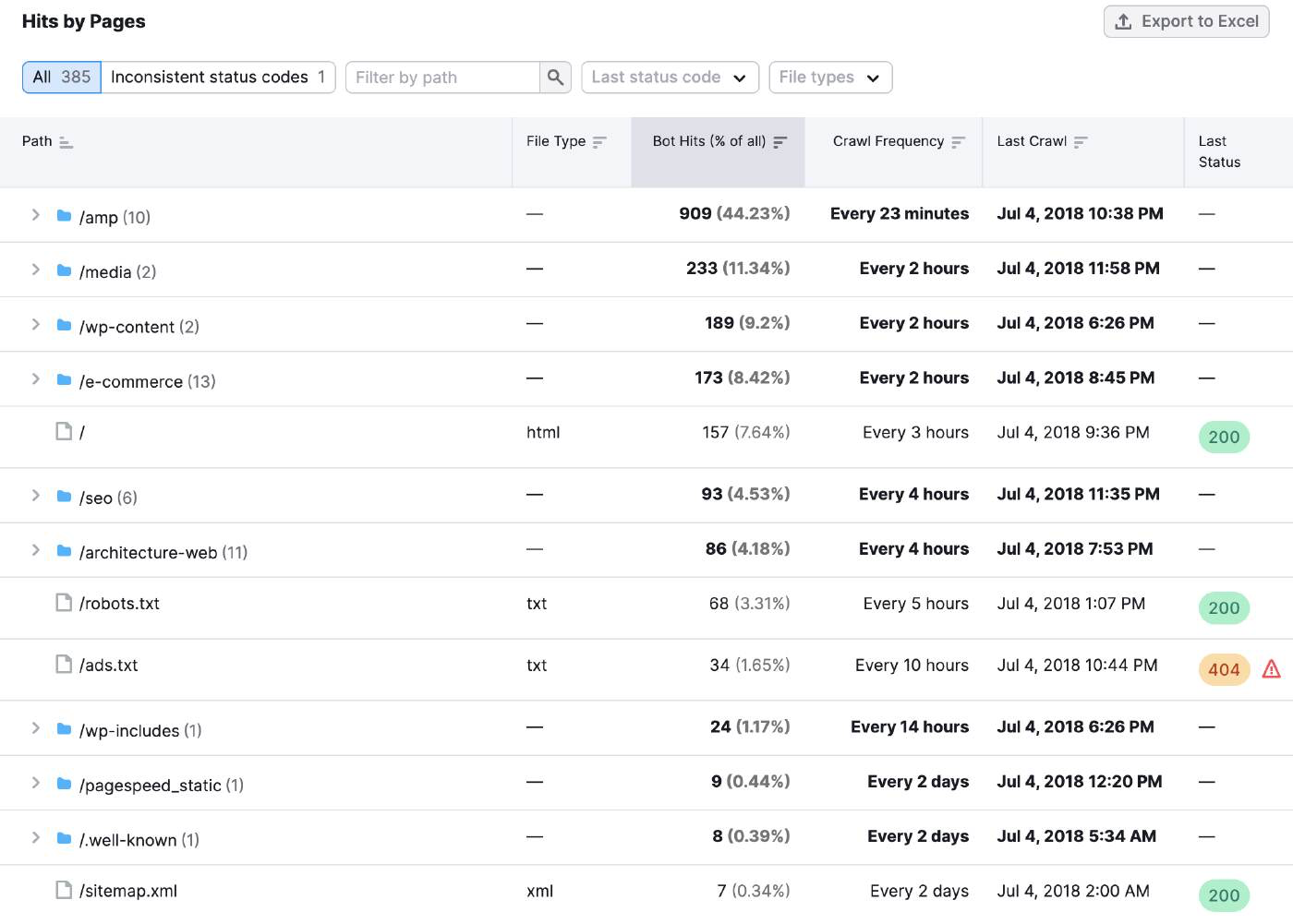

You’ll see a chart displaying Googlebot activity.

It shows daily hits, a breakdown of status codes, and the file types Googlebot requested.

You can use these insights to understand:

- How many requests Google is making to your site each day

- The breakdown of different HTTP status codes found per day

- A breakdown of the different file types crawled each day
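Under the hood, per-day numbers like these come down to simple counting. Here’s a minimal sketch, assuming each Googlebot hit has already been parsed into a dict with `time` (a datetime), `status` (an int), and `path` keys (hypothetical field names), and treating "file type" as the URL’s extension. It illustrates the idea; it isn’t how the Log File Analyzer computes its chart.

```python
from collections import Counter, defaultdict
from pathlib import PurePosixPath
from typing import Dict, Iterable

def file_type(path: str) -> str:
    """Label a URL path by its extension, ignoring any query string."""
    suffix = PurePosixPath(path.split("?", 1)[0]).suffix.lower()
    return suffix.lstrip(".") or "no extension"

def daily_breakdown(hits: Iterable[dict]) -> Dict:
    """Per day: total hits, hits per status code, and hits per file type."""
    days = defaultdict(lambda: {"hits": 0, "status": Counter(), "file_types": Counter()})
    for hit in hits:
        day = days[hit["time"].date()]
        day["hits"] += 1
        day["status"][hit["status"]] += 1
        day["file_types"][file_type(hit["path"])] += 1
    return dict(sorted(days.items()))  # oldest day first
```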

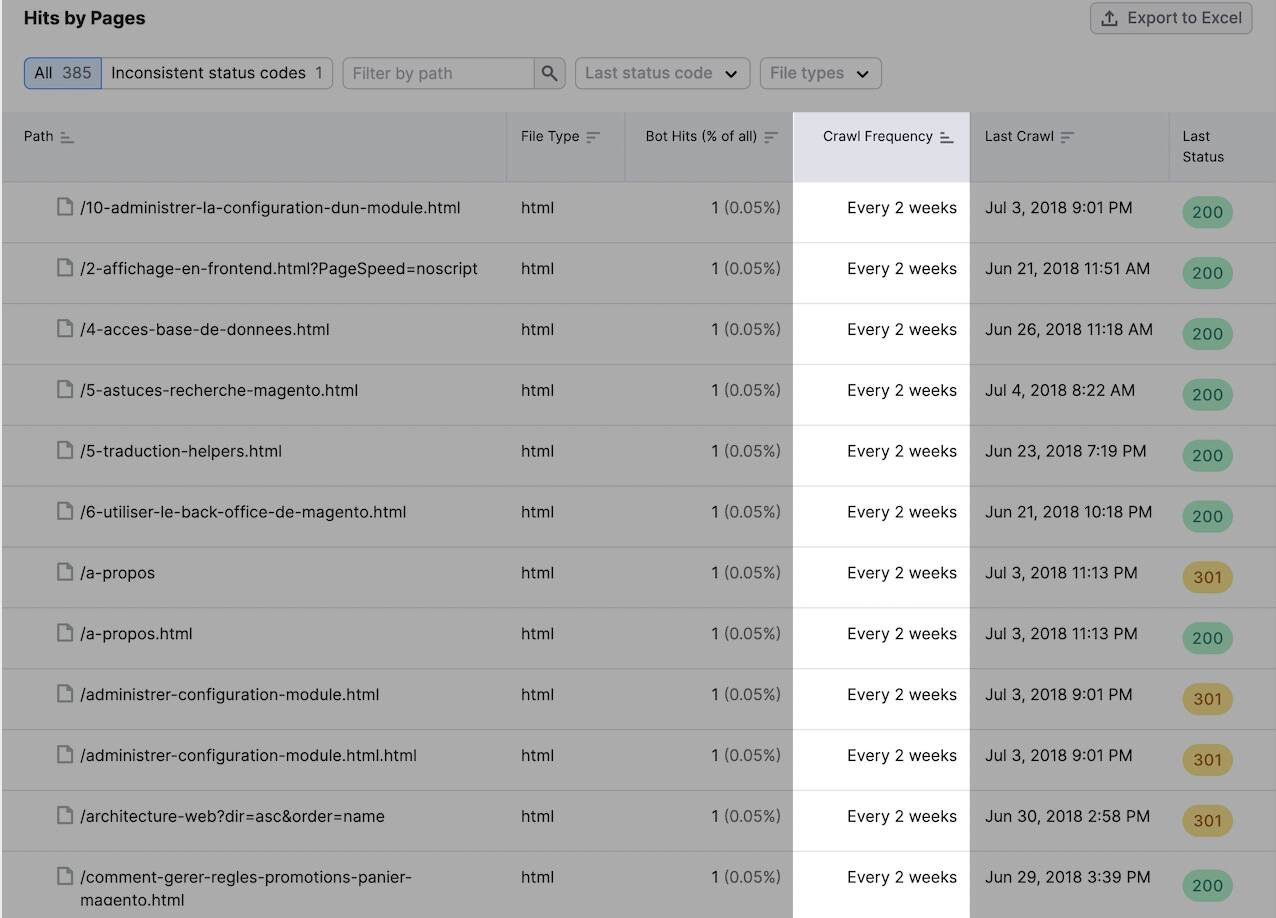

If you scroll down, you’ll see a table with insights for specific pages and folders.

You can sort by the “Crawl Frequency” column to see how Google is spending its crawl budget.
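The idea behind sorting by crawl frequency can be sketched in a few lines: count hits per URL path and list the most-requested ones. This assumes parsed hits with a `path` key (a hypothetical field name) and strips query strings so `/page?a=1` and `/page` count together, which is a design choice you may not want if parameters create distinct pages on your site.

```python
from collections import Counter
from typing import Iterable, List, Tuple

def crawl_frequency(hits: Iterable[dict], top_n: int = 10) -> List[Tuple[str, int]]:
    """Return the top_n most-crawled paths with their hit counts."""
    counts = Counter(hit["path"].split("?", 1)[0] for hit in hits)
    return counts.most_common(top_n)  # highest count first
```

If your most-crawled paths are low-value URLs (filters, old redirects), that’s a sign crawl budget is going to the wrong places.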

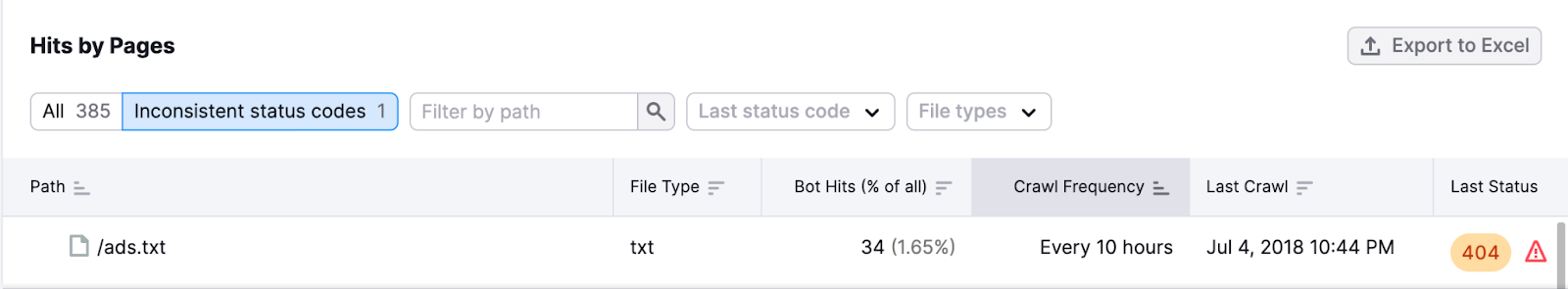

Or, click the “Inconsistent status codes” button to see paths that returned different status codes over time.

For example, a URL might switch between a 404 status code, indicating the page can’t be found, and a 301 status code, indicating a permanent redirect.
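Finding inconsistent status codes amounts to grouping hits by path and keeping paths that returned more than one code. Here’s a minimal sketch, assuming parsed hits with `path` and `status` keys (hypothetical field names); `inconsistent_status_paths` is an illustrative name, not a Semrush API.

```python
from collections import defaultdict
from typing import Dict, Iterable, Set

def inconsistent_status_paths(hits: Iterable[dict]) -> Dict[str, Set[int]]:
    """Map each path that returned more than one status code to those codes."""
    seen = defaultdict(set)
    for hit in hits:
        seen[hit["path"]].add(hit["status"])
    return {path: codes for path, codes in seen.items() if len(codes) > 1}
```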

Using the tool makes server log analysis simple and straightforward. So you can spend time optimizing your site, not analyzing data.

Ensure Crawlability Is a Priority

Now you know how to access and analyze your log file. But don’t stop there.

You need to take proactive steps to make sure your site is optimized for crawlability.

This means doing some advanced SEO and auditing your site to get even more data.

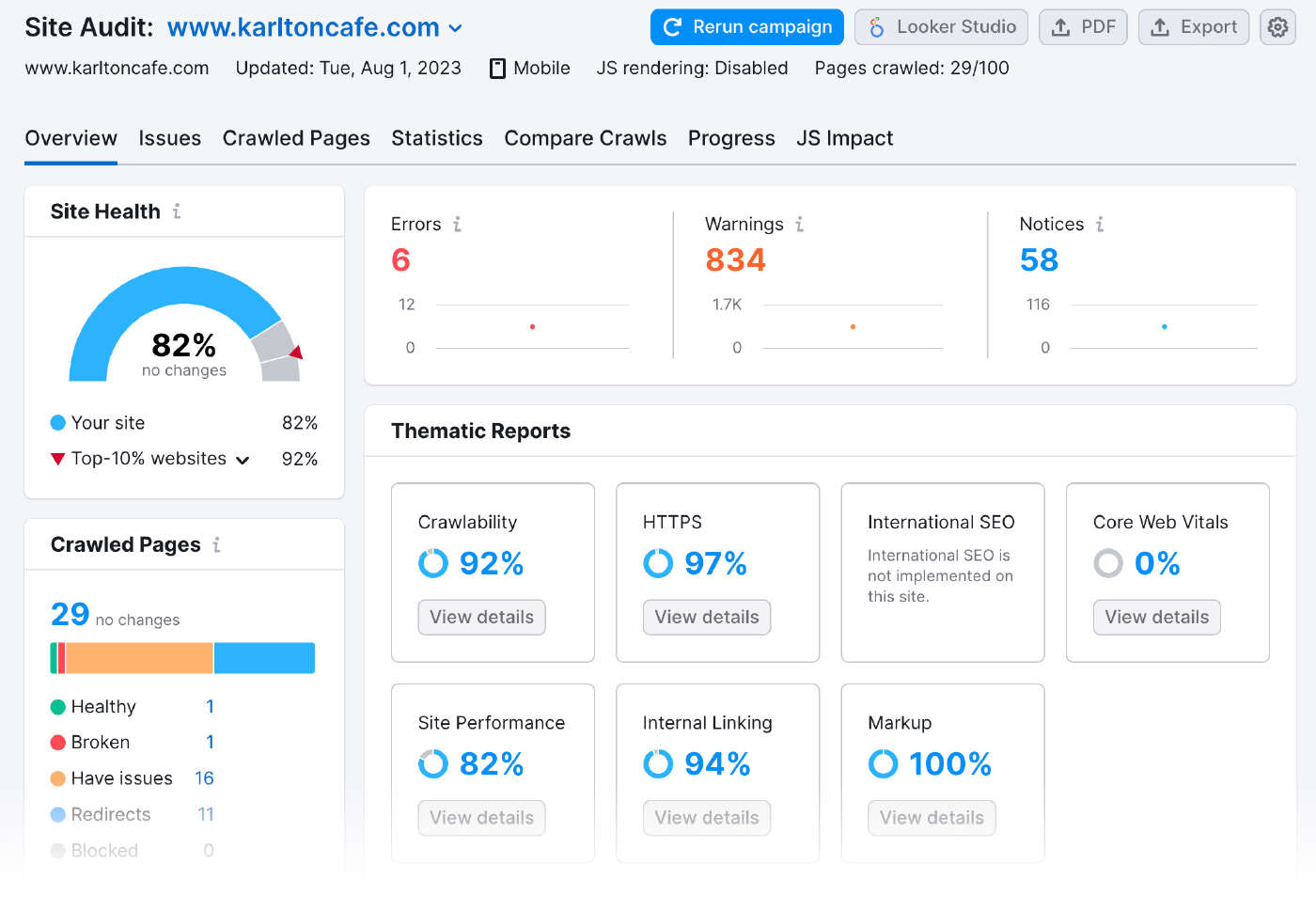

For example, you can run your site through Site Audit to see a dashboard with important recommendations like this one:

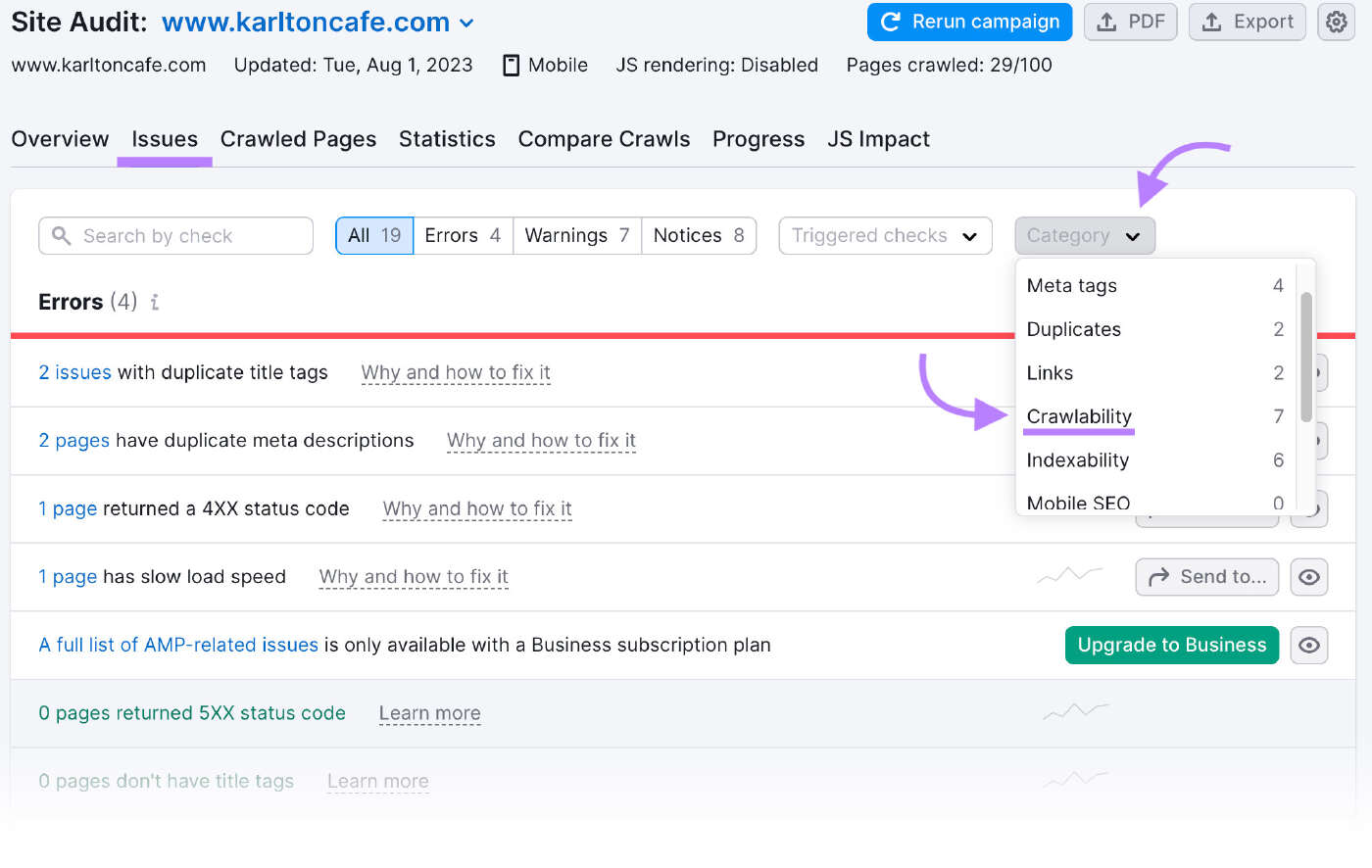

Head to the “Issues” tab and select “Crawlability” in the “Category” drop-down.

These are all the issues affecting your site’s crawlability.

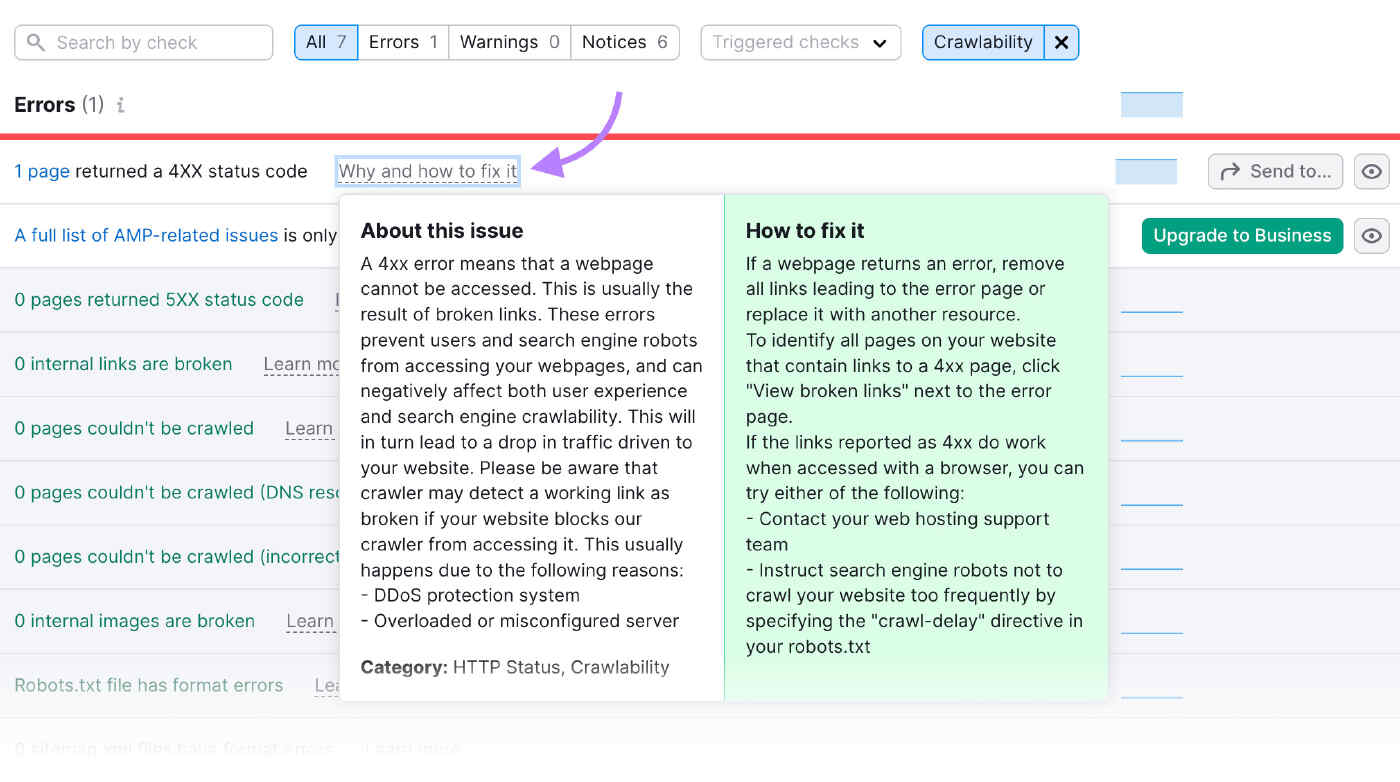

If you don’t know what an issue means or how to address it, click on “Why and how to fix it” to learn more.

Run an audit like this on a monthly basis. And iron out any issues that pop up.

You need to make sure Google and other search engines can crawl and index your webpages in order to rank them.
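One crawlability check you can also script is whether robots.txt blocks Googlebot from URLs you want crawled. Here’s a minimal sketch using Python’s standard-library `urllib.robotparser`; `blocked_for_googlebot` is a hypothetical helper, and the rules and URLs in the test are made up. Python’s parser applies rules in file order and doesn’t support Google’s `*` and `$` path wildcards, so treat its answers as an approximation of how Googlebot reads the file.

```python
from typing import Iterable, List
from urllib.robotparser import RobotFileParser

def blocked_for_googlebot(robots_txt: str, urls: Iterable[str],
                          agent: str = "Googlebot") -> List[str]:
    """Return the URLs that robots.txt disallows for the given user agent."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())  # parse text you've already fetched
    return [url for url in urls if not parser.can_fetch(agent, url)]

# Hypothetical usage:
# blocked_for_googlebot(open("robots.txt").read(),
#                       ["https://example.com/blog/", "https://example.com/private/x"])
```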