Websites need to be fast to stay competitive and to provide a good user experience. Speed helps maintain high pageviews per visitor, reduces bounce rate and shopping cart abandonment, and improves search engine ranking.

You might have read a lot of articles focusing on making a website faster, which WordPress plugins might help and which might not, and a lot of similar tips that will improve your site speed. You might have also achieved a 100/100 PageSpeed score on both mobile and desktop devices.

But the important question is, is the 100/100 PageSpeed score enough? Does it mean absolute maximum performance? Here is what Google's Gary Illyes recently said:

This article will explain why a 100 score with the PageSpeed tool might not be enough. If you are a performance enthusiast, you will find several interesting optimization techniques to apply to your websites. If you are not, the optimizations suggested below might still help you stay ahead of your competition in terms of website performance. Let’s start with an introduction to the PageSpeed tool.

What Is Google PageSpeed and How Does It Work?

Google PageSpeed Insights measures the performance of a webpage on mobile and desktop and provides actionable guidance on what can be done to improve the speed of the website. The tool then scores the website separately for mobile and desktop on a scale of 0 to 100, where 100 means that the criteria for performance scoring are well satisfied.

The criteria include first contentful paint, largest contentful paint, speed index, total blocking time, time to interactive, and cumulative layout shift.

There are a lot of articles everywhere that can help you learn more about how to best understand the working of the PageSpeed tool, and how to use the guidance provided by the tool and actually apply it to your website.

However, since you are here, I assume that you are already well past that point and ready to learn about the next steps you can take to make your website even faster.

Doesn’t the PageSpeed tool guide you to the absolute maximum performance?

No.

Do I still need to worry about performance?

Yes.

The PageSpeed tool is good, and following its advice generally makes your site faster, but a 100 score does not mean there is nothing left to do. In most cases, you still have room for big savings in page weight and page load time.

So, let’s have a look at the extra steps you can take once you have achieved a 100/100 score with the PageSpeed tool.

1. Use a Competitor’s Browser Cache

If you have a competitor that ranks a little higher than you for your keywords, chances are that users visit its website before they click through to yours, if they ever do. If a user has already been on that site, you can reuse what their browser cached there. Check the source code of the competitor’s site to see whether it loads anything from a public CDN, and if so, which libraries.

If you both use the same library(ies), for example, jQuery 3.2.1, you can load it from the same public CDN your competitor uses.
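The source code check described above can be automated. Here is a minimal Python sketch, assuming you have already saved the competitor's page source as a string. The list of CDN hosts and the function name are my own illustrative choices, not an exhaustive or official list.

```python
import re
from urllib.parse import urlparse

# Hypothetical shortlist of public CDN hosts; extend it to suit your needs.
PUBLIC_CDN_HOSTS = {
    "cdnjs.cloudflare.com",
    "cdn.jsdelivr.net",
    "unpkg.com",
    "code.jquery.com",
    "ajax.googleapis.com",
}

# Matches the URL inside src="..." or href="..." attributes.
ASSET_URL = re.compile(r'(?:src|href)\s*=\s*["\']([^"\']+)["\']', re.IGNORECASE)


def find_public_cdn_assets(html: str) -> list[str]:
    """Return asset URLs in the page source that point at a known public CDN."""
    found = []
    for url in ASSET_URL.findall(html):
        # Protocol-relative URLs (//host/path) need a scheme to parse cleanly.
        normalized = "https:" + url if url.startswith("//") else url
        host = urlparse(normalized).hostname
        if host in PUBLIC_CDN_HOSTS:
            found.append(normalized)
    return found
```

If a URL in the result matches a library and version that you also use, that is the candidate to load from the same CDN.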

As you might guess, this trick works very rarely and, in some cases, might result in a benefit for your competitor if the visitor visits your website first and then your competition afterward.

Although this trick is unlikely to work against one specific competitor, the general idea still holds. Loading all open-source resources from a public CDN lets you reuse copies already sitting in the browser cache from any third-party website the user visited in the past.

Some free WordPress plugins like Easy Speedup and CommonWP automatically link to open-source themes, plugins, and libraries hosted on a public CDN. Such plugins make it easy to reuse the browser cache of other websites at scale without much effort.

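This approach will work for some visitors and not for others. In particular, browsers that partition their HTTP cache by site, as Safari does and as Chrome and Firefox have also adopted, will not reuse a file cached on another website. Where it does work, it can reduce the page load time even for first-time visitors. The added benefit is that these open-source assets are delivered from the CDN without any bandwidth charges to you.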

2. Combine Files, But With Caution

Several performance testing tools might suggest that you combine multiple CSS and JavaScript files into one to improve speed. This used to be good advice long ago, but times have changed.

In a popular post on the V8 blog titled ‘The Cost of JavaScript in 2019’, Addy Osmani offers some guidance on improving the download time of JavaScript. He suggests keeping JavaScript bundles small, and if a bundle size exceeds 50-100 KB, splitting it into smaller bundles. He also notes a general rule of thumb: if a script is larger than 1 KB, avoid inlining it.

Overall, it is not worth the effort to bundle smaller files into one or more bundles because it no longer improves page loading speed. It can even do the contrary and hurt speed on mobile devices. This is because, with HTTP/2 multiplexing, multiple requests and responses can be in flight at the same time, which reduces the overhead of additional requests.

So, does your website code need any refactoring to do things the old way again? You might want to consider disabling plugins that enable this feature on WordPress sites. This will reduce plugin bloat and will speed up your site.

However, it doesn’t mean splitting the entire JavaScript codebase into many smaller files will not harm you. You will need to test your use case carefully.

3. Toggle Between Image Formats

Images make up a big portion of page weight. So, they deserve more consideration than the common image optimization techniques usually give them.

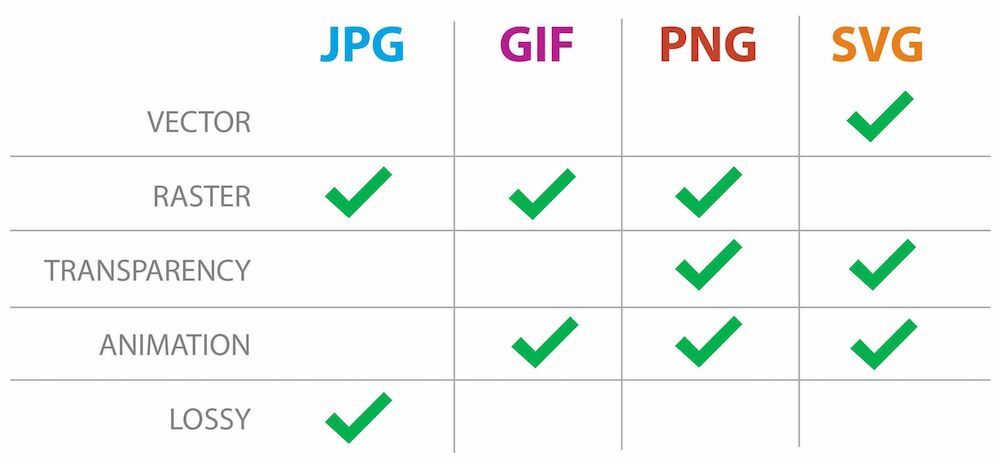

If your site uses images, and I am sure it does, you might need to learn about which image format suits your needs. Some image formats are more suited to vectors, while others work best with photographs.

The above chart may help you choose the right format for your image needs. JPEG supports lossy compression for photographs, while SVG is a text-based format that can be compressed on the fly and may, in most cases, result in a smaller file than PNG.

Another consideration is new image formats.

PageSpeed recommends, “Serve images in next-gen formats”. The only widely supported next-gen format, WebP, is supported by only 77% of browsers, with no support from Safari. Consequently, WebP is used by no more than 4% of the images on the web.

So, using WebP as the only image format is still a big NO. But the WebP format offers such a tremendous reduction in file size that it is a very attractive option for performance optimization. Most of the time, the best option is to serve each browser the format it supports.

This may sound complicated, but it isn’t. There are solutions that automate this on-the-fly image conversion. Once you are ready to use WebP at your site, you have multiple options:

- You can use the Easy Speedup WordPress plugin. It relies on a cloud-based image optimization and conversion service that works equally well for WordPress and non-WordPress websites and serves the most optimal image format that the browser supports. There is also a PHP library available to do this job automatically for non-WordPress websites without increasing storage usage. For one of my clients, I have seen it convert and optimize a PNG image to WebP and reduce its size by 95%. This doesn’t always happen, but it does sometimes. Your mileage may vary.

- For on-site optimization and conversion, WebP Express converts images to WebP and maintains multiple copies of every image on the server to deliver the right image to the right browser. The downside is that it bloats the file system, increases the backup size, and makes migration difficult. It also puts a load on the hosting, as converting and optimizing images is an expensive task.
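Whichever option you pick, serving by browser compatibility usually comes down to reading the `Accept` request header, where browsers that support WebP include `image/webp`. Below is a minimal Python sketch of that decision. The function name and return shape are my own, and I assume a JPEG original with an optional WebP copy.

```python
def choose_image_variant(accept_header: str, has_webp_copy: bool = True) -> dict:
    """Pick an image format from the request's Accept header.

    Returns the chosen file extension plus the response headers to send.
    """
    # Split "image/webp,image/apng,*/*;q=0.8" into bare media types.
    accepted = {part.split(";")[0].strip().lower() for part in accept_header.split(",")}
    use_webp = has_webp_copy and "image/webp" in accepted
    return {
        "extension": "webp" if use_webp else "jpg",
        "headers": {
            "Content-Type": "image/webp" if use_webp else "image/jpeg",
            # Tell caches and CDNs that the response depends on Accept.
            "Vary": "Accept",
        },
    }
```

The `Vary: Accept` header matters here. Without it, an intermediate cache could hand a WebP file to a browser that cannot display it.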

Converting images to WebP pays off. The saving is huge and, in most cases, the overall page size is reduced to half or even less.

Side note: During Apple's June 22, 2020, WWDC Keynote, they noted that they have added WebP image support for the first time in Safari.

There is even more.

Another important thing is to keep an eye on an exciting upcoming image format, JPEG XL. It is in the final stage of standardization and may soon be finalized and start to see some browser support. It is based on a combination of Google’s PIK and Cloudinary’s FUIF image format research. The JPEG XL format set the following two design requirements that make it the image format of the future for the web:

- High quality: visually lossless at reasonable bitrates;

- Decoding speed: multithreaded decoding should be able to reach around 400 Megapixel/s on large images.

JPEG XL is expected to reduce image size to 1/3 of the original size without loss of quality. It is going to be a universal format that supports both photographic and non-photographic images efficiently. So, those of you who want to make your websites even faster in the future might want to keep an eye on JPEG XL browser support. The advantage for early movers is significant.

4. Differentiate Dynamic vs. Static Compression

This might speed up your site big time with minimal effort and is probably the easiest of the techniques I am going to suggest. Let me explain.

Websites usually copy a snippet from the web into .htaccess or the NGINX config file to enable Gzip or Brotli compression for all compressible resources. This works but leaves room for more optimization. Webpages can save more than 50 KB extra just from better Brotli compression. Want to learn the trick? It is simple. Differentiate dynamic compression from static compression.

The compressible content your web server hosts can be divided into two types, dynamic and static. Dynamic content is generated on the fly and cannot be compressed in advance. HTML pages are an example of this. Static content, like CSS and JS, stays unchanged for some time.

You can pre-compress these static assets and configure your server to serve the pre-compressed files directly. Pre-compression allows you to use a higher compression level, like Brotli:11, that is usually too slow for on-the-fly compression. You can also use a third-party service like PageCDN to do this seamlessly for you if pre-compressing resources every time a file changes is difficult.
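To make the idea concrete, here is a minimal Python sketch of a pre-compression step you could run at deploy time. It uses gzip at its highest level because that ships with Python. A Brotli encoder at quality 11 would follow the same pattern but needs a third-party package. The list of file types and the function name are my assumptions.

```python
import gzip
from pathlib import Path

# Assumed set of compressible static file types; adjust for your site.
STATIC_SUFFIXES = {".css", ".js", ".svg", ".json"}


def precompress_static(root: str) -> list[Path]:
    """Write a maximum-level .gz copy next to every static text asset under root."""
    written = []
    # sorted() materializes the listing first, so new .gz files are not revisited.
    for path in sorted(Path(root).rglob("*")):
        if not path.is_file() or path.suffix not in STATIC_SUFFIXES:
            continue
        target = path.with_name(path.name + ".gz")
        # Level 9 is slow, but it runs once per file change, not once per request.
        # mtime=0 keeps the output byte-identical across repeated runs.
        target.write_bytes(gzip.compress(path.read_bytes(), compresslevel=9, mtime=0))
        written.append(target)
    return written
```

The server then needs to be told to prefer `style.css.gz` over compressing `style.css` on each request. In NGINX, for example, that is what the `gzip_static` directive is for.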

5. Reduce DNS Lookup Time

DNS lookup time is the time spent on resolving the domain name to its IP address. This IP address is then used to find the location of the server on the Internet.

When a user visits a page, the first high-latency task that browsers perform is the DNS lookup for the domain name. Unless a browser gets an IP address from a DNS query, it cannot proceed to connect to the server and make an HTTP request.

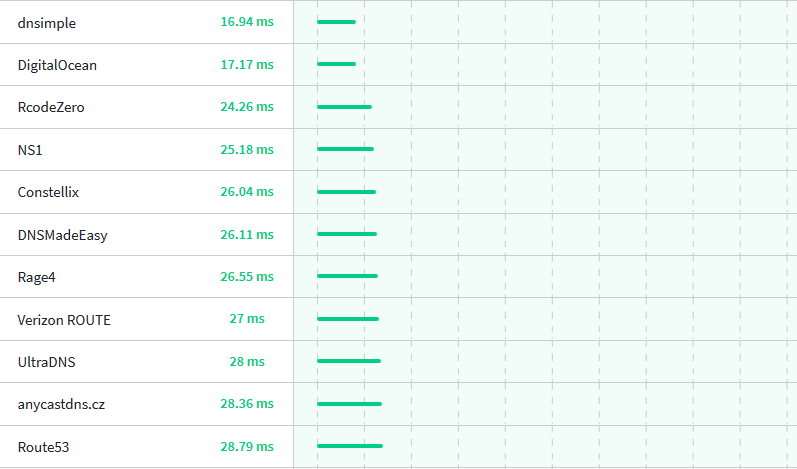

Ideally, reducing the DNS lookup time should be part of the Time-to-First-Byte optimization strategy, but it seems that the PageSpeed tool does not consider it because, in many cases, webmasters cannot optimize on this front. As a result, a huge number of webmasters do not bother about the performance of their DNS provider.

There are a few ways in which DNS lookup time can be reduced for individual users:

- By using a fast DNS provider. As listed in the above screenshot taken from the DNS benchmarking service DNSPerf.com, several services offer a global average latency of less than 30 ms, which is sufficient to make the DNS lookup process imperceptible.

- By caching DNS responses with a higher TTL. Recursive DNS resolvers can cache these responses close to end-users around the world, which can significantly reduce the DNS lookup time for all the users they serve.
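If you want a rough feel for lookup time from your own machine before and after such changes, a small timing helper is enough. This is a minimal sketch with names of my own choosing. Note that it measures your operating system's resolver path, including any local cache, and is not a substitute for a global benchmark like the one above.

```python
import socket
import time
from typing import Callable


def dns_lookup_ms(hostname: str, resolver: Callable = socket.getaddrinfo) -> float:
    """Return how long a single name resolution takes, in milliseconds."""
    start = time.perf_counter()
    resolver(hostname, 443)
    return (time.perf_counter() - start) * 1000.0


def median_lookup_ms(hostname: str, runs: int = 5, resolver: Callable = socket.getaddrinfo) -> float:
    """Median of several lookups; repeat runs mostly measure the resolver cache."""
    samples = sorted(dns_lookup_ms(hostname, resolver) for _ in range(runs))
    return samples[len(samples) // 2]
```

Calling `dns_lookup_ms("example.com")` once on a cold cache and then `median_lookup_ms("example.com")` gives you a quick view of how much caching with a longer TTL can hide. The `resolver` parameter exists only so that the function can be exercised without network access.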

6. Leverage Browser Caching for Fonts

You may achieve a score of 95 or 98 instead of 100 because of the Google fonts that a plugin or theme uses on your website, and you might think that there is not much you can do to improve the score on this front. In fact, there is more than one solution to this problem.

Google fonts CSS is served with just a 1-day expiry. This is a feature rather than a bug, as it allows the Google fonts team to propagate changes to end-users much faster. But the problem is that the PageSpeed tool expects you to use a 30-day cache for your static assets.

If you are interested in solving this browser cache issue, you first need to go through an excellent and detailed comparison of self-hosting vs. third-party hosting of fonts.

One solution is to use a tool that downloads fonts and CSS for you. You just need to select the families and your backward compatibility preference, and you get a downloadable file that you can extract into a directory inside your project. You then use its CSS in your HTML to serve the fonts. If you use WordPress, the OMGF plugin does all of this for you.

You can also use Easy Fonts, which is a Google fonts clone with some added CSS utility classes that make using fonts much easier and facilitate rapid prototyping. Luckily, this CDN-hosted library also fixes the browser caching issue.

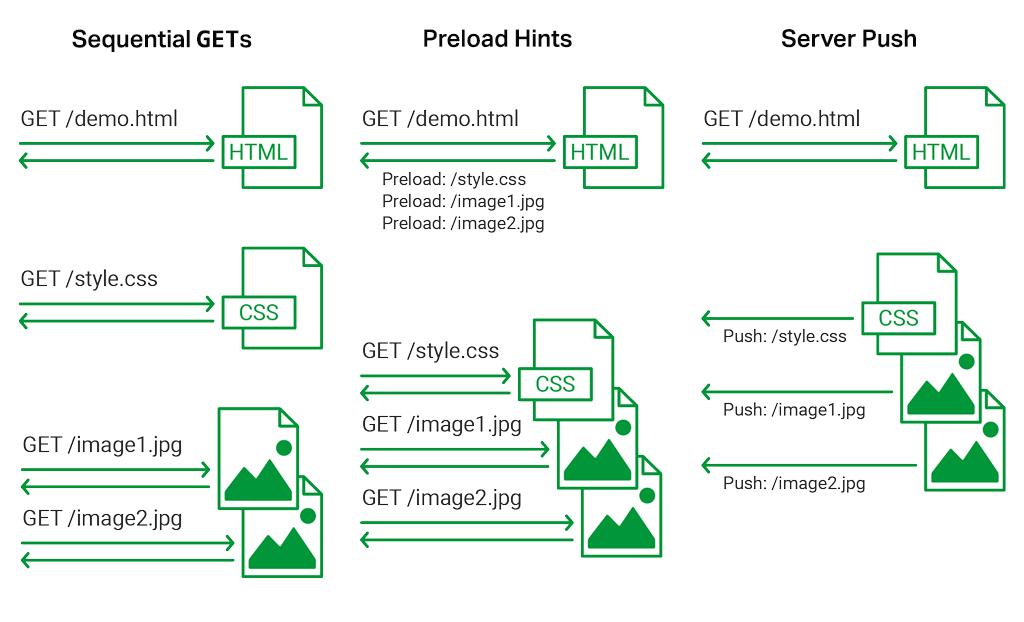

7. Use HTTP/2 Server Push

Server Push is a feature of HTTP/2 protocol that allows web servers to pre-emptively push files to browsers before they even request such files, in anticipation that browsers will soon need to request these files.

So, when a server receives a request for a file, it will send this file plus one or more additional files that it is configured to send with the file that triggers Server Push. This removes the round-trip time for subsequent requests and makes the resources immediately available to the browser.

Server Push is a big performance feature that you might want to try. However, one important thing to note is not to aggressively push files on every request. Browsers might already have a cached copy of a resource for you, and there must be room for the browser to use that cache. Aggressively pushing too many unwanted resources can waste a user’s bandwidth that might be on a metered connection.
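One common way to keep Server Push from being aggressive is to remember, in a cookie, that a visitor has already received the current version of your assets. Some servers can be configured to turn `Link: ...; rel=preload` response headers into pushes, so the application only has to decide when to send that header. The Python sketch below shows that decision. The cookie name, the 30-day lifetime, and the function name are hypothetical choices of mine.

```python
# Hypothetical cookie name used to remember that assets were already pushed.
PUSHED_COOKIE = "assets_pushed"


def push_headers(assets: dict[str, str], request_cookies: dict[str, str], version: str) -> dict[str, str]:
    """Return response headers that ask the server to push assets, once per visitor.

    assets maps a URL path to its type, e.g. {"/css/site.css": "style"}.
    version is any string that changes whenever the assets change.
    """
    # The visitor already received this asset version, so let the browser cache work.
    if request_cookies.get(PUSHED_COOKIE) == version:
        return {}
    link = ", ".join(f"<{path}>; rel=preload; as={kind}" for path, kind in assets.items())
    return {
        "Link": link,
        # Assumed lifetime of 30 days (2592000 seconds).
        "Set-Cookie": f"{PUSHED_COOKIE}={version}; Path=/; Max-Age=2592000",
    }
```

On a first visit this returns a `Link` header listing the assets. On later visits with the cookie present it returns nothing, so the browser uses its cached copies instead.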

If you want to try this, this article explains in detail how to set up Server Push for NGINX. This post on CSS-Tricks helps you make Server Push cache-aware. This blog post has a lot of recommendations and insights on using Server Push. For WordPress sites, the same Easy Speedup plugin offers this Server Push feature.

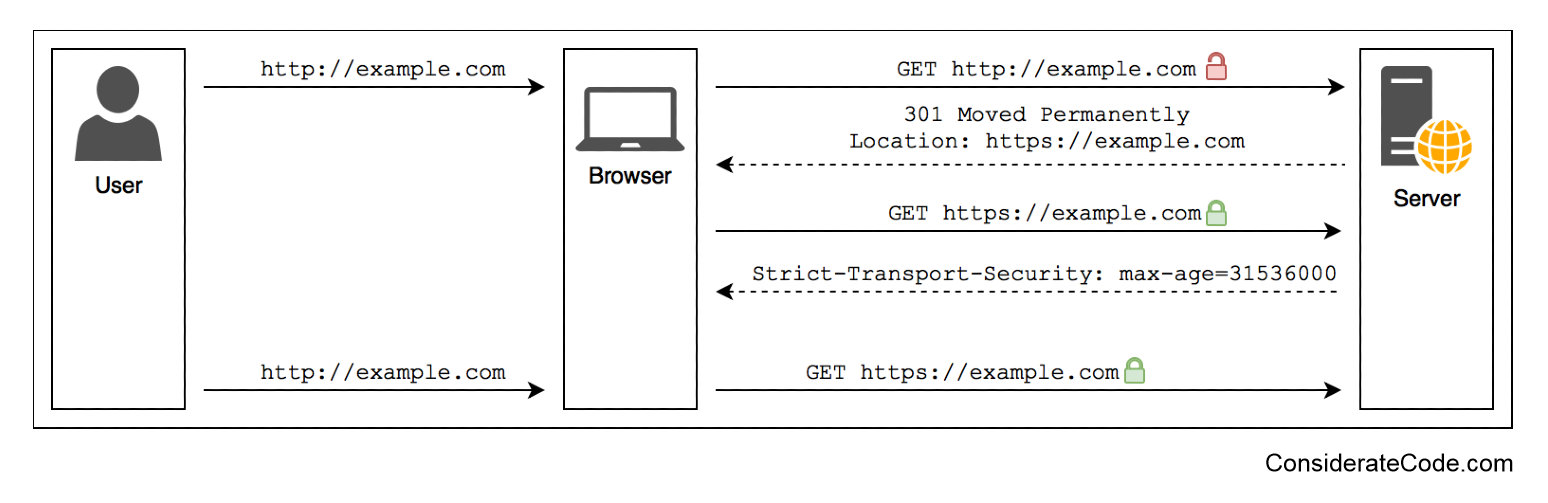

8. HSTS Preload Your Host

HSTS, or HTTP Strict Transport Security, is a way to enforce HTTPS to make sure intruders do not get a chance to alter a request or response if the user uses plain old HTTP to request a page.

HSTS Preload is a list maintained by the Google Chrome team of websites that enforce HTTPS for the entire domain and its sub-domains. This list of domains is used by most major browser vendors, including Chrome, Firefox, Opera, Safari, Edge, and IE. If a website is on this list of HSTS Preloaded sites, browsers always make a secure connection to it. Even if a user tries to open such a site over unencrypted HTTP, the browser will look up the website on the list and change the request to HTTPS.

This technique has several requirements, as mentioned on the HSTS Preload website, but offers improved security. However, security is not the only benefit. Having a website on this list saves users from an unnecessary redirect from HTTP to HTTPS, which matters if a website has many old HTTP backlinks. The security and performance benefits of HSTS justify its use, provided you can fulfill its requirements.
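The header part of those requirements is easy to check yourself. As I understand the rules at the time of writing, the `Strict-Transport-Security` header must carry a `max-age` of at least one year plus the `includeSubDomains` and `preload` directives. Please confirm the current rules on the HSTS Preload website. The Python sketch below checks only the header value, not the other requirements such as the HTTPS redirect, and its function name is my own.

```python
ONE_YEAR_SECONDS = 31536000


def meets_preload_requirements(header_value: str) -> bool:
    """Check a Strict-Transport-Security value against the preload header rules."""
    directives = [d.strip().lower() for d in header_value.split(";") if d.strip()]
    max_age = 0
    for directive in directives:
        name, _, value = directive.partition("=")
        if name.strip() == "max-age":
            value = value.strip().strip('"')
            max_age = int(value) if value.isdigit() else 0
    return (
        max_age >= ONE_YEAR_SECONDS
        and "includesubdomains" in directives
        and "preload" in directives
    )
```

A value such as `max-age=31536000; includeSubDomains; preload` passes this check, while the same value without `preload` does not.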

9. Use Immutable Caching

‘Cache-Control’ is an HTTP header that tells the browser how long a file can be stored in its cache. For user-specific dynamic content like HTML pages, this header is used to tell the browser never to cache the file. But for static content like images, CSS and JS, this header becomes the single most important source of bandwidth savings on repeat visits by the same user.

However, there is no single cache-control configuration that fits all static assets. Some static assets change a lot, while others do not change at all. For example, if a resource named jquery-3.4.1.min.js exists on your site and you are sure you will never edit this file, you need some way to tell the browser that this file never changes.

Fortunately, with the new ‘immutable’ directive, you now have a way to tell the browser exactly that. If a browser sees the ‘cache-control: immutable’ header set for an asset, it knows that the file never changes and that it should not try to validate the freshness of the file with a conditional ‘If-Modified-Since’ request.

This saves a roundtrip time and makes the cached asset available for use without revalidation. However, you need to make sure this header is not used with a file that mostly stays unchanged but may get a few edits once or twice a year because, in such a case, the edits may not reach some of the users without proper cache invalidation. The best fit for this header directive is open source libraries with version numbers added as part of the URL, user-uploaded images with unique id or name, and CSS and JS bundles that get a unique URL after every change.
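The rule above can be sketched as a small function that picks a header value from the file's URL. This is a minimal Python sketch under my own assumptions: the two naming patterns that count as "versioned", the one-year lifetime for immutable files, and the one-day fallback are illustrative and should be adapted to how your build names its files.

```python
import re

# Assumed naming patterns that mark a URL as versioned or fingerprinted:
# a semantic version (jquery-3.4.1.min.js) or a hex content hash (app.3f9a1c2b.js).
VERSIONED = re.compile(r"(\d+\.\d+\.\d+|[.-][0-9a-f]{8,}\.)")


def cache_control_for(path: str) -> str:
    """Return a Cache-Control value: immutable only for versioned URLs."""
    if VERSIONED.search(path):
        # The URL changes whenever the content changes, so revalidation is pointless.
        return "public, max-age=31536000, immutable"
    # Unversioned files may be edited in place, so keep their lifetime short.
    return "public, max-age=86400"
```

With this, `/js/jquery-3.4.1.min.js` gets the immutable policy, while `/css/style.css` keeps a short lifetime so that edits still reach users.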

10. Leverage Host Consolidation

Host Consolidation is an approach introduced by PageCDN to speed up the websites of their clients by removing classical and incidental domain sharding overhead. You can easily use this approach for your own website. With this approach, PageCDN simply leverages the multiplexing capabilities of the HTTP/2 protocol, but does it in an innovative way.

Host Consolidation is the opposite of domain sharding technique. It depends on HTTP/2 for parallel delivery of requested resources, and proxies all the external resources through a single host (or domain) to avoid unnecessary DNS lookups and connection cost. This saved connection and DNS lookup overhead can speed up websites for mobile and desktop users.

PageCDN automates the host consolidation and lists several ways it maximizes the use of this approach. We can achieve the same behavior on any website by using the same principle.

- Avoid too many open-source CDNs. If your site loads multiple open-source libraries from several different CDNs, try loading all of them from a single CDN.

- Download and self-host Google Fonts. For WordPress websites, the OMGF plugin can do the job for you in just a few clicks.

- Leave the old domain sharding technique behind and load all assets from a single host instead of creating shards for parallel content delivery. Let HTTP/2 do the parallelization for you.

- If your website uses static content from multiple hosts, consider proxying all the content through a single host.
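Before consolidating, it helps to know how many hosts a page actually talks to. Here is a minimal Python sketch that counts them from a list of asset URLs, which you might collect from your browser's network panel. The function names are my own.

```python
from collections import Counter
from urllib.parse import urlparse


def hosts_used(asset_urls: list[str], page_host: str) -> Counter:
    """Count requests per host; relative URLs are attributed to page_host."""
    return Counter(urlparse(url).hostname or page_host for url in asset_urls)


def extra_hosts(asset_urls: list[str], page_host: str) -> list[str]:
    """Hosts other than your own; each one costs a DNS lookup and a connection."""
    return sorted(host for host in hosts_used(asset_urls, page_host) if host != page_host)
```

Every entry returned by `extra_hosts` is a candidate for one of the steps in the list above: load the file from a single CDN, self-host it, or proxy it through your main host.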

11. Avoid CSS Sprites

‘CSS sprites’ is a technique that combines multiple images into one big image and uses CSS to display the appropriate pixels from it at different places on a webpage. This used to be a very common technique a few years ago.

The downside is that this makes the overall page structure complicated and less flexible. Also, the big image needs to be loaded even if only an arrow needs to be used from it, adding to the overall weight of the page. Adding another icon through sprites is time-consuming as you have to first modify the image and make sure it doesn’t use any pixel ever used elsewhere on the site. This can lead to several bugs in the design of the website. Similarly, changing the size of one HTML element that displays an icon from the big image will require you to resize the icons on the big image and reposition all icons on the image that appear after that one.

This is complicated and yields almost no benefit since HTTP/2 is there to do almost the same job for you. HTTP/2 can multiplex several requests and responses on the same connection. Another benefit of not using CSS sprites is that only the images or icons that are absolutely necessary for a page get loaded. Browsers can even prioritize above-the-fold icons and leave below-the-fold images for later, further speeding up the page load.

So, if you are using ‘CSS Sprites’ for your small images, it's better to let them load freely and let the browser decide how to prioritize their requests and also which one to request at one time.

12. Update Often

WordPress 5.5 will soon be released and will add support for native lazy loading of images. So, the sites relying on plugins to use this feature will now have a native lazy load solution built right into the WordPress core.

Lazy loading is an important performance optimization, and in this case, it will be available to millions of websites by just a click to update WordPress to the next version.

Periodically updating the CMS, plugins, theme, tools, server software, language runtime, and even the operating system brings noticeable performance improvements. For example, every new PHP release has brought considerable performance improvements, and this trend seems set to continue with PHP 8 on the horizon, which will ship with a new JIT compiler. The same is the case with Node.js, which benefits from the new speed and memory optimizations that come with every release of the V8 JavaScript engine.

The same applies to server software like NGINX or Apache. Server developers are currently working on the next major version of HTTP, named HTTP/3. HTTP/3 promises to make internet connections faster, more reliable, and more secure. Be sure to apply this update once your favorite server ships this feature.

Early versions of software are usually not very well optimized because, at that stage, speed is usually not the first priority. But as the product matures, the developers may start to put more effort into making it faster to serve even more users and compete better with alternatives. So, as new versions of each piece of software become available, it’s a good practice to update to avoid bugs, get security fixes, and make your stack faster.

Conclusion

While the PageSpeed tool does a really good job of pointing out possible performance pitfalls, it leaves out some optimizations that can help you get the most out of available tools and technologies. I have tried to cover all such optimizations that I know of. I have also covered a few additional things that you might need to know. All this will help you keep your site ahead of your competition.