A technical SEO audit analyzes the technical aspects of a website related to search engine optimization. It ensures search engines like Google can crawl, index, and rank pages on your site.

In a technical SEO audit, you'll look at (and fix) things that could:

- Slow down your site

- Make it difficult for search engines to understand your content

- Make it hard for your pages to appear in search results

- Affect how users interact with your site on different devices

- Impact your site's security

- Create duplicate content issues

- Cause navigation problems for users and search engines

- Prevent important pages from being found

Identifying and fixing such technical issues help search engines better understand and rank your content. Which can mean improved organic search visibility and traffic over time.

How to Perform a Technical SEO Audit

You’ll need two main tools for a technical site audit:

- Google Search Console

- A crawl-based tool, like Semrush’s Site Audit

If you haven't used Search Console before, check out our beginner's guide. We’ll discuss the tool’s various reports below.

And if you’re new to Site Audit, sign up for free account to follow along with this guide.

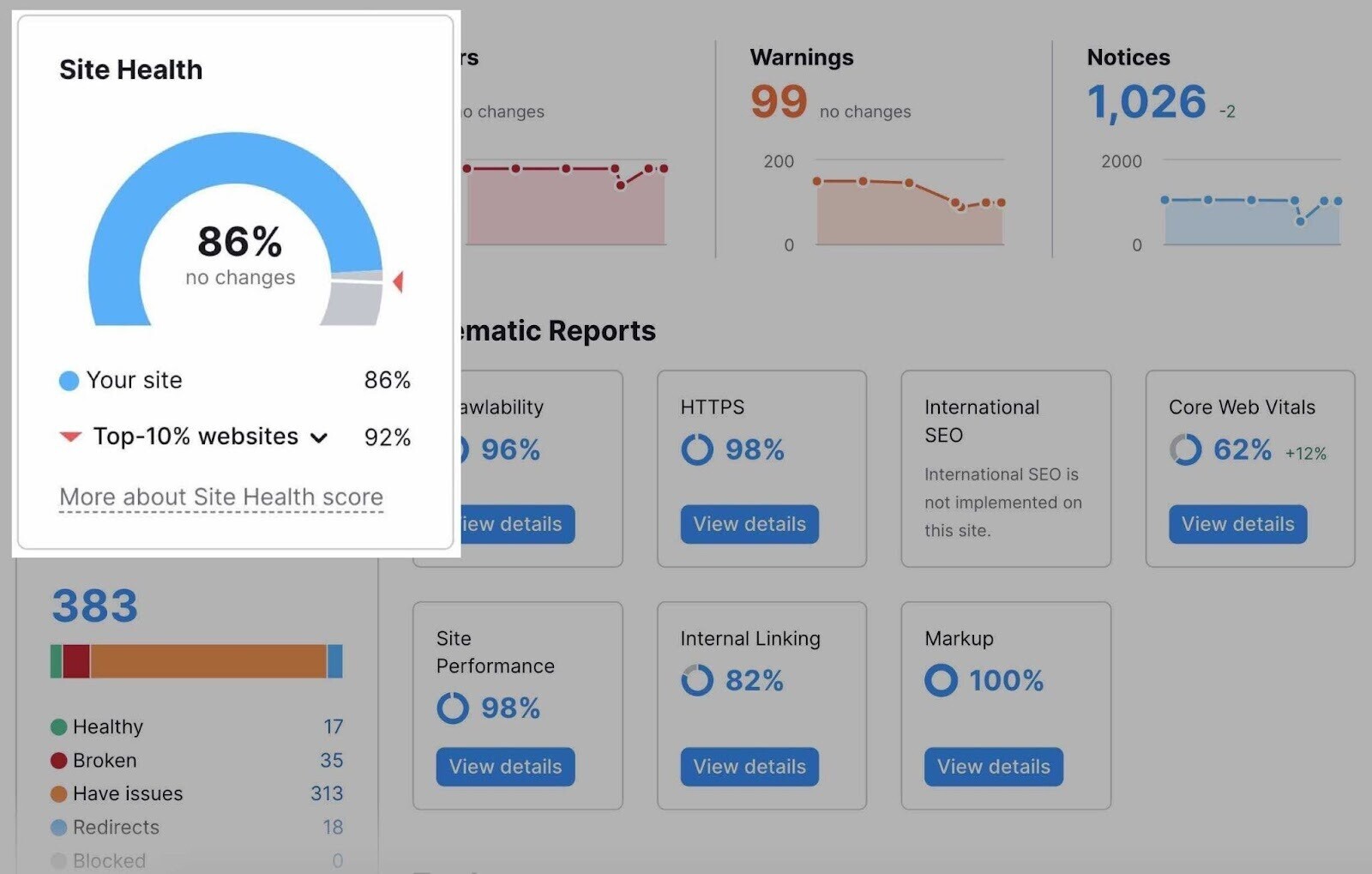

The Site Audit tool scans your website and provides data about each page it crawls. The report it generates shows you a variety of technical SEO issues.

In a dashboard like this:

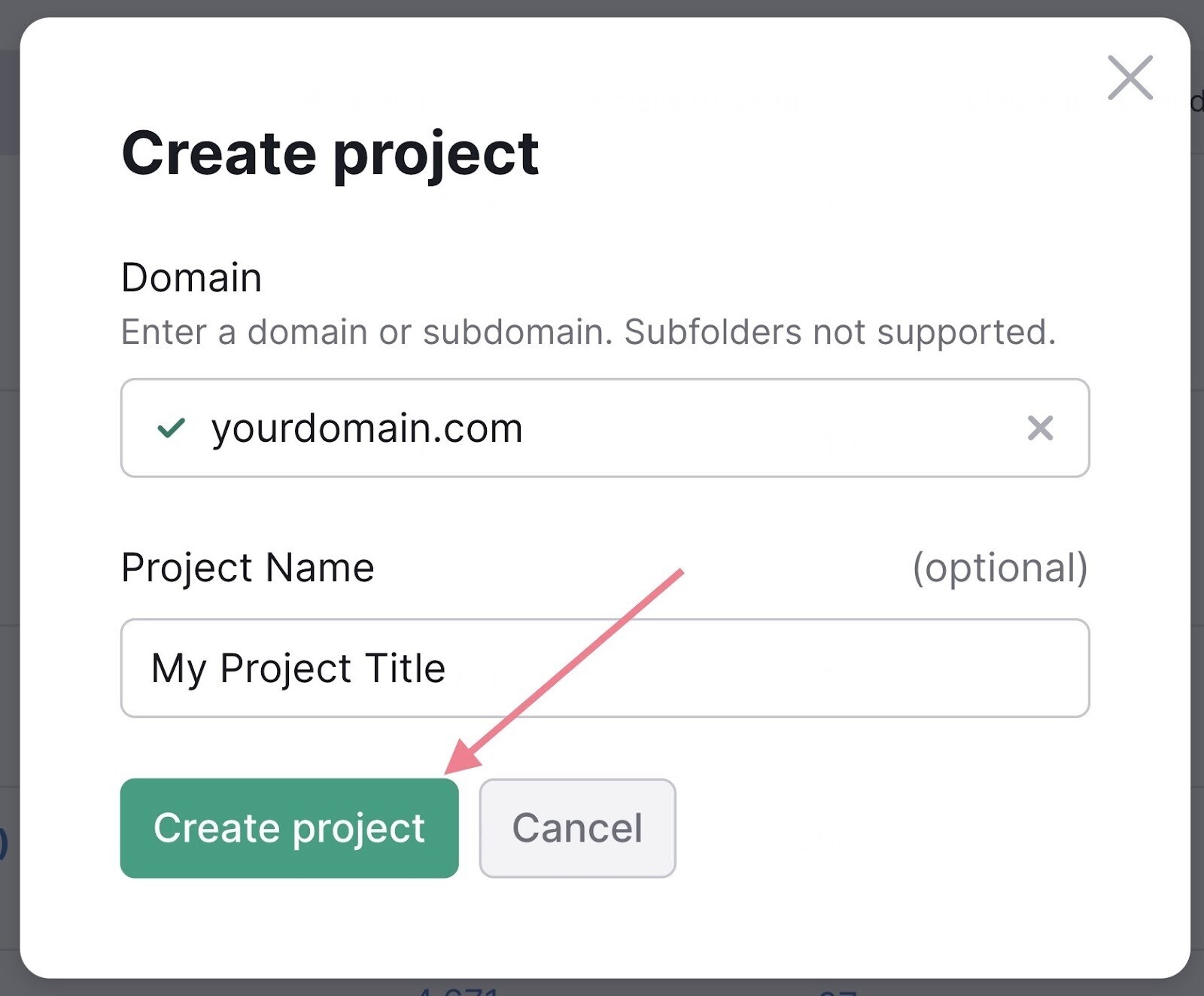

To set up your first crawl, create a project.

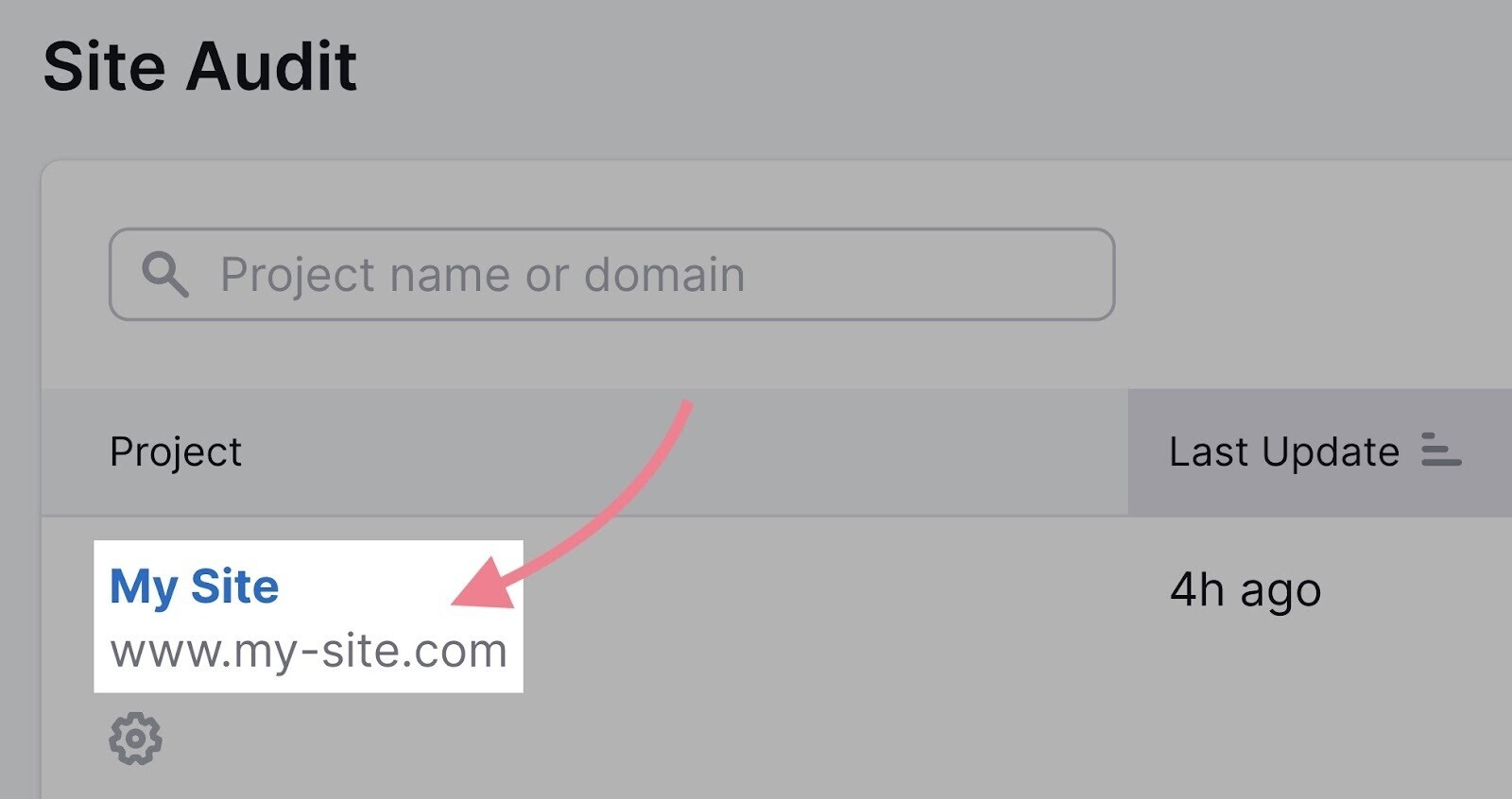

Next, head to the Site Audit tool and select your domain.

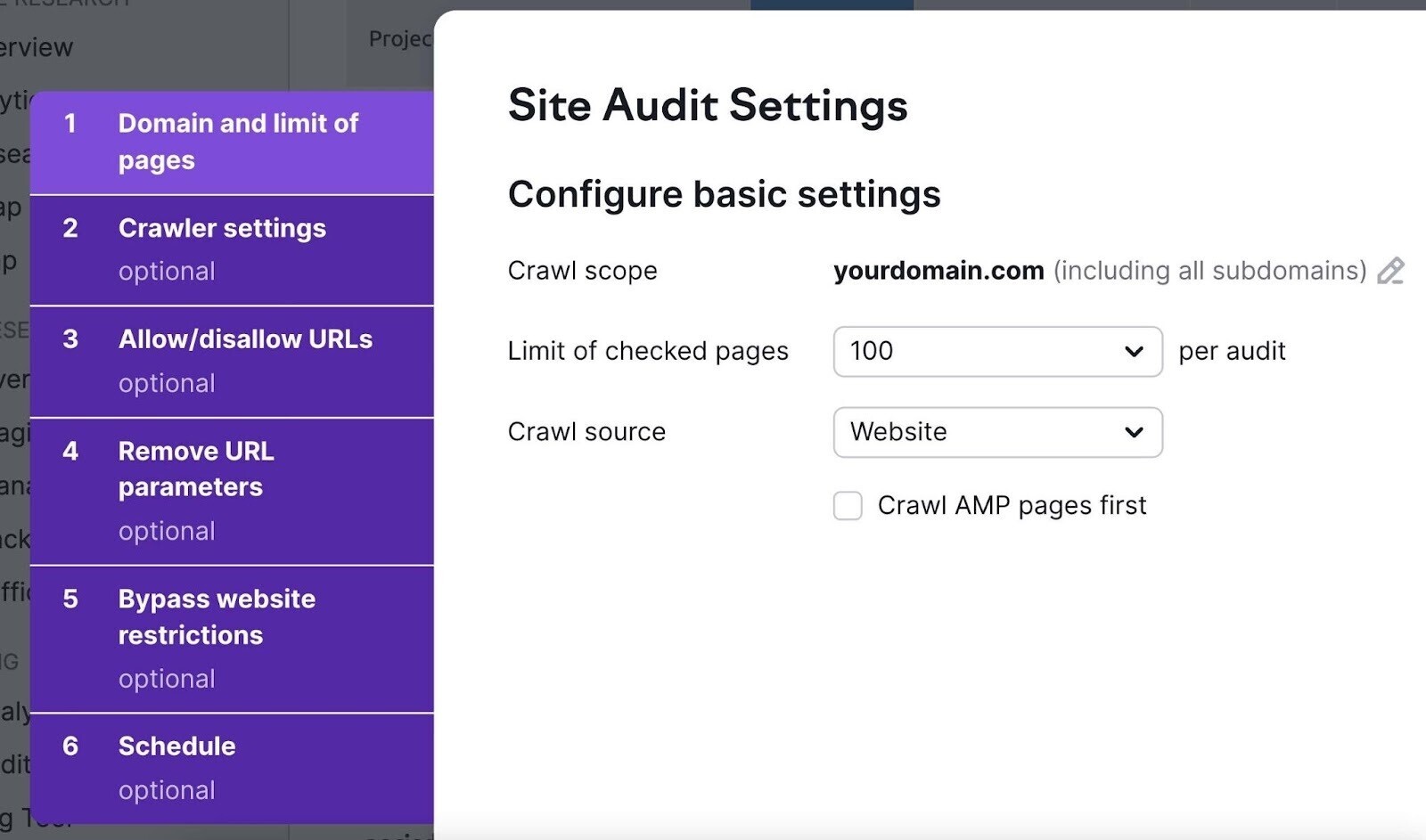

The “Site Audit Settings” window will pop up. Here, configure the basics of your first crawl. Follow this detailed setup guide for help.

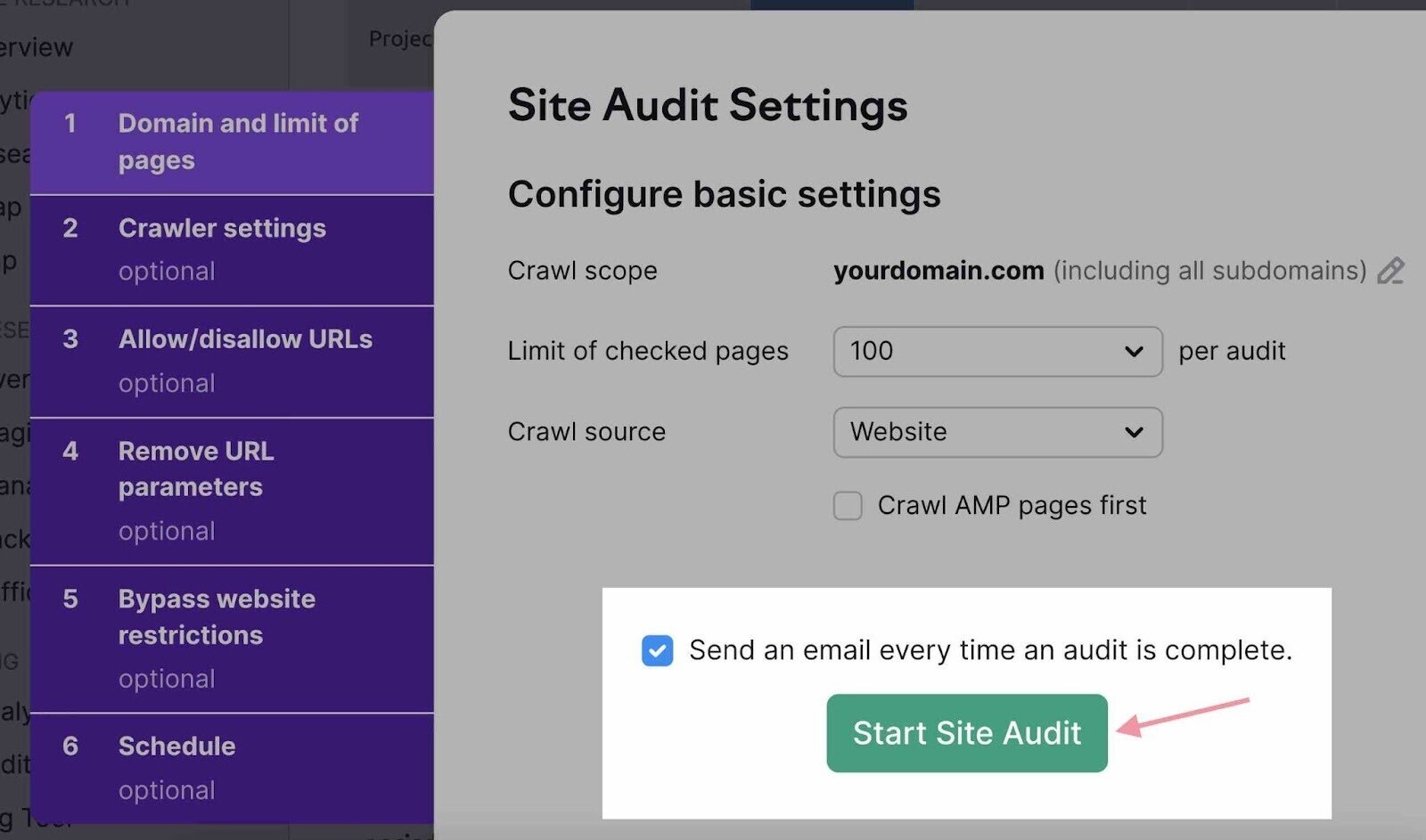

Finally, click “Start Site Audit.”

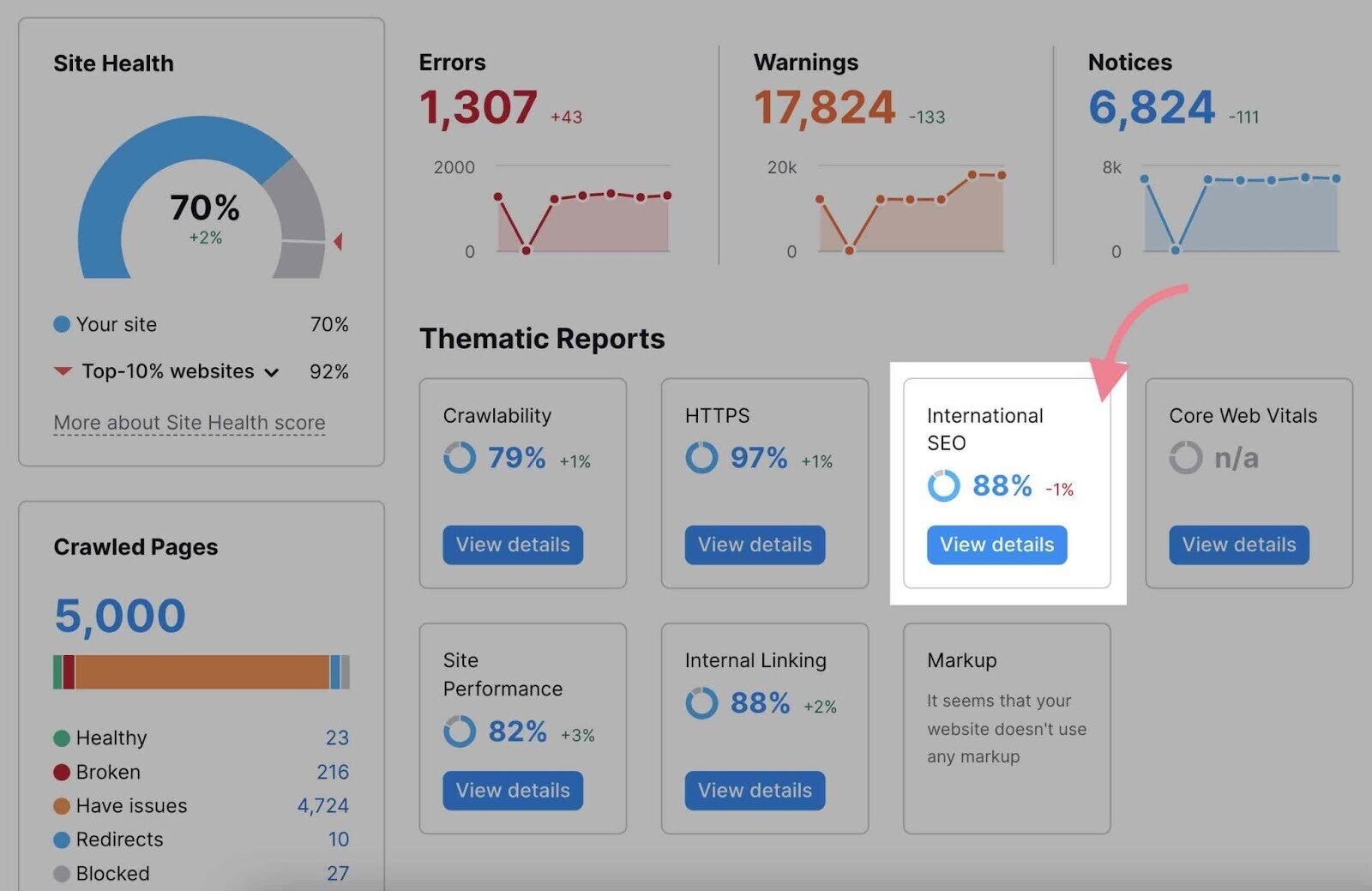

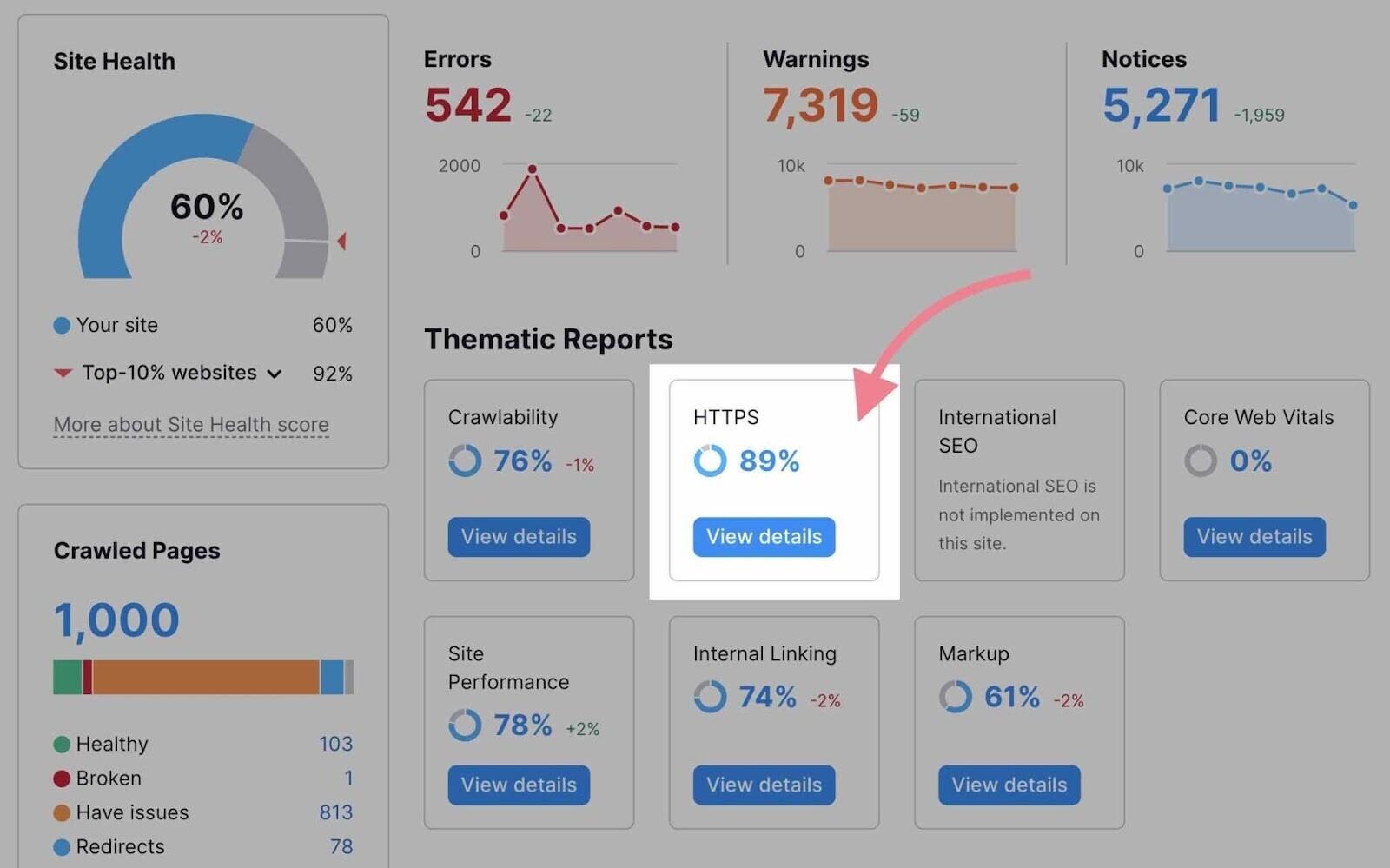

After the tool crawls your site, it generates an overview of your site's health.

This metric grades your website health on a scale from 0 to 100. And how you compare with other sites in your industry.

Your site issues are ordered by severity through the “Errors,” “Warnings,” and “Notices” categories. Or focus on specific areas of technical SEO with “Thematic Reports.”

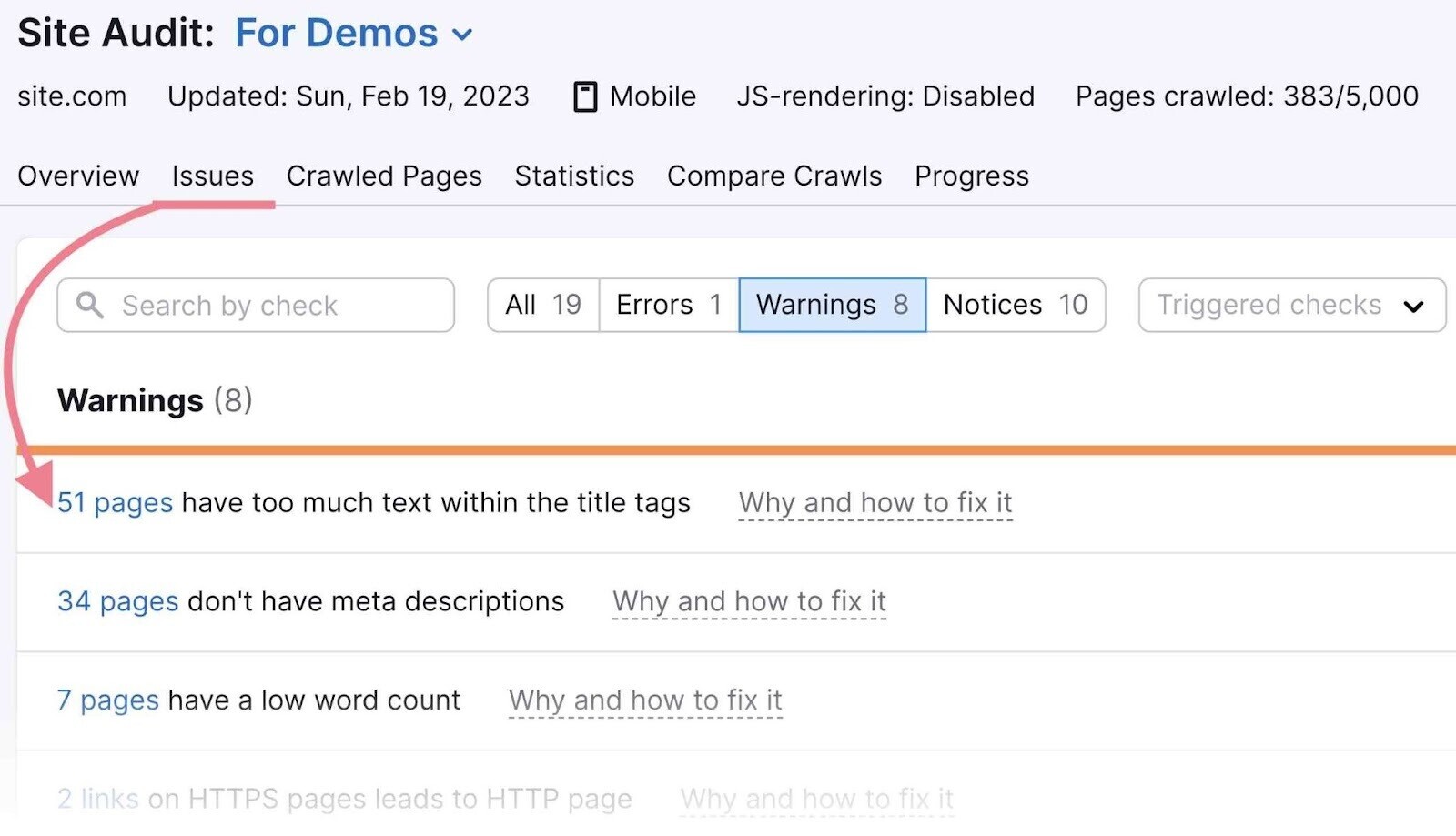

Toggle to the “Issues” tab to see a complete list of all site issues. Along with the number of affected pages.

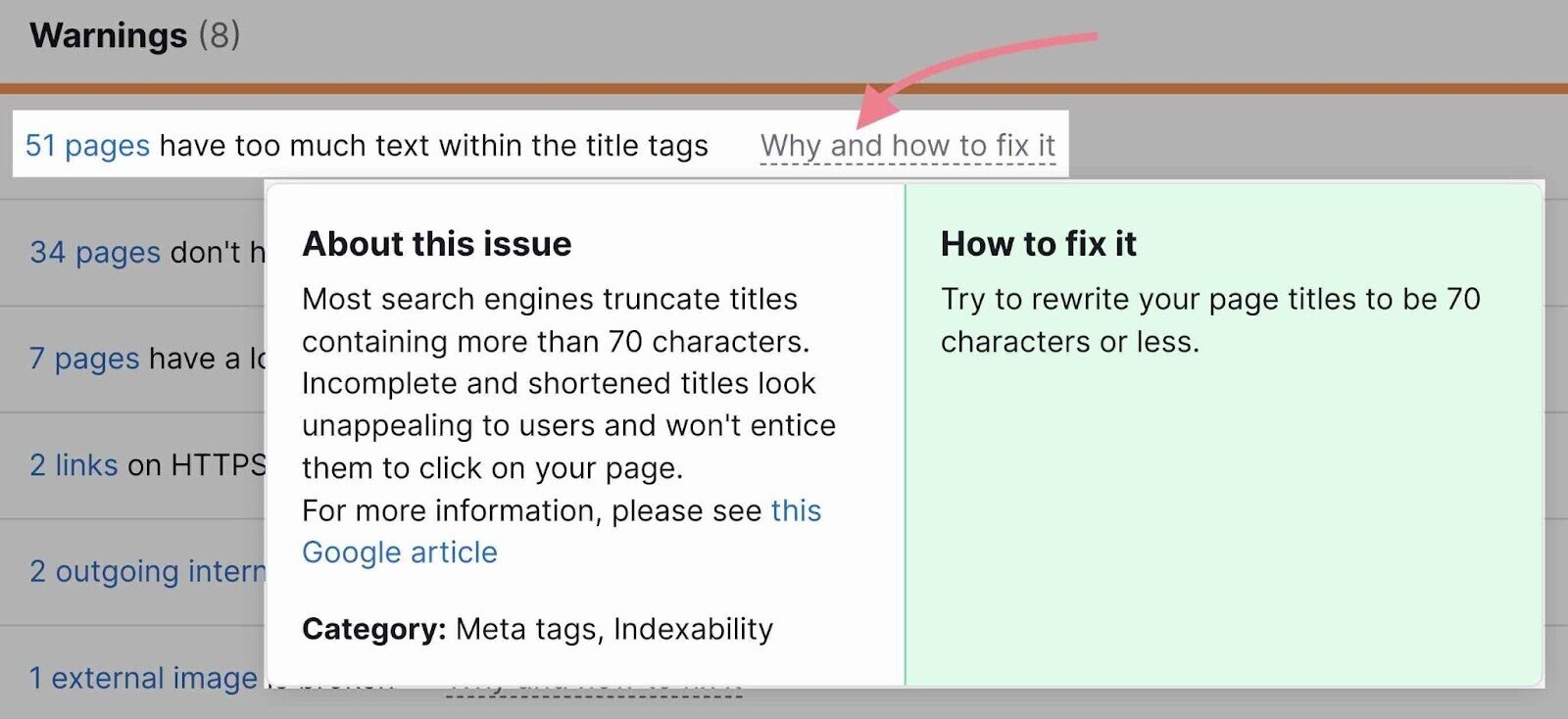

Each issue includes a “Why and how to fix it” link.

The issues you find here will fit into one of two categories, depending on your skill level:

- Issues you can fix on your own

- Issues a developer or system administrator might need to help you fix

Conduct a technical SEO audit on any new site you work with. Then, audit your site at least once per quarter (ideally monthly). Or whenever you see a decline in rankings.

1. Spot and Fix Crawlability and Indexability Issues

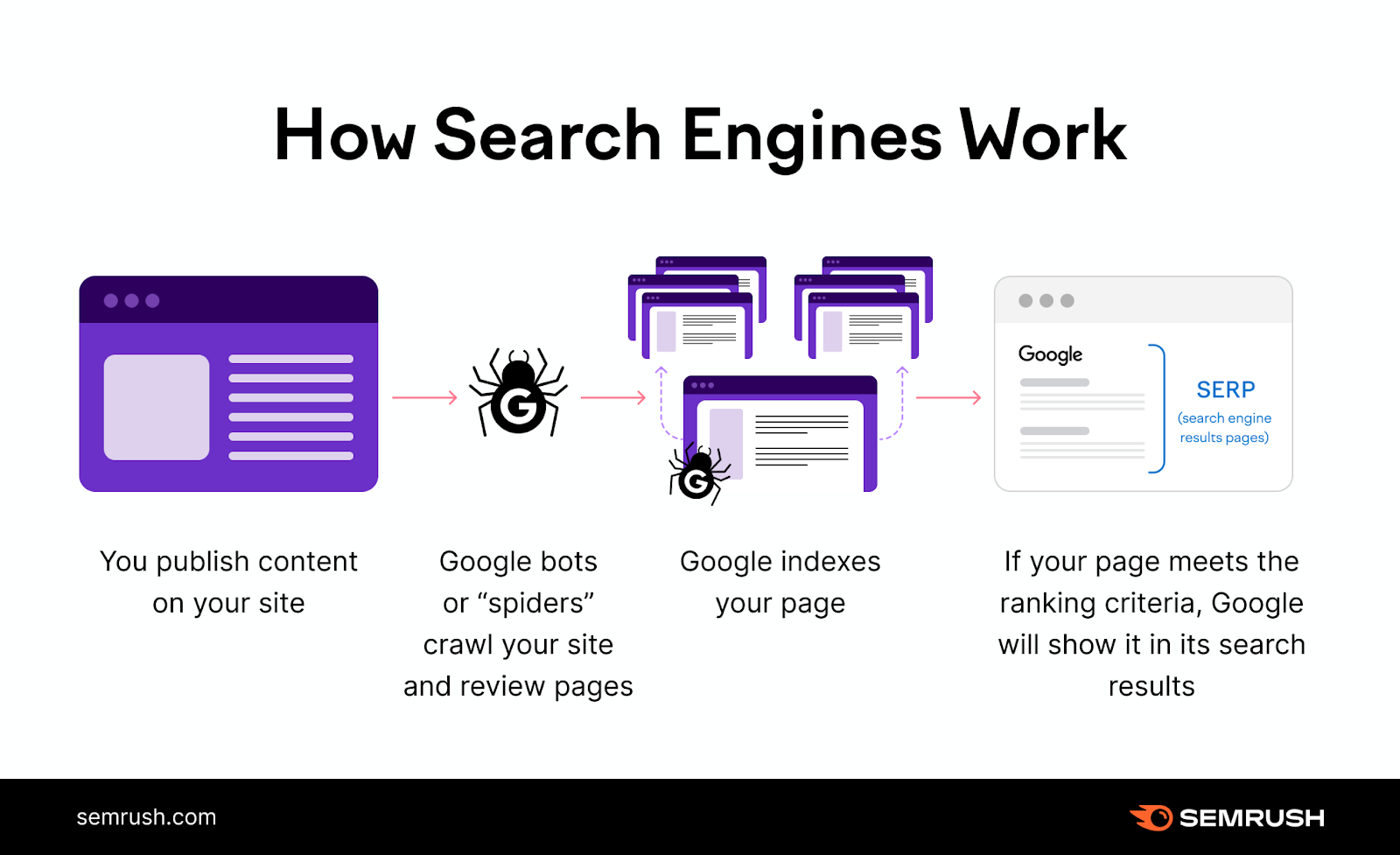

Crawlability and indexability are a crucial aspect of SEO. Because Google and other search engines must be able to crawl and index your webpages in order to rank them.

Google's bots crawl your site by following links to find pages. They read your content and code to understand each page.

Google then stores this information in its index—a massive database of web content.

When someone performs a Google search, Google checks its index to return relevant results.

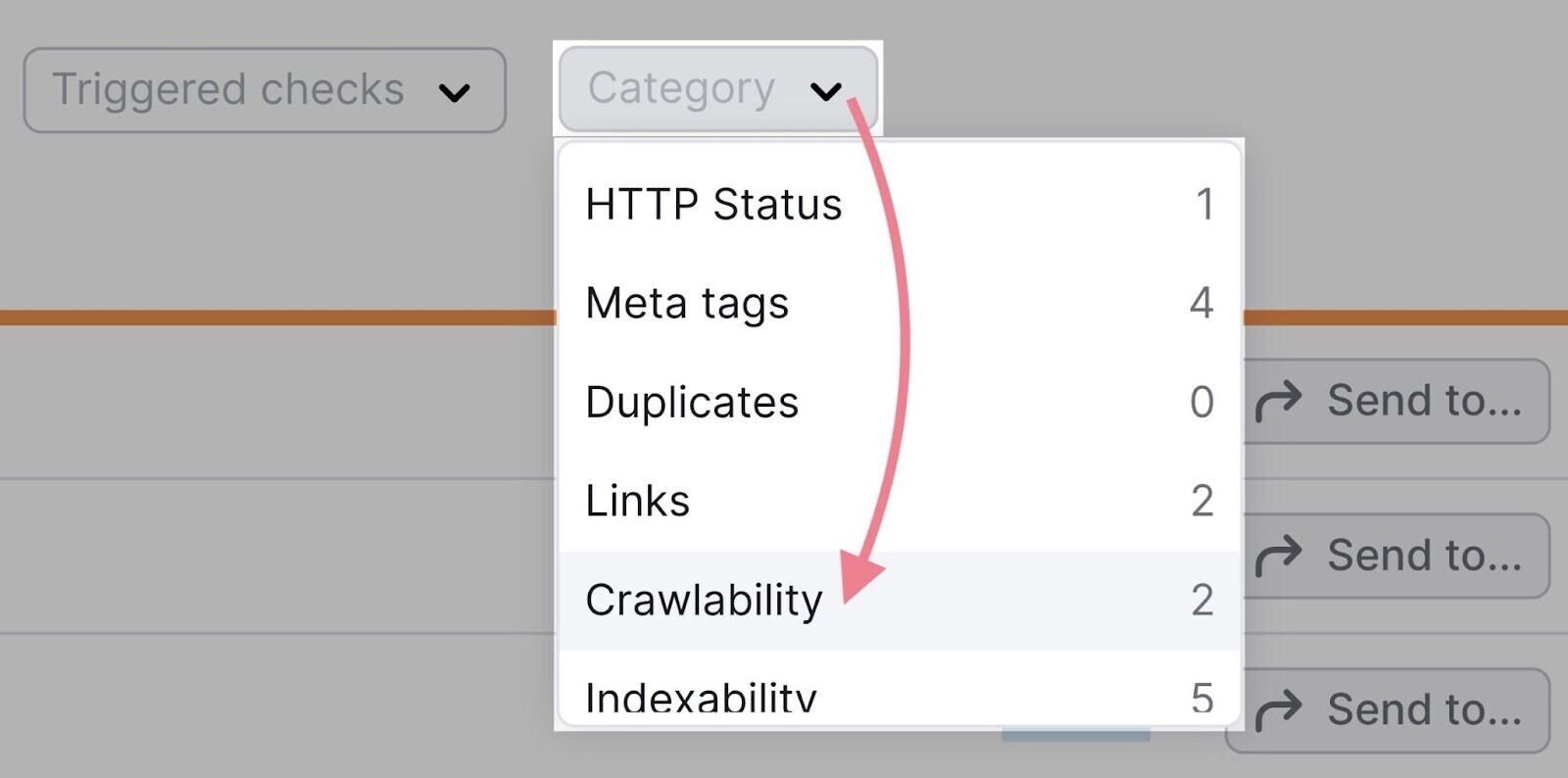

To check if your site has any crawlability or indexability issues, go to the “Issues” tab in Site Audit.

Then, click “Category” and select “Crawlability.”

Repeat this process with the “Indexability” category.

Issues connected to crawlability and indexability will often be at the top of the results in the “Errors” section. Because they’re often more serious. We'll cover several of these issues.

Now, let’s look at two important website files—robots.txt and sitemap.xml—that have a huge impact on how search engines discover your site.

Spot and Fix Robots.txt Issues

Robots.txt is a website text file that tells search engines which pages they should or shouldn’t crawl. It can usually be found in the root folder of the site: https://domain.com/robots.txt.

A robots.txt file helps you:

- Point search engine bots away from private folders

- Keep bots from overwhelming server resources

- Specify the location of your sitemap

A single line of code in robots.txt can prevent search engines from crawling your entire site. Make sure your robots.txt file doesn't disallow any folder or page you want to appear in search results.

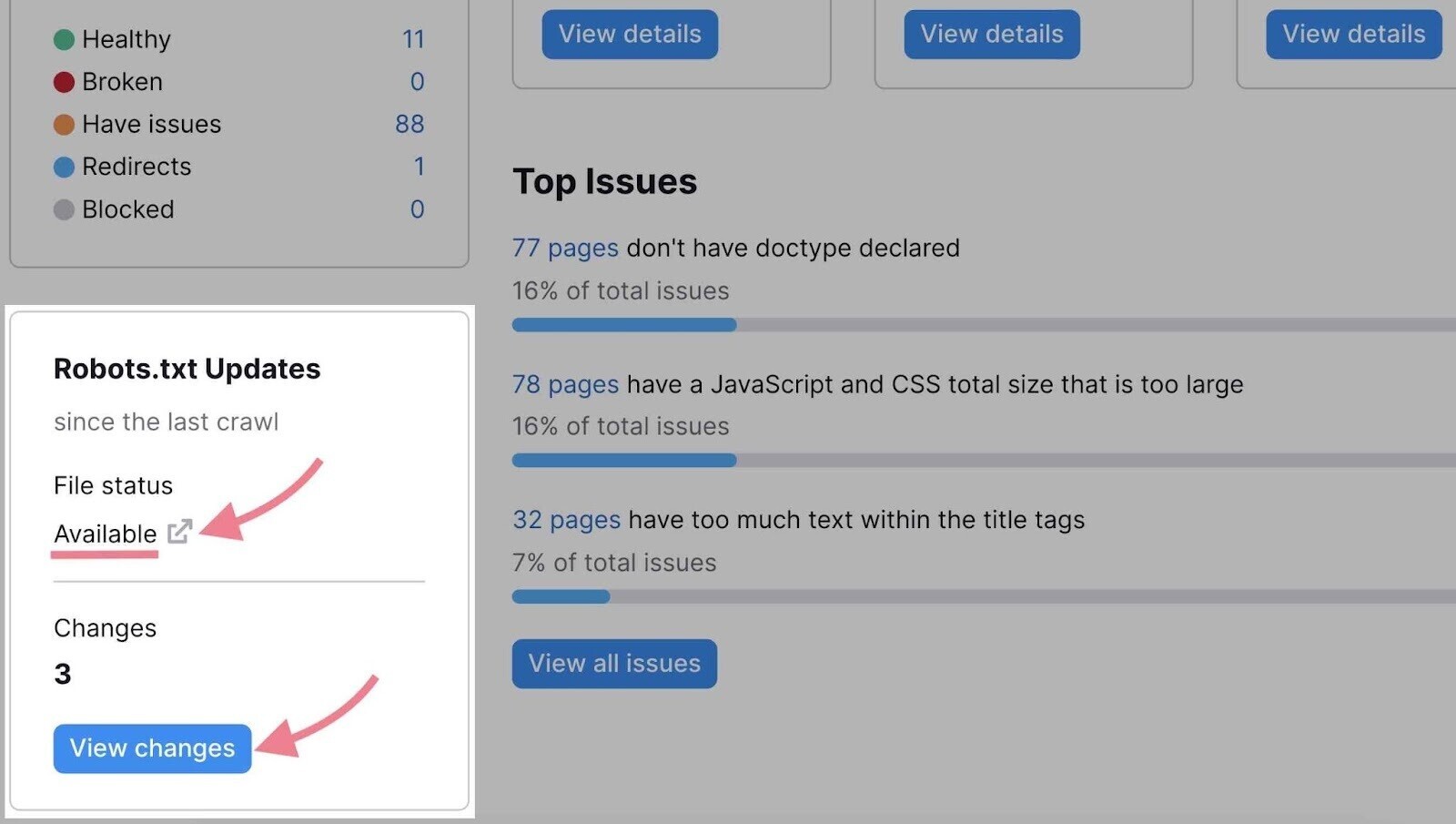

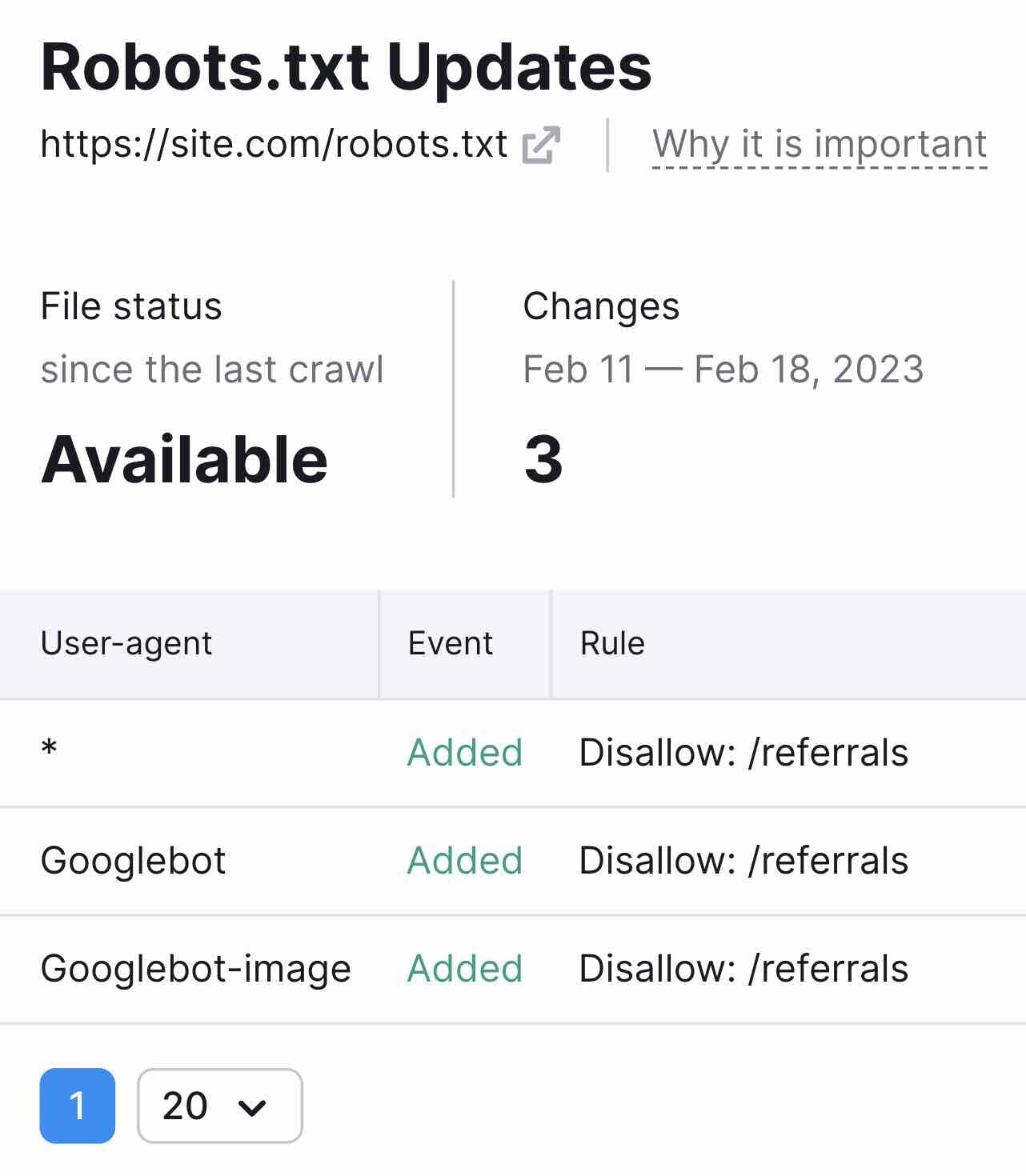

To check your robots.txt file, open Site Audit and scroll down to the “Robots.txt Updates” box at the bottom.

Here, you'll see if the crawler has detected the robots.txt file on your website.

If the file status is “Available,” review your robots.txt file by clicking the link icon next to it.

Or, focus only on the robots.txt file changes since the last crawl by clicking the “View changes” button.

Further reading: Reviewing and fixing the robots.txt file requires technical knowledge. Always follow Google's robots.txt guidelines. Read our guide to robots.txt to learn about its syntax and best practices.

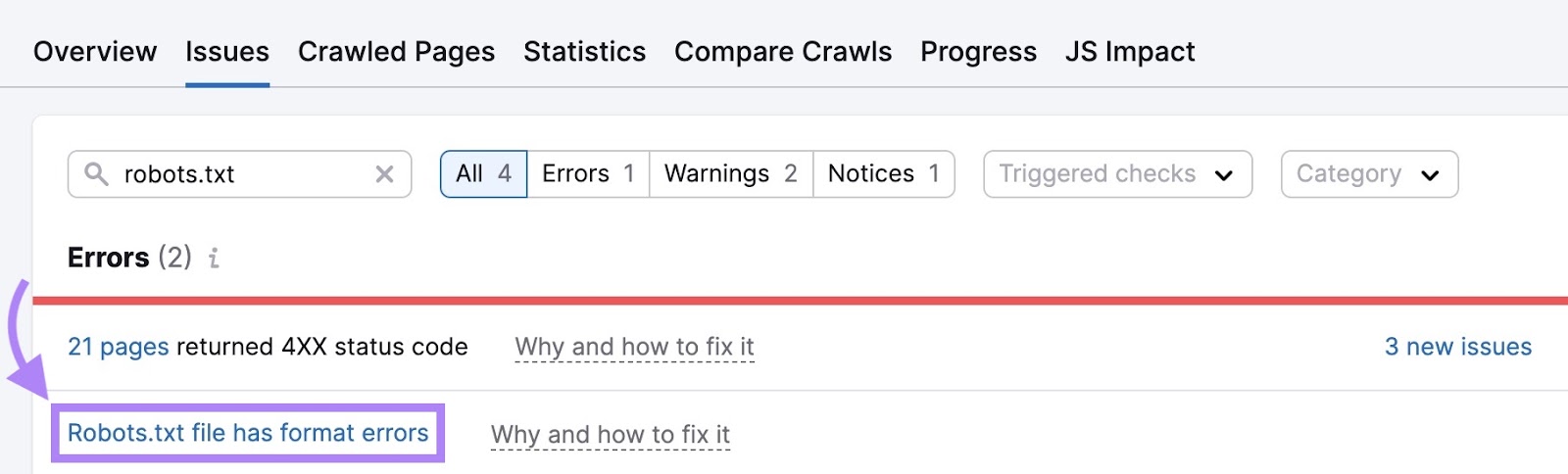

To find further issues, open the “Issues” tab and search “robots.txt.”

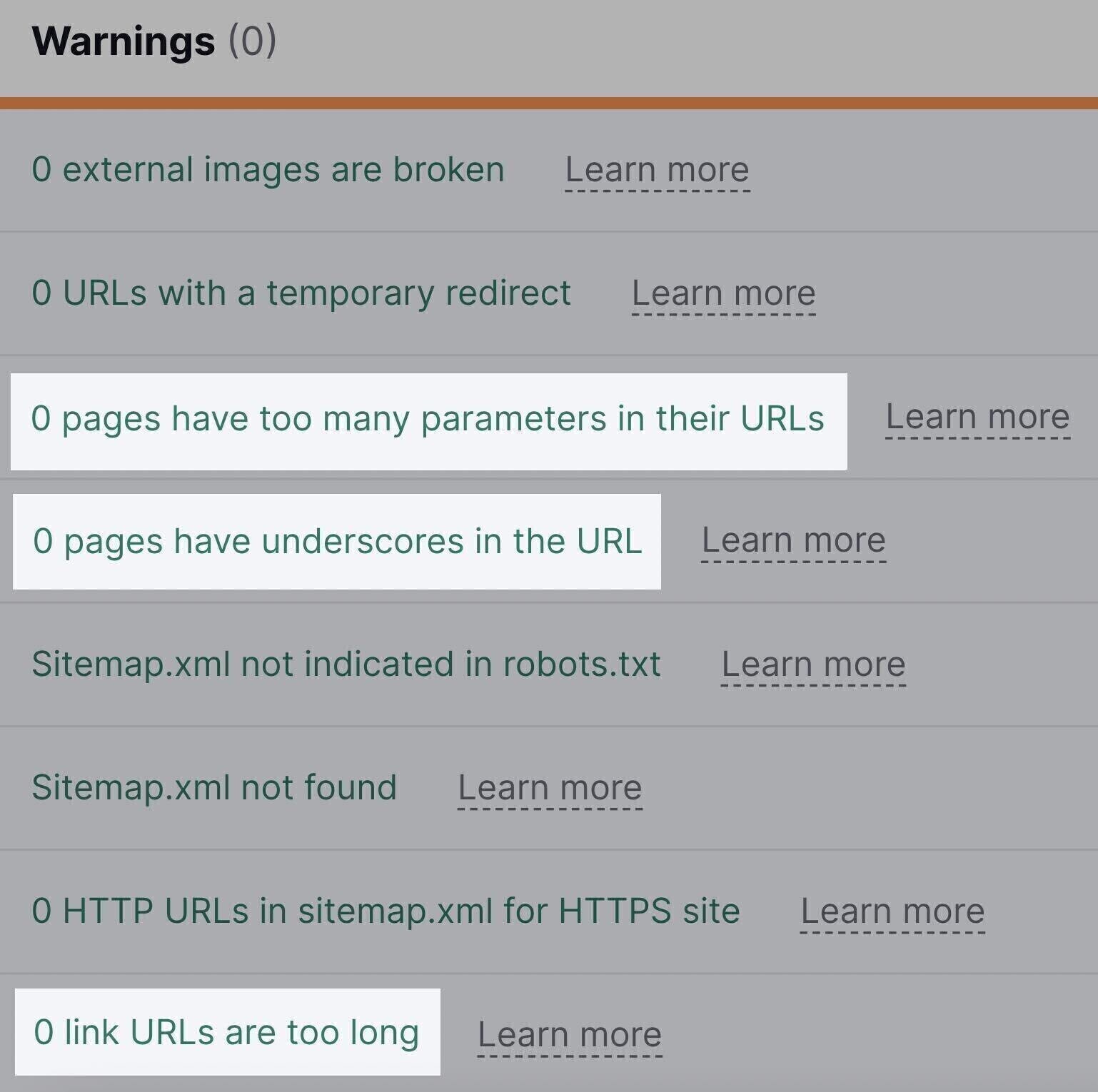

Some issues include:

- Robots.txt file has format errors: Your robots.txt file might have mistakes in its setup. This could accidentally block important pages from search engines or allow access to private content you don't want shown.

- Sitemap.xml not indicated in robots.txt: Your robots.txt file doesn't mention where to find your sitemap. Adding this information helps search engines find and understand your site structure more easily.

- Blocked internal resources in robots.txt: You might be blocking important files (like CSS or JavaScript) that search engines need to properly view and understand your pages. This can hurt your search rankings.

- Blocked external resources in robots.txt: Resources from other websites that your site uses (like CSS, JavaScript, and image files) might be blocked. This can prevent search engines from fully understanding your content.

Click the link highlighting the found issues.

Inspect them in detail to learn how to fix them.

Further reading: Besides the robot.txt file, there are two other ways to provide instructions for search engine crawlers: the robots meta tag and x-robots tag. Site Audit will alert you of issues related to these tags. Learn how to use them in our guide to robots meta tags.

Spot and Fix XML Sitemap Issues

An XML sitemap is a file that lists all the pages you want search engines to index and rank.

Review your XML sitemap during every technical SEO audit to ensure it includes all pages you want to rank.

Also check that the sitemap doesn’t include pages you don’t want in the SERPs. Like login pages, customer account pages, or gated content.

Next, check whether your sitemap works correctly.

The Site Audit tool can detect common sitemap-related issues, such as:

- Format errors: Your sitemap has mistakes in its setup. This could confuse search engines, causing them to ignore your sitemap entirely.

- Incorrect pages found: You've included pages in your sitemap that shouldn't be there, like duplicate content or error pages. This can waste your crawl budget and confuse search engines.

- File is too large: Your sitemap is bigger than search engines prefer. This might lead to incomplete crawling of your site.

- HTTP URLs in sitemap.xml for HTTPS site: Your sitemap lists unsecure versions of your pages on a secure site. This mismatch could mislead search engines.

- Orphaned pages: You've included pages in your sitemap that aren't linked from anywhere else on your site. This could waste the crawl budget on potentially outdated or unimportant pages.

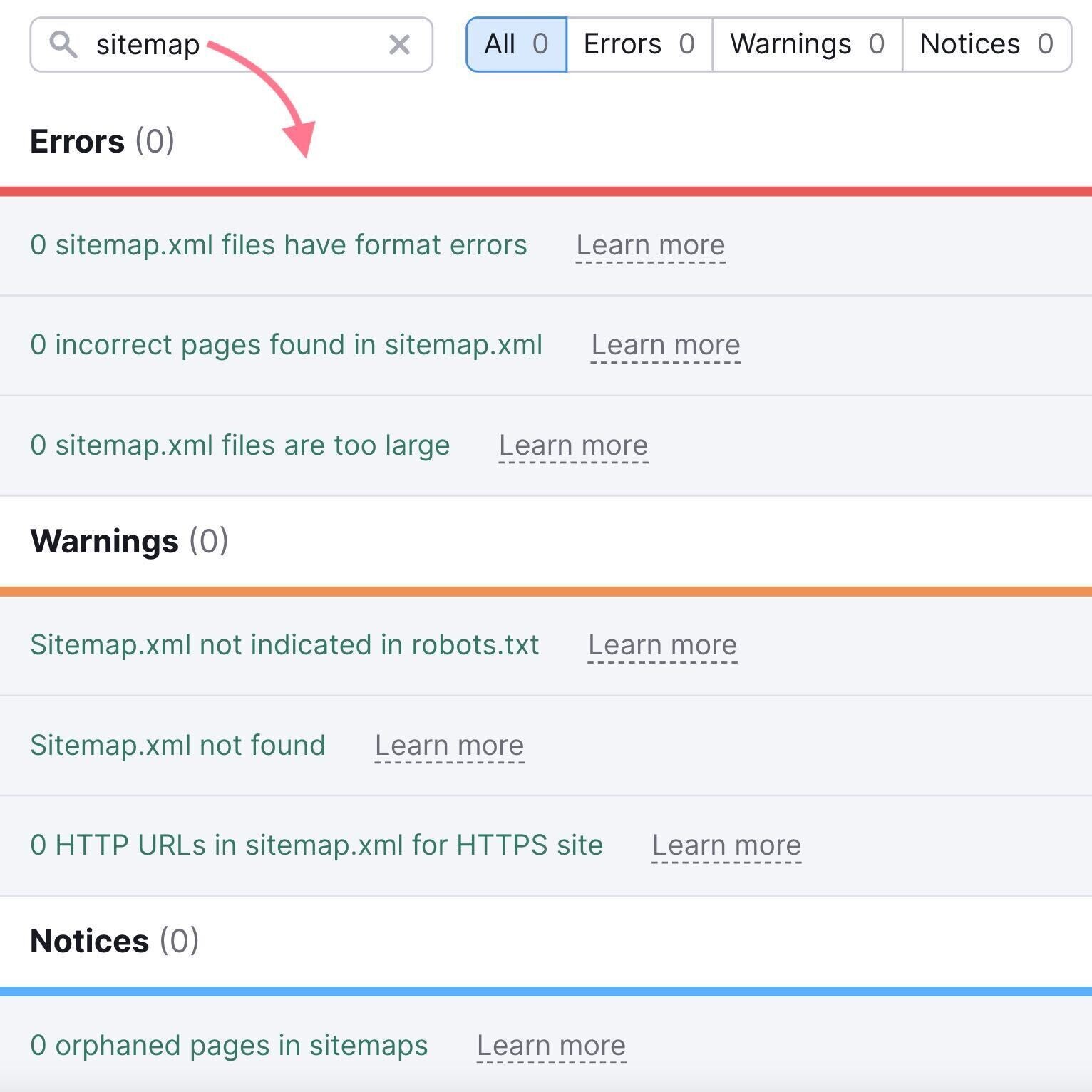

To find and fix these issues, go to the “Issues” tab and type “sitemap” in the search field:

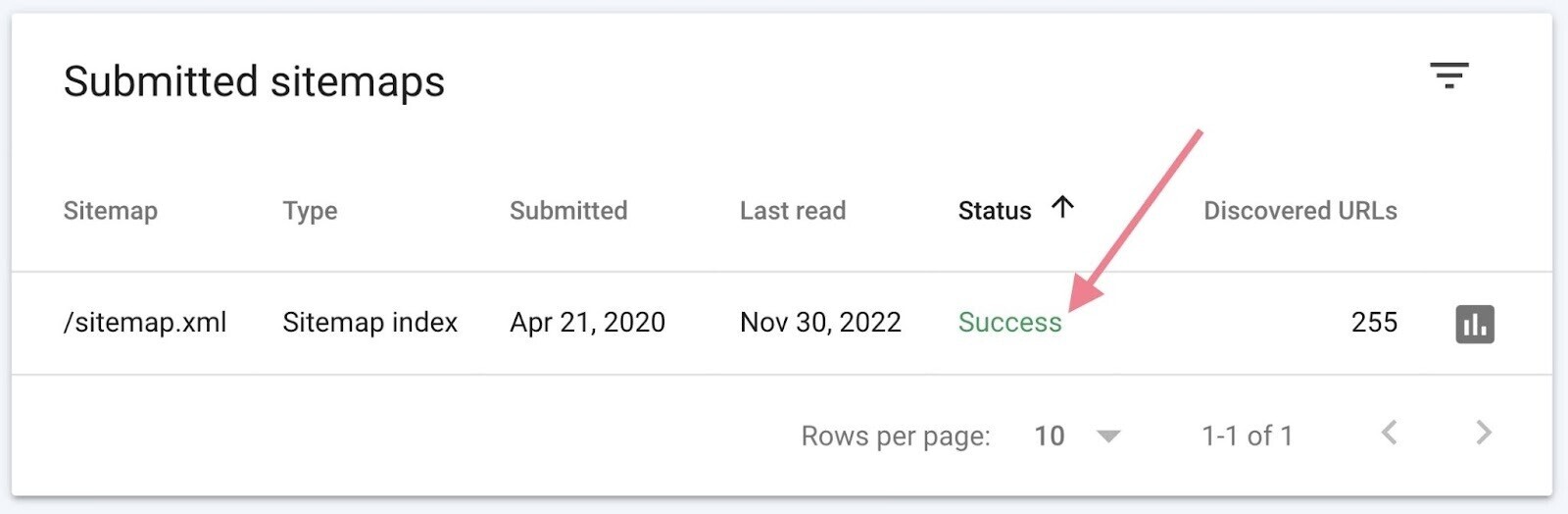

You can also use Google Search Console to identify sitemap issues.

Visit the “Sitemaps” report to submit your sitemap to Google, view your submission history, and review any errors.

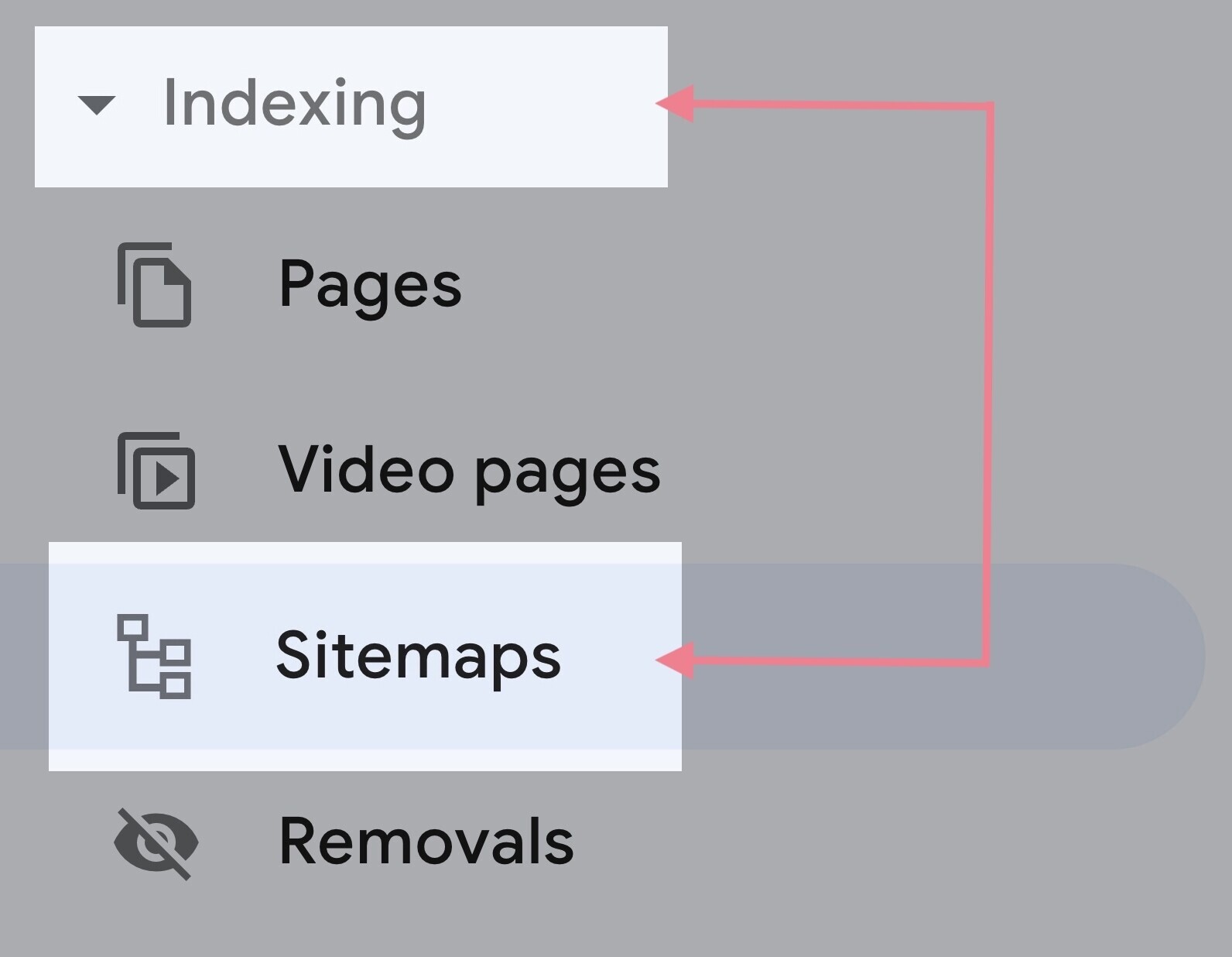

Find it by clicking “Sitemaps” under the “Indexing” section.

If you see “Success” listed next to your sitemap, there are no errors. But the other two statuses—“Has errors” and “Couldn’t fetch”—indicate a problem.

If there are issues, the report will flag them individually. Follow Google's troubleshooting guide to fix them.

Further reading: If your site doesn't have a sitemap.xml file, read our guide on how to create an XML sitemap.

2. Audit Your Site Architecture

Site architecture refers to the hierarchy of your webpages and how they are connected through links. Organize your website so it’s logical for users and easy to maintain as your website grows.

Good site architecture is important for two reasons:

- It helps search engines crawl and understand the relationships between your pages

- It helps users navigate your site

Let's consider three key aspects of site architecture. And how to analyze them with the technical SEO audit tool.

Site Hierarchy

Site hierarchy (or site structure) is how your pages are organized into subfolders.

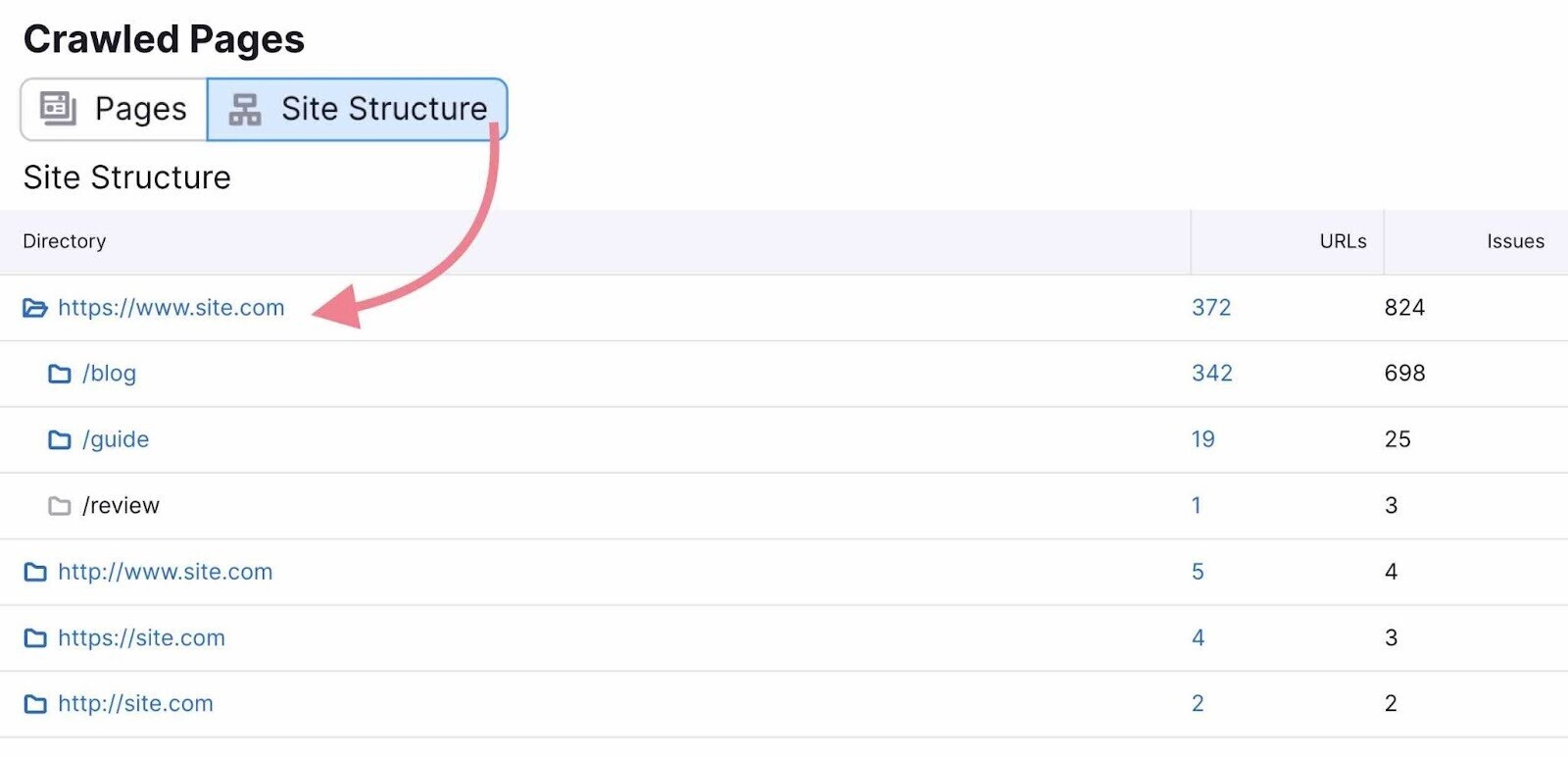

To understand site's hierarchy, navigate to the “Crawled Pages” tab in Site Audit.

Then, switch the view to “Site Structure.”

You’ll see your website’s subdomains and subfolders. Review them to make sure the hierarchy is organized and logical.

Aim for a flat site architecture, which looks like this:

Ideally, it should only take a user three clicks to find the page they want from your homepage.

When it takes more than three clicks to navigate your site, its hierarchy is too deep. Search engines consider pages deep in the hierarchy to be less important or relevant to a search query.

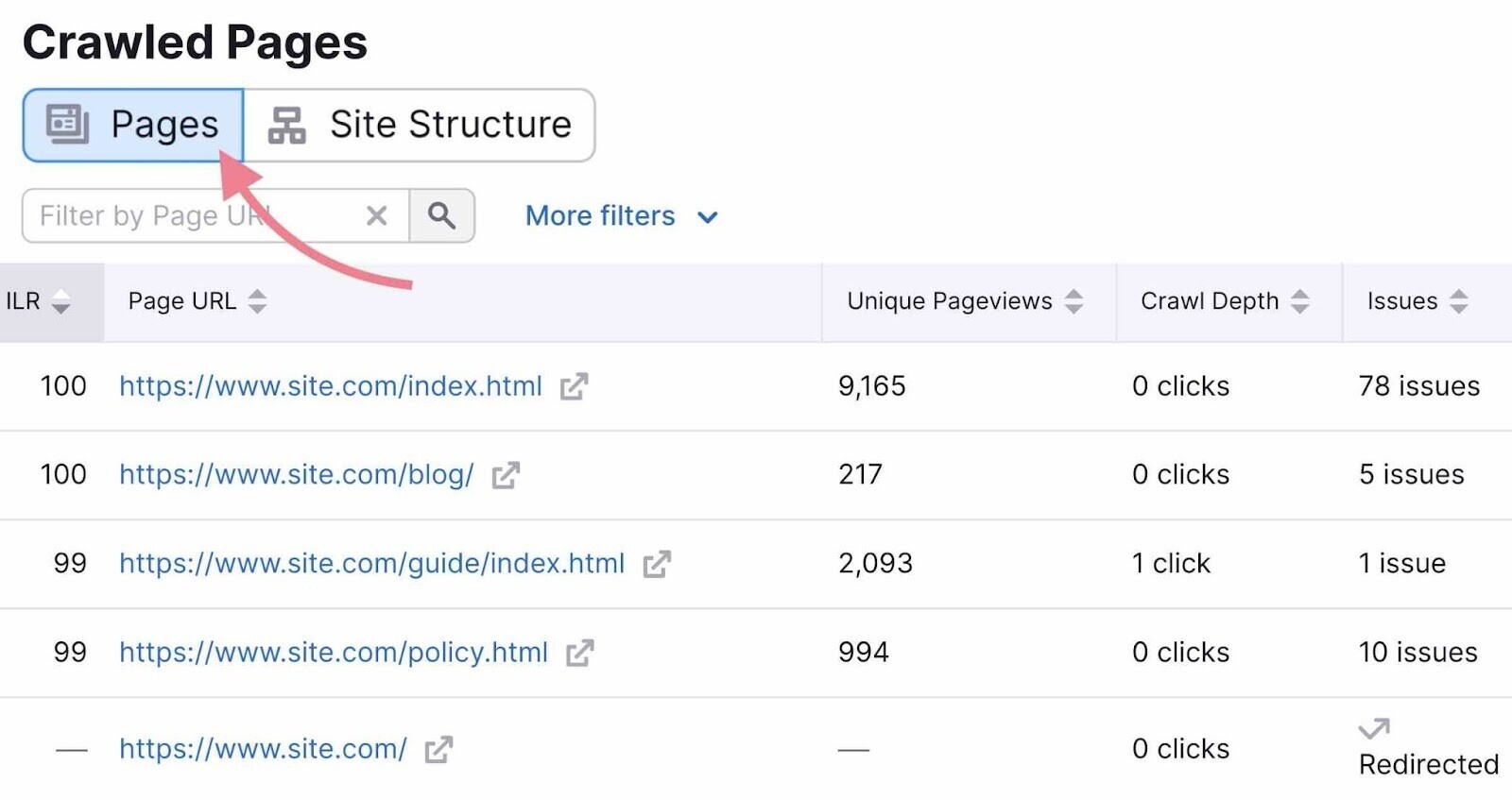

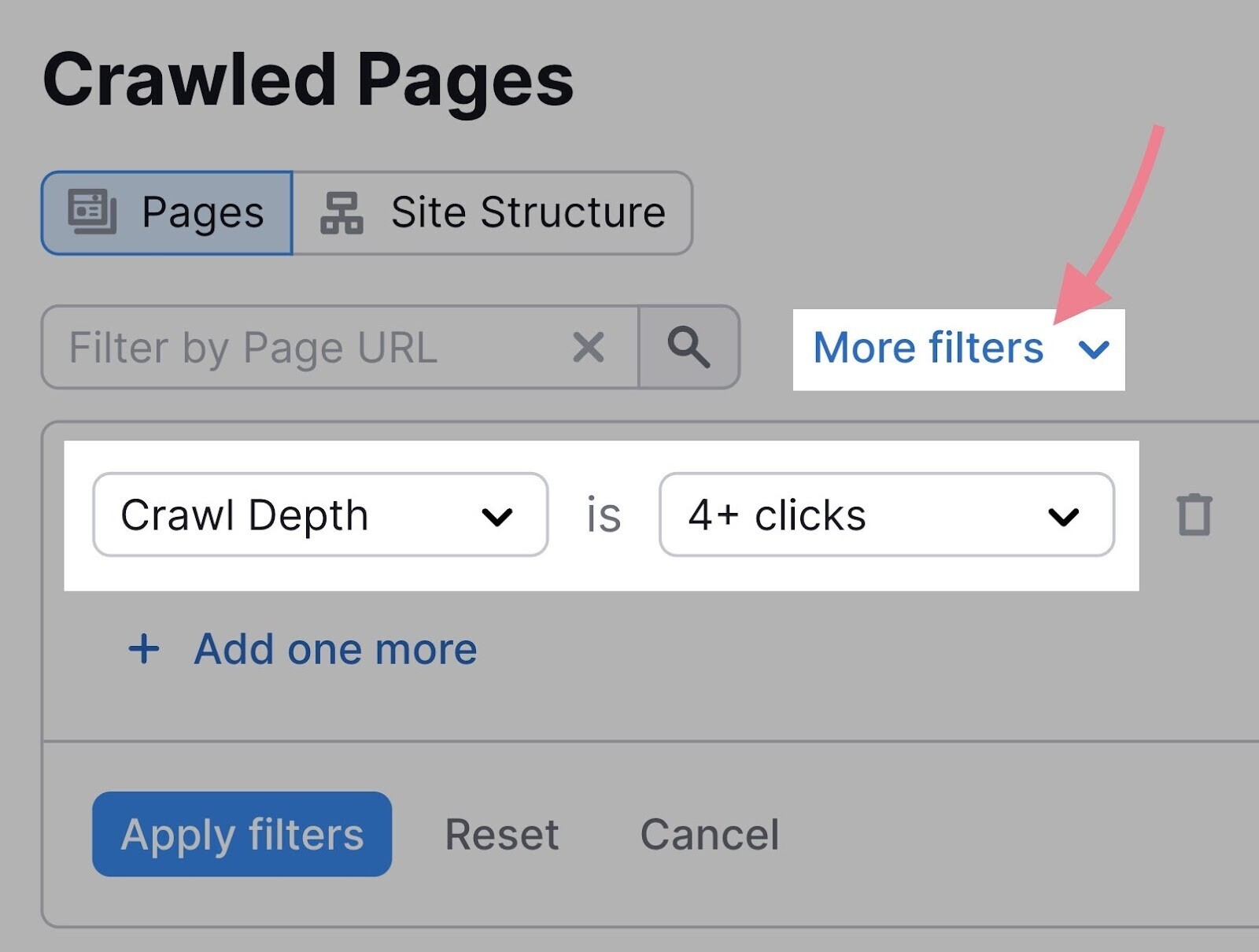

To ensure all your pages fulfill this requirement, stay within the “Crawled Pages” tab and switch back to the “Pages” view.

Then, click “More filters” and select the following parameters: “Crawl Depth” is “4+ clicks.”

To fix this issue, add internal links to pages that are too deep in the site’s structure.

Navigation

Your site's navigation (like menus, footer links, and breadcrumbs) should make it easier for users to navigate your site.

This is an important pillar of good website architecture.

Your navigation should be:

- Simple. Try to avoid mega menus or non-standard names for menu items (like “Idea Lab” instead of “Blog”)

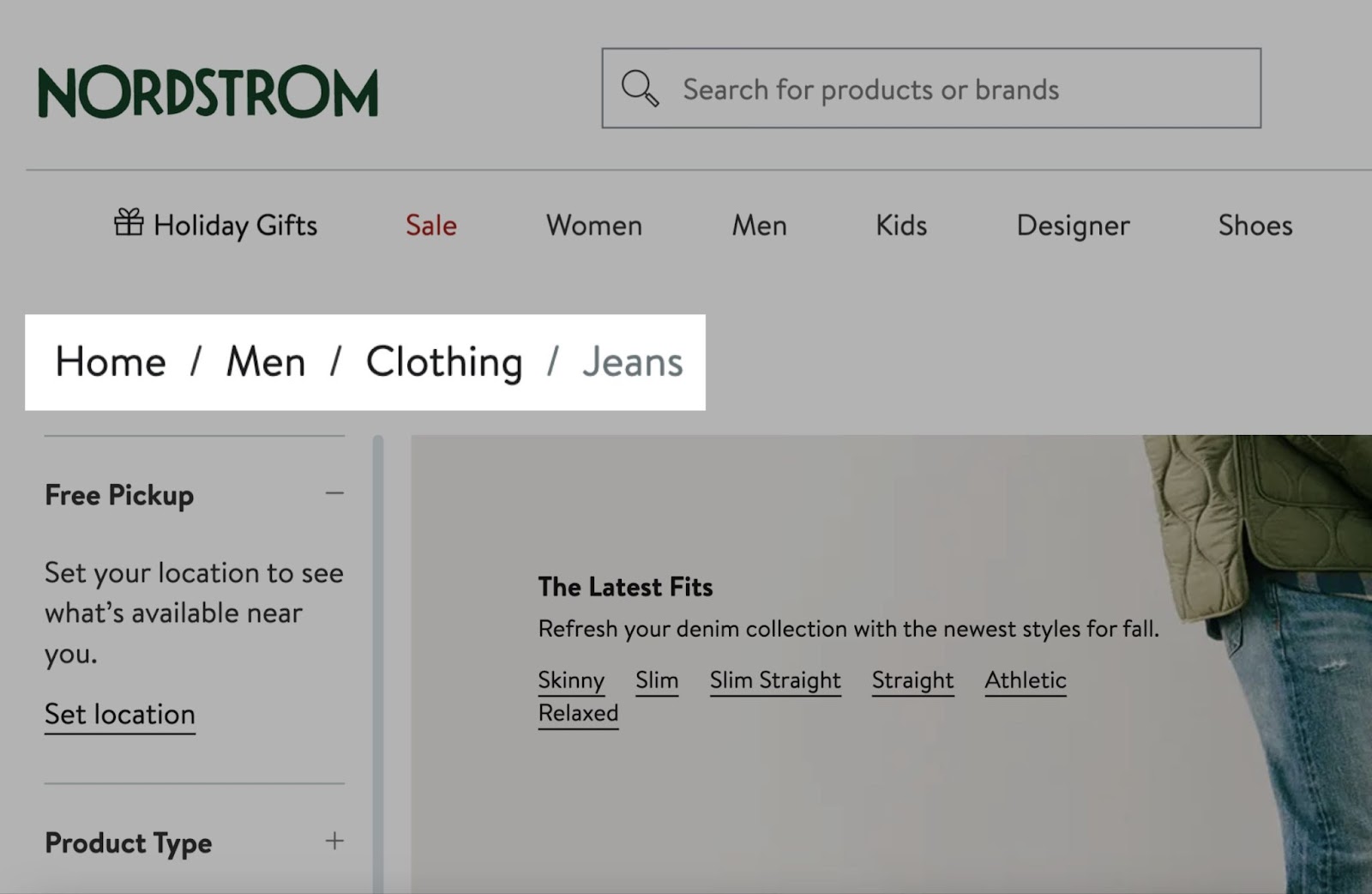

- Logical. It should reflect the hierarchy of your pages. A great way to achieve this is to use breadcrumbs.

Breadcrumbs are a secondary navigation that shows users their current location on your site. Often appearing as a row of links at the top of a page. Like this:

Breadcrumbs help users understand your site structure and easily move between levels. Improving both user experience and SEO.

No tool can help you create user-friendly menus. You need to review your website manually and follow UX best practices for navigation.

URL Structure

Like a website’s hierarchy, a site’s URL structure should be consistent and easy to follow.

Let's say a website visitor follows the menu navigation for girls’ shoes:

Homepage > Children > Girls > Footwear

The URL should mirror the architecture: domain.com/children/girls/footwear

Some sites should also consider using a URL structure that shows a page or website is relevant to a specific country. For example, a website for Canadian users of a product may use either “domain.com/ca” or “domain.ca.”

Lastly, make sure your URL slugs are user-friendly and follow best practices.

Site Audit identifies common issues with URLs, such as:

- Use of underscores in URLs: Using underscores (_) instead of hyphens (-) in your URLs can confuse search engines. They might see words connected by underscores as a single word, potentially affecting your rankings. For example, "blue_shoes" could be read as "blueshoes" instead of "blue shoes".

- Too many parameters in URLs: Parameters are URL elements that come after a question mark, like "?color=blue&size=large". They help with tracking. Having too many can make your URLs long and confusing, both for users and search engines.

- URLs that are too long: Some browsers might have trouble processing URLs that exceed 2,000 characters. Short URLs are also easier for users to remember and share.

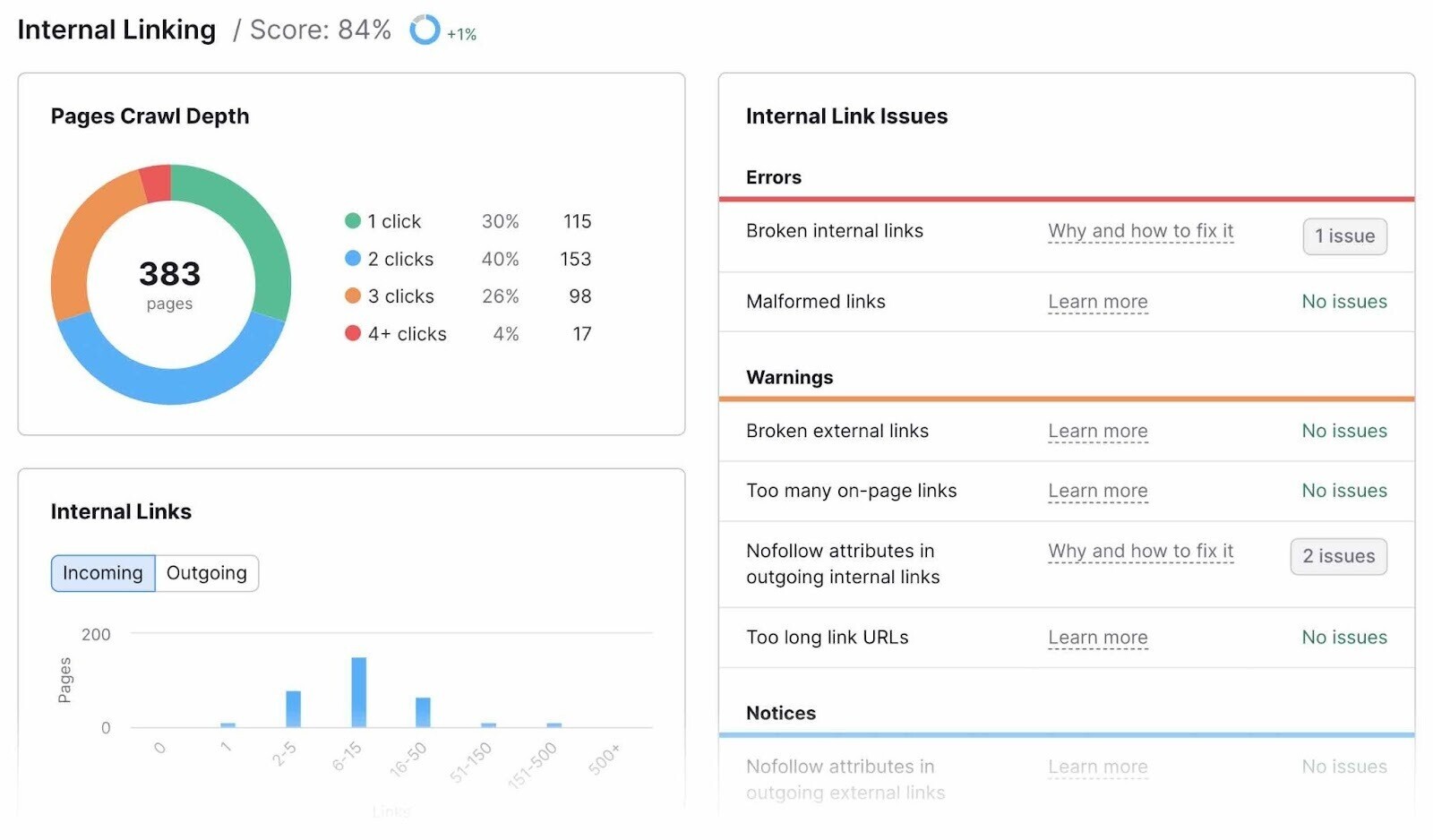

3. Fix Internal Linking Issues

Internal links point from one page to another within your domain.

Internal links are an essential part of a good website architecture. They distribute link equity (also known as “link juice” or “authority”) across your site. Which helps search engines identify important pages.

As you improve your site’s structure, check the health and status of its internal links.

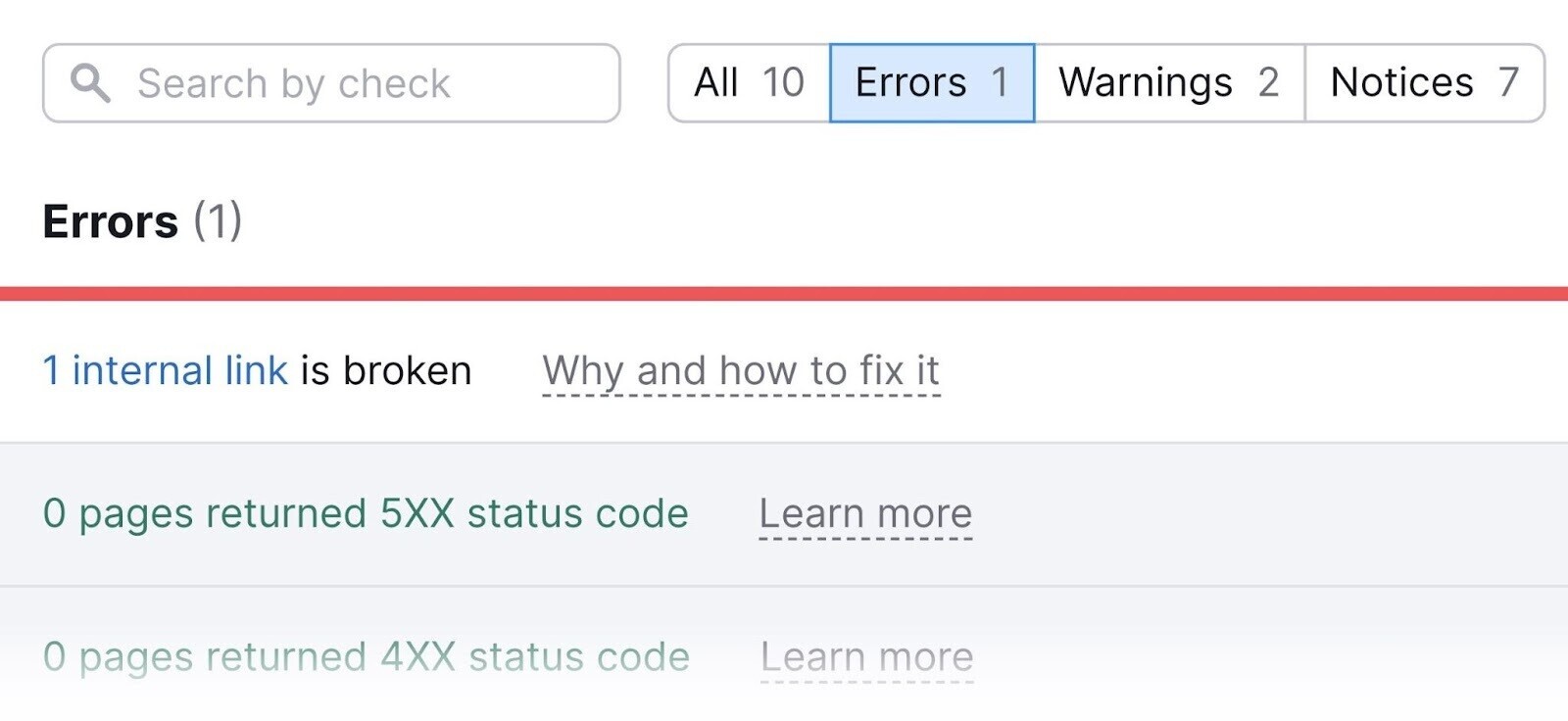

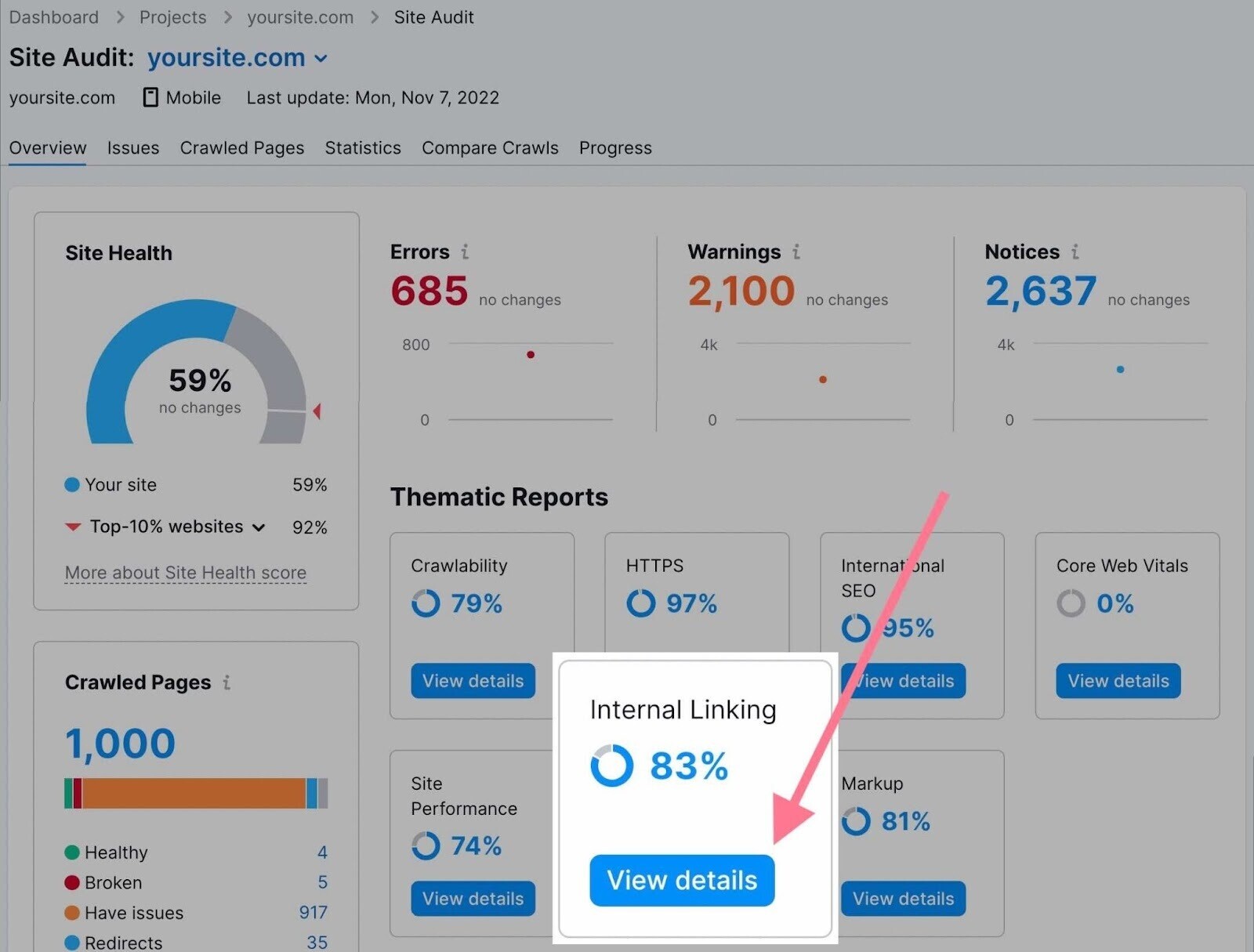

Refer back to the Site Audit report and click “View details” under your “Internal Linking” score.

In this report, you’ll see a breakdown of your site's internal link issues.

Broken internal links—links that point to pages that no longer exist—are a common internal linking mistake. And are fairly easy to fix.

Click the number of issues in the “Broken internal links” error on your “Internal Link Issues” report. And manually update the broken links in the list.

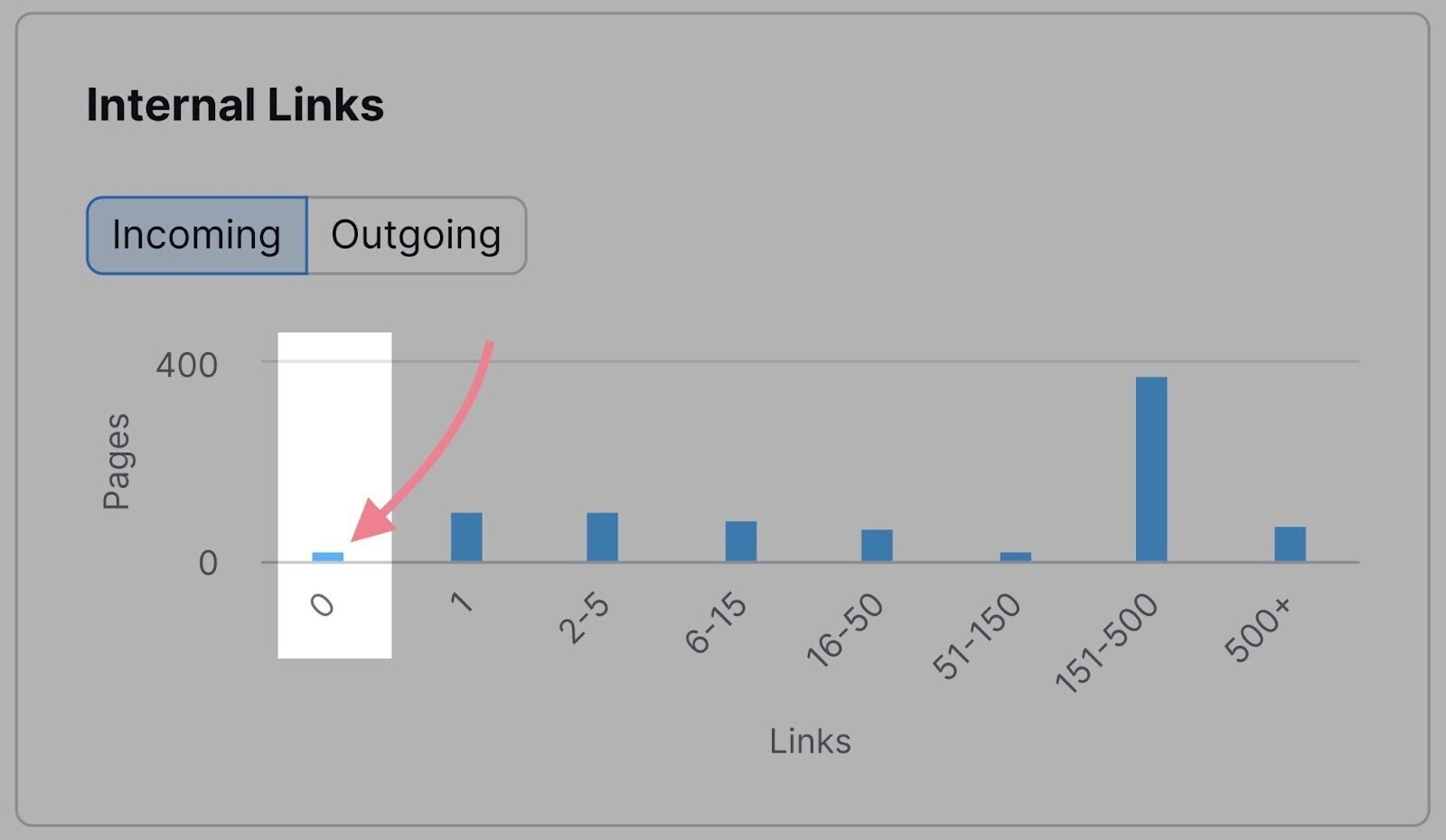

Another easy fix is orphaned pages. These are pages with no links pointing to them. Which means you can’t gain access to them via any other page on the same website.

Check the “Internal Links” bar graph to look for pages with zero links.

Add at least one internal link to each of these pages.

Use the “Internal Link Distribution” graph to see the distribution of your pages according to their Internal LinkRank (ILR).

ILR shows how strong a page is in terms of internal linking. The closer to 100, the stronger a page.

Use this metric to learn which pages could benefit from additional internal links. And which pages you can use to distribute more link equity across your domain.

But don’t continue fixing issues that could have been avoided. Follow these internal linking best practices to avoid issues in the future:

- Make internal linking part of your content creation strategy

- Every time you create a new page, link to it from existing pages

- Don’t link to URLs that have redirects (link to the redirect destination instead)

- Link to relevant pages and use relevant anchor text

- Use internal links to show search engines which pages are important

- Don't use too many internal links (use common sense here—a standard blog post likely doesn't need 50 internal links)

- Learn about nofollow attributes and use them correctly

4. Spot and Fix Duplicate Content Issues

Duplicate content means multiple webpages contain identical or nearly identical content.

It can lead to several problems, including:

- SERPs displaying an incorrect version of your page

- The most relevant pages not performing well in SERPs

- Indexing problems on your site

- Splitting your page authority between duplicate versions

- Increased difficulty in tracking your content's performance

Site Audit flags pages as duplicate content if their content is at least 85% identical.

Duplicate content can happen for two common reasons:

- There are multiple versions of URLs

- There are pages with different URL parameters

Multiple Versions of URLs

For example, a site may have:

- An HTTP version

- An HTTPS version

- A www version

- A non-www version

For Google, these are different versions of the site. So if your page runs on more than one of these URLs, Google considers it a duplicate.

To fix this issue, select a preferred version of your site and set up a sitewide 301 redirect. This will ensure only one version of each page is accessible.

URL Parameters

URL parameters are extra elements of a URL used to filter or sort website content. They're commonly used for product pages with slight changes (e.g., different color variations of the same product).

You can identify them because by the question mark and equal sign.

Because URLs with parameters have almost the same content as their counterparts without parameters, they can often be identified as duplicates.

Google usually groups these pages and tries to select the best one to use in search results. Google will typically identify the most relevant version of the page and display that in search results—while consolidating ranking signals from the duplicate versions.

Nevertheless, Google recommends these actions to reduce potential problems:

- Reduce unnecessary parameters

- Use canonical tags pointing to the URLs with no parameters

Avoid crawling pages with URL parameters when setting up your SEO audit. To ensure the Site Audit tool only crawls pages you want to analyze—not their versions with parameters.

Customize the “Remove URL parameters” section by listing all the parameters you want to ignore:

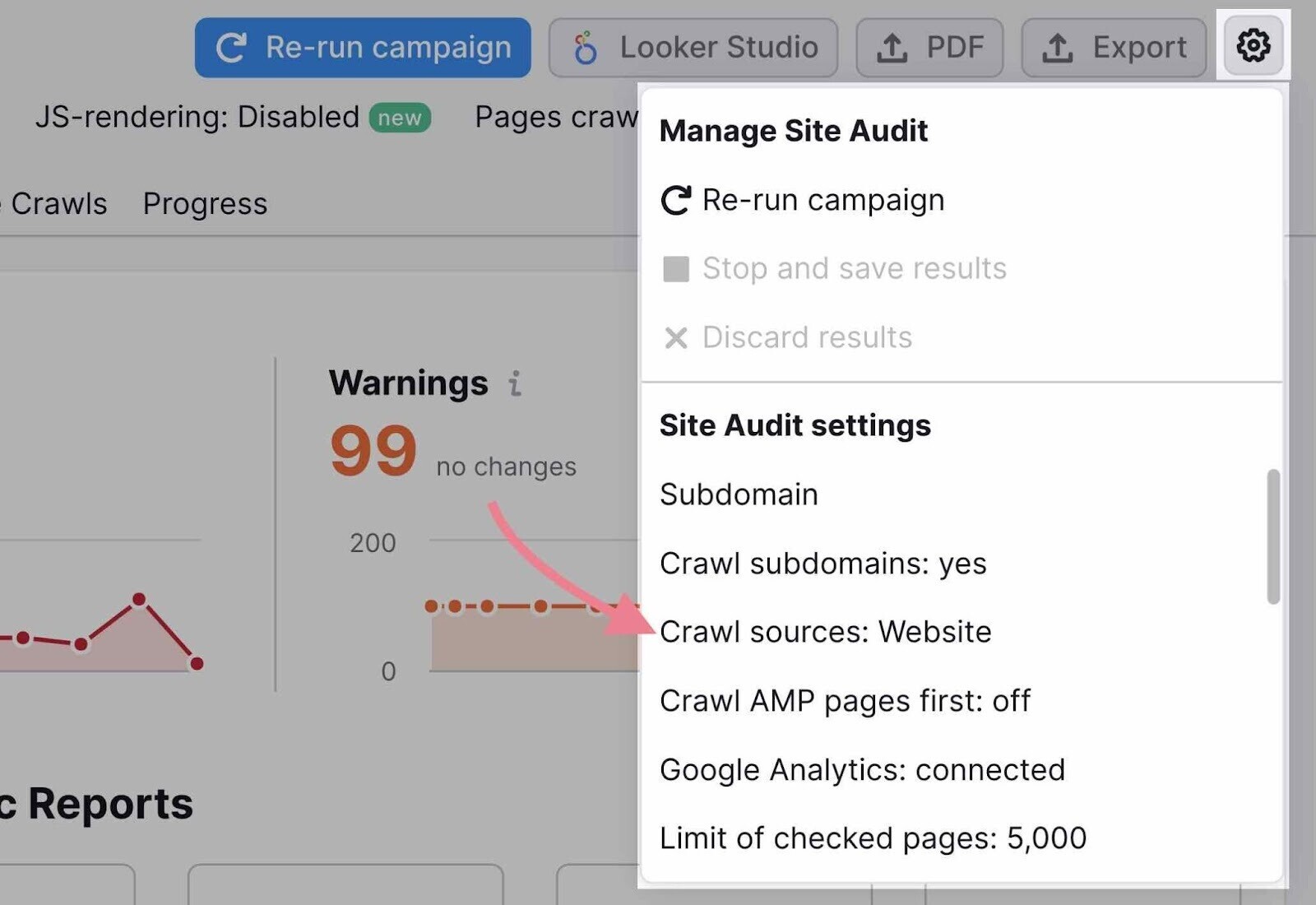

To access these settings later, click the settings (gear) icon in the top-right corner, then click “Crawl sources: Website” under the Site Audit settings.

5. Audit Your Site Performance

Site speed is a crucial aspect of the overall page experience and has long been a Google ranking factor.

When you audit a site for speed, consider two data points:

- Page speed: How long it takes one webpage to load

- Site speed: The average page speed for a sample set of page views on a site

Improve page speed, and your site speed improves.

This is such an important task that Google has a tool specifically made to address it: PageSpeed Insights.

A handful of metrics influence PageSpeed scores. The three most important ones are called Core Web Vitals.

They include:

- Largest Contentful Paint (LCP): measures how fast the main content of your page loads

- Interaction to Next Paint (INP): measures how quickly your page responds to user interactions

- Cumulative Layout Shift (CLS): measures how visually stable your page is

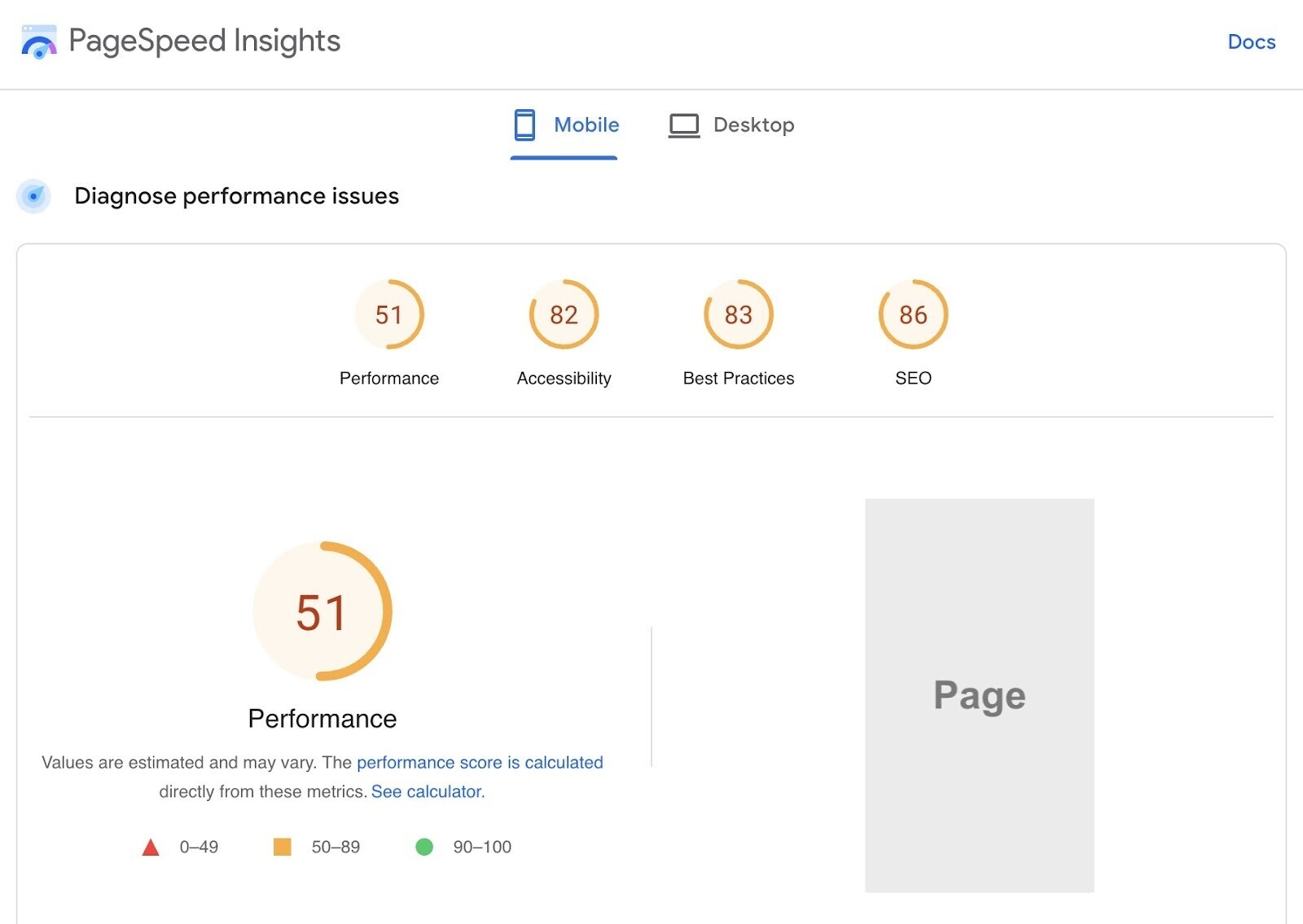

PageSpeed Insights provides details and opportunities to improve your page in four main areas:

- Performance

- Accessibility

- Best Practices

- SEO

But PageSpeed Insights can only analyze one URL at a time. To get the sitewide view, use Semrush's Site Audit.

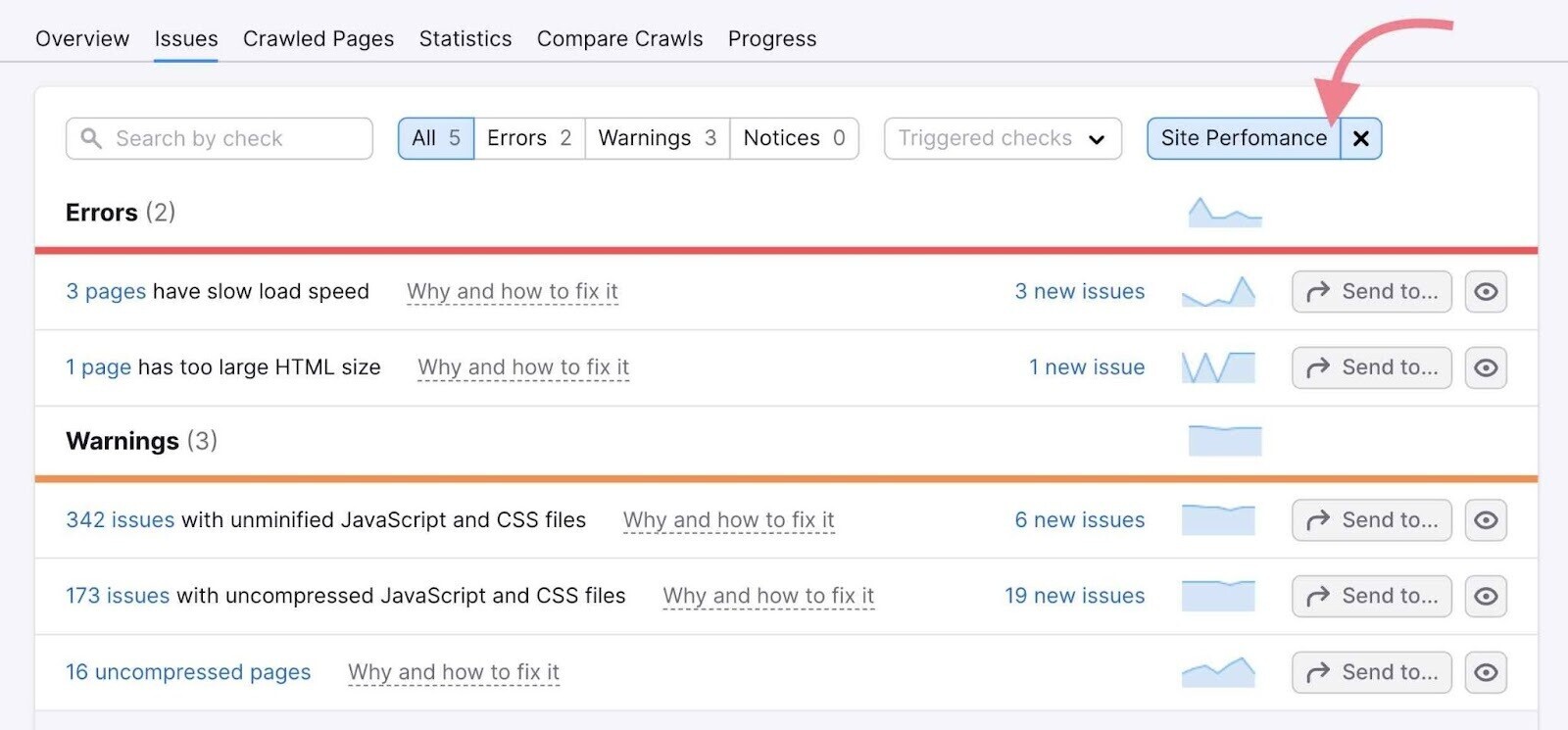

Head to the “Issues” tab and select the “Site Performance” category.

Here, you can see all the pages a specific issue affects—like slow load speed.

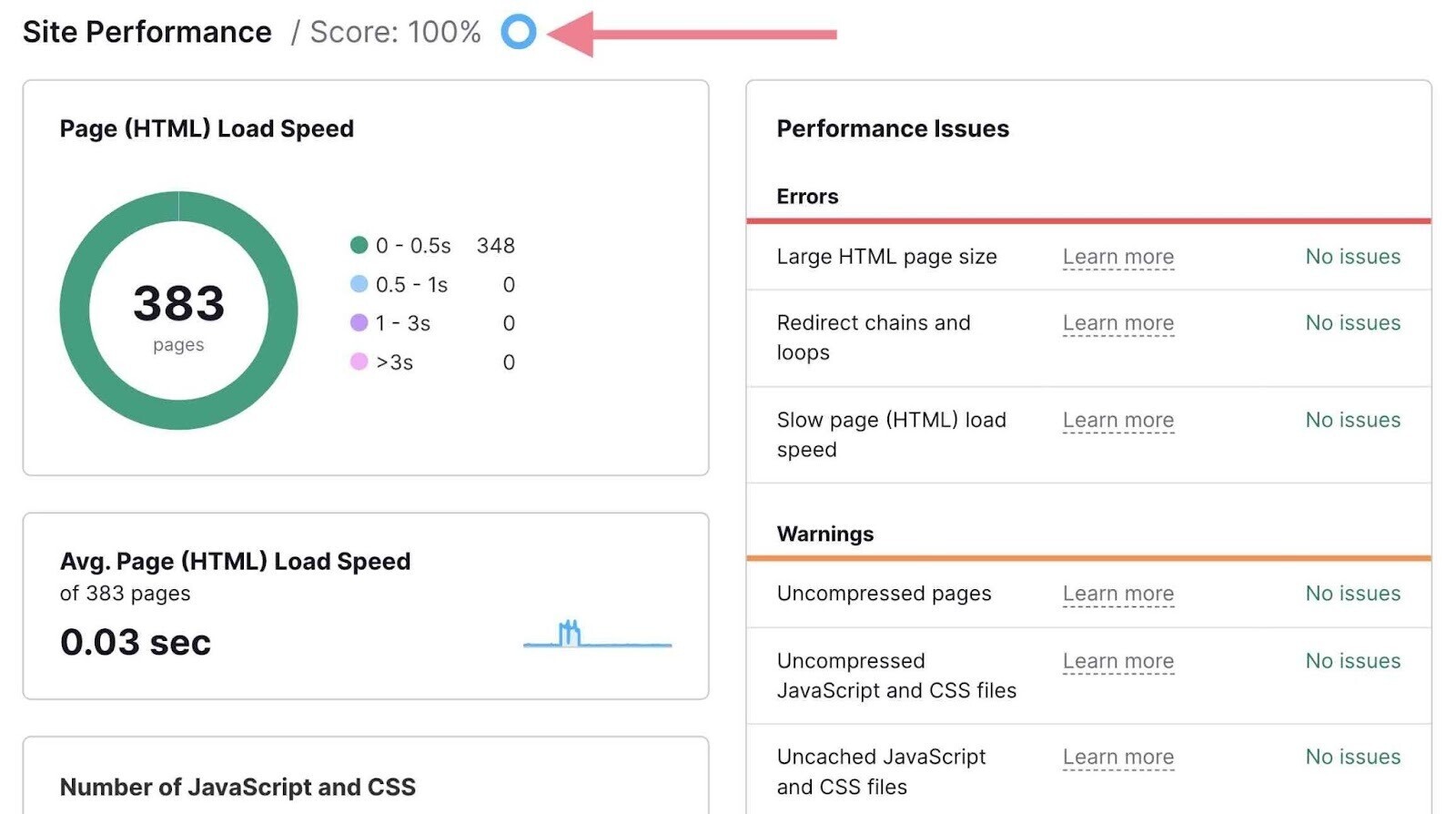

There are also two detailed reports dedicated to performance—the “Site Performance” report and the “Core Web Vitals” report.

Access both from the Site Audit Overview.

The “Site Performance” report provides an additional “Site Performance Score.” Or a breakdown of your pages by their load speed and other useful insights.

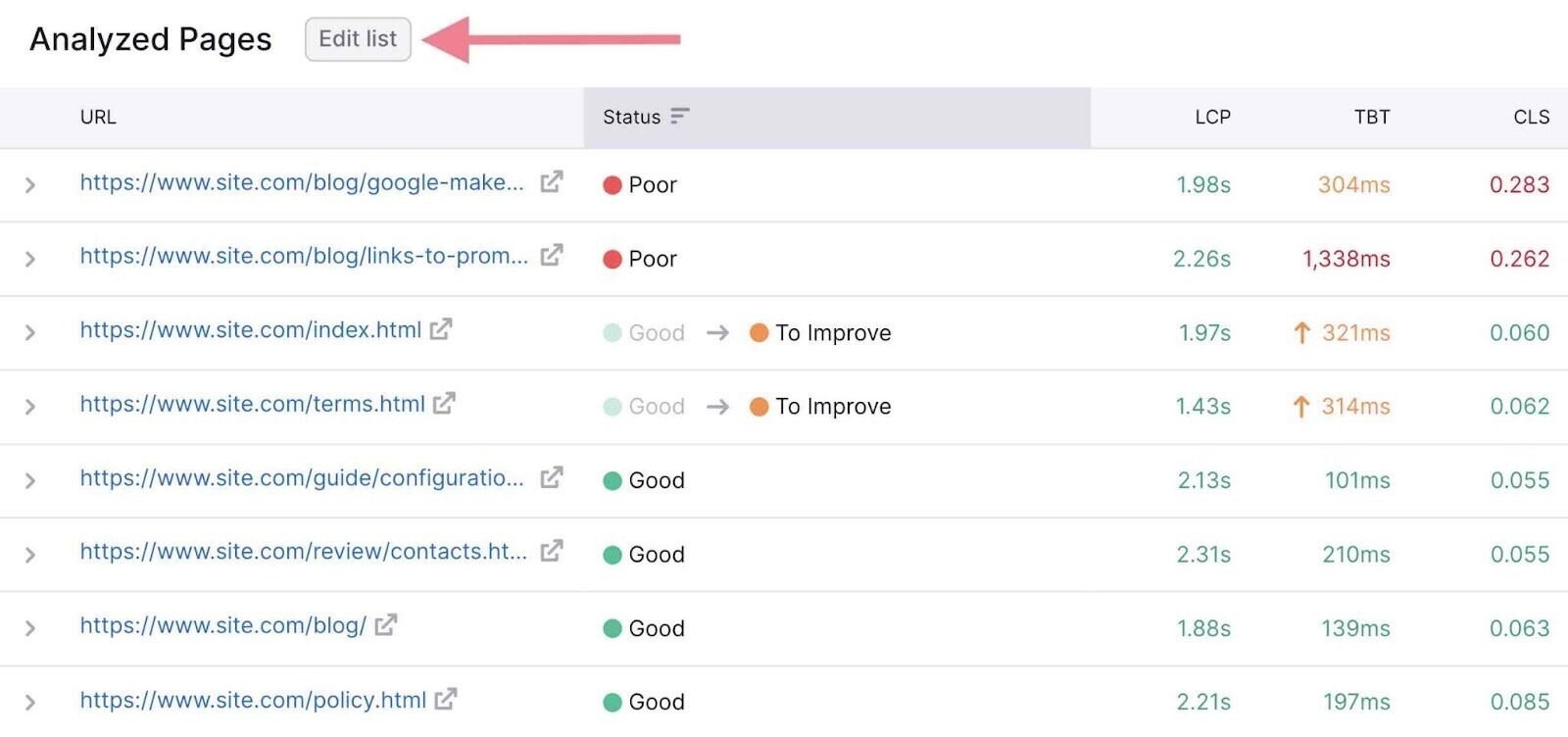

The Core Web Vitals report will break down your Core Web Vitals metrics based on 10 URLs. Track your performance over time with the “Historical Data” graph.

Or edit your list of analyzed pages so the report covers various types of pages on your site (e.g., a blog post, a landing page, and a product page).

Click “Edit list” in the “Analyzed Pages” section.

Further reading: Site performance is a broad topic and one of the most important aspects of technical SEO. To learn more about the topic, check out our page speed guide, as well as our detailed guide to Core Web Vitals.

6. Discover Mobile-Friendliness Issues

As of January 2024, more than half (60.08%) of web traffic happens on mobile devices.

And Google primarily indexes the mobile version of all websites over the desktop version. (Known as mobile-first indexing.)

So ensure your website works perfectly on mobile devices.

Use Google’s Mobile-Friendly Test to quickly check mobile usability for specific URLs.

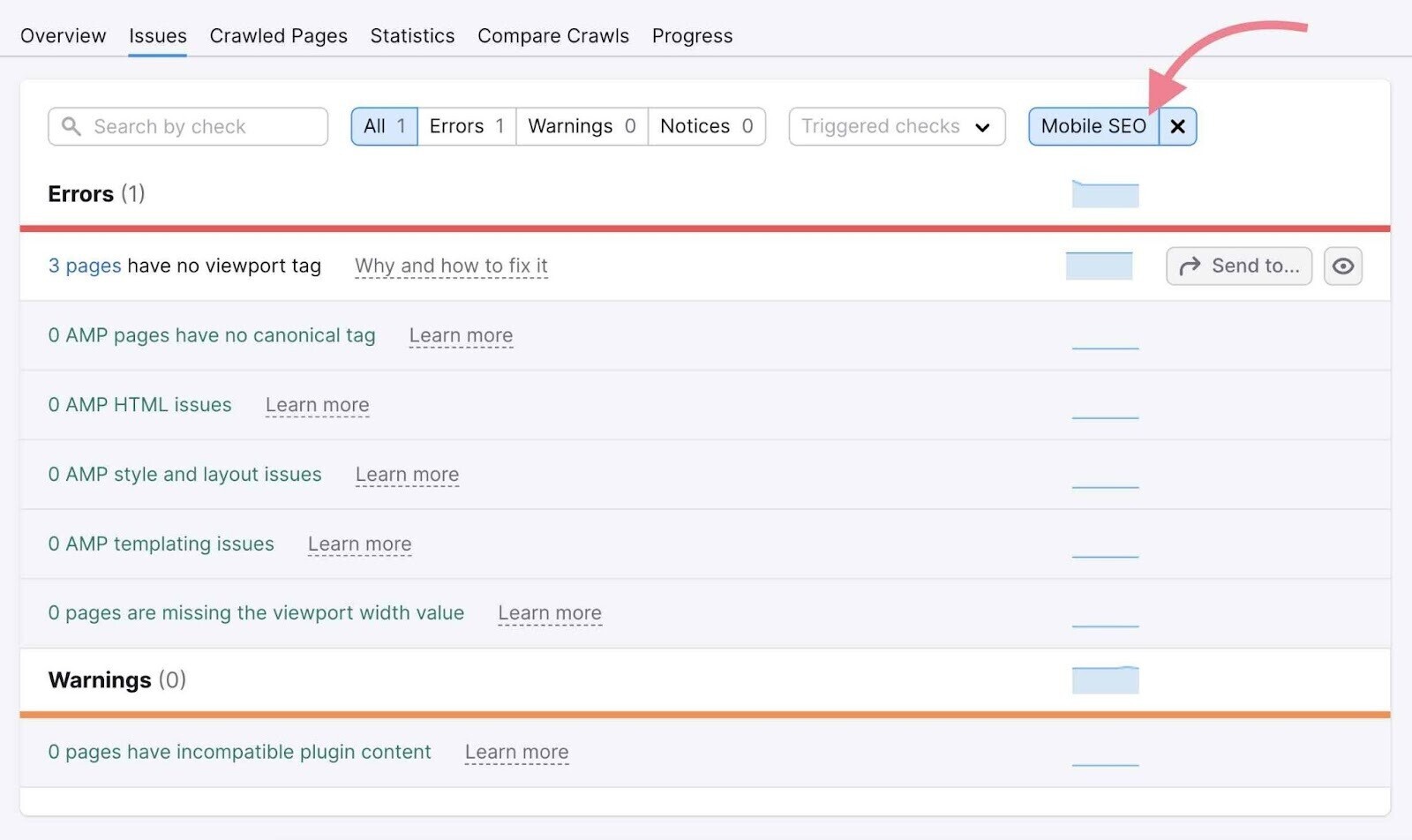

And use Semrush to check two important aspects of mobile SEO: viewport meta tag and AMPs.

Just select the “Mobile SEO” category in the “Issues” tab of the Site Audit tool.

A viewport meta tag is an HTML tag that helps you scale your page to different screen sizes. It automatically alters the page size based on the user’s device when you have a responsive design.

Another way to improve the site performance on mobile devices is to use Accelerated Mobile Pages (AMPs), which are stripped-down versions of your pages.

AMPs load quickly on mobile devices because Google runs them from its cache rather than sending requests to your server.

If you use AMPs, audit them regularly to make sure you’ve implemented them correctly to boost your mobile visibility.

Site Audit will test your AMPs for various issues divided into three categories:

- AMP HTML issues

- AMP style and layout issues

- AMP templating issues

7. Spot and Fix Code Issues

Regardless of what a webpage looks like to human eyes, search engines only see it as a bunch of code.

So, it’s important to use proper syntax. And relevant tags and attributes that help search engines understand your site.

During your technical SEO audit, monitor different parts of your website code and markup. Including HTML (which includes various tags and attributes), JavaScript, and structured data.

Let’s dig into these.

Meta Tag Issues

Meta tags are text snippets that provide search engine bots with additional data about a page’s content. These tags are present in your page’s header as a piece of HTML code.

We've already covered the robots meta tag (related to crawlability and indexability) and the viewport meta tag (related to mobile-friendliness).

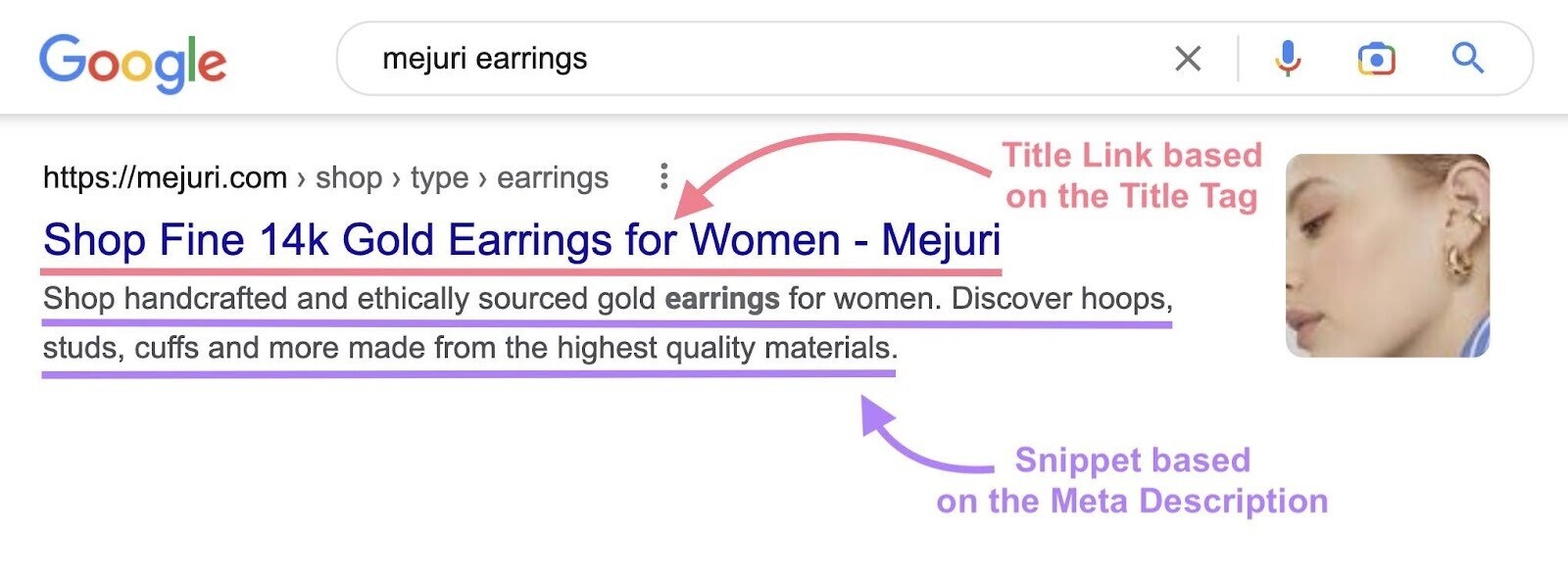

You should understand two other types of meta tags:

- Title tag: Indicates the title of a page. Search engines use title tags to form the clickable blue link in the search results. Read our guide to title tags to learn more.

- Meta description: A brief description of a page. Search engines use it to form the snippet of a page in the search results. Although not directly tied to Google’s ranking algorithm, a well-optimized meta description has other potential SEO benefits like improving click-through rates and making your search result stand out from competitors.

To see issues related to meta tags in your Site Audit report, select the “Meta tags” category in the “Issues” tab.

Here are some common meta tag issues you might find:

- Missing title tags: A page without a title tag may be seen as low quality by search engines. You're also missing an opportunity to tell users and search engines what your page is about.

- Duplicate title tags: When multiple pages have the same title, it's hard for search engines to determine which page is most relevant for a search query. This can hurt your rankings.

- Title tags that are too long: If your title exceeds 70 characters, it might get cut off in search results. This looks unappealing and might not convey your full message.

- Title tags that are too short: Titles with 10 characters or less don't provide enough information about your page. This limits your ability to rank for different keywords.

- Missing meta descriptions: Without a meta description, search engines might use random text from your page as the snippet in search results. This could be unappealing to users and reduce click-through rates.

- Duplicate meta descriptions: When multiple pages have the same meta description, you're missing chances to use relevant keywords and differentiate your pages. This can confuse both search engines and users.

- Pages with a meta refresh tag: This outdated technique can cause SEO and usability issues. Use proper redirects instead.

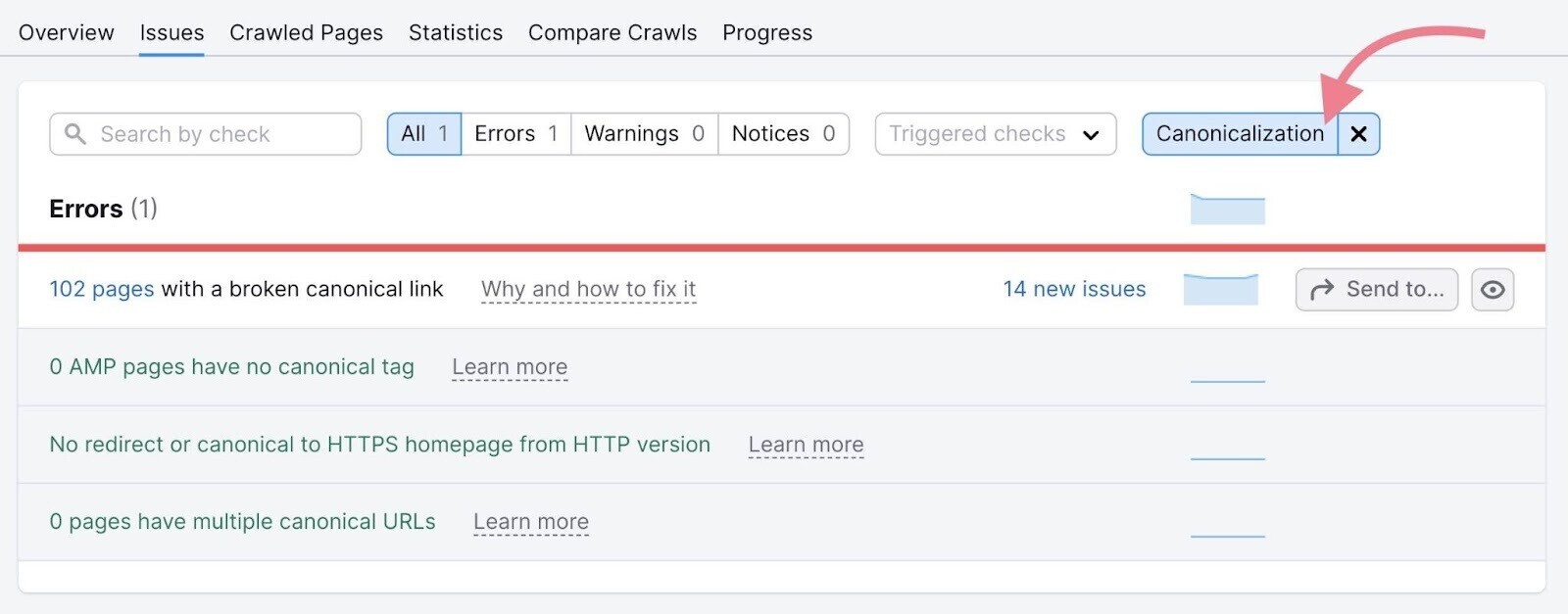

Canonical Tag Issues

Canonical tags are used to point out the “canonical” (or “main”) copy of a page. They tell search engines which page needs to be indexed in case there are multiple pages with duplicate or similar content.

A canonical URL tag is placed in the <head> section of a page's code and points to the “canonical” version.

It looks like this:

<link rel="canonical" href="https://www.domain.com/the-canonical-version-of-a-page/" />A common canonicalization issue is that a page has either no canonical tag or multiple canonical tags. Or, of course, a broken canonical tag.

The Site Audit tool can detect all of these issues. To only see the canonicalization issues, go to “Issues” and select the “Canonicalization” category in the top filter.

Common canonical tag issues include:

- AMPs with no canonical tag: If you have both AMP and non-AMP versions of a page, missing canonical tags can lead to duplicate content issues. This confuses search engines about which version to show in the results.

- No redirect or canonical to HTTPS homepage from HTTP version: When you have both HTTP and HTTPS versions of your homepage without proper direction, search engines struggle to know which one to prioritize. This can split your SEO efforts and hurt your rankings.

- Pages with a broken canonical link: If your canonical tag points to a non-existent page, you're wasting the crawl budget and confusing search engines.

- Pages with multiple canonical URLs: Having more than one canonical tag on a page gives conflicting directions. Search engines might ignore all of them or pick the wrong one, potentially hurting your SEO results.

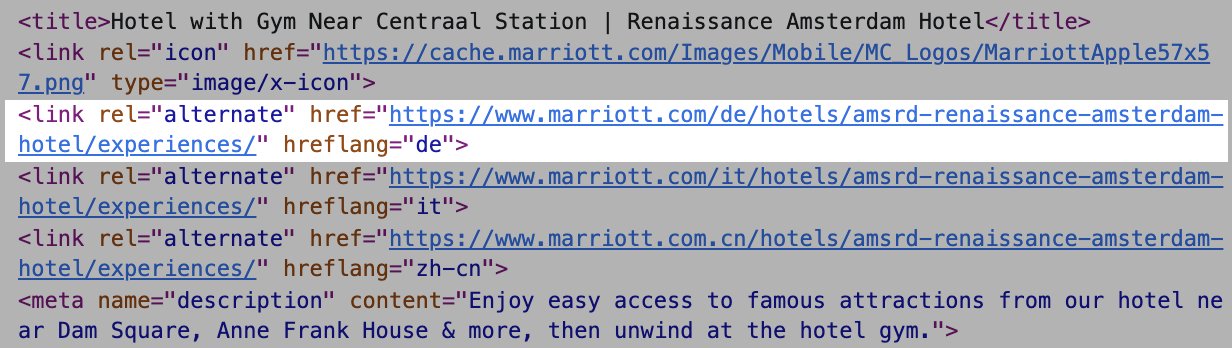

Hreflang Attribute Issues

The hreflang attribute denotes the target region and language of a page. It helps search engines serve the correct variation of a page based on the user’s location and language preferences.

If your site needs to reach audiences in more than one country, use hreflang attributes in <link> tags.

Like this:

To audit your hreflang annotations, go to the “International SEO” thematic report in Site Audit.

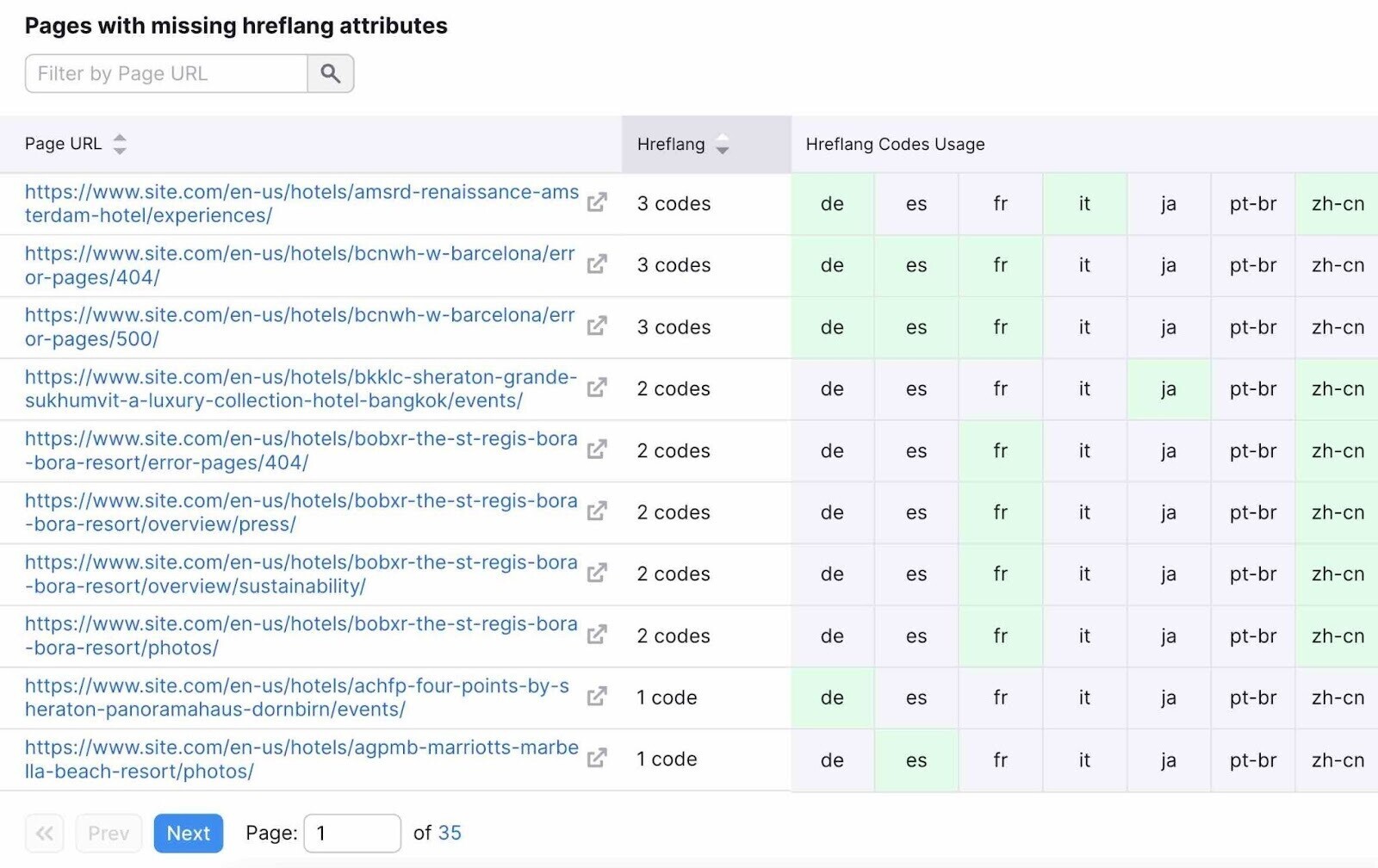

You’ll see a comprehensive overview of the hreflang issues on your site:

And a detailed list of pages with missing hreflang attributes on the total number of language versions your site has.

Common hreflang issues include:

- Pages with no hreflang and lang attributes: Without these, search engines can't determine the language of your content or which version to show users.

- Hreflang conflicts within page source code: Contradictory hreflang information confuses search engines. This can lead to the wrong language version appearing in search results.

- Issues with hreflang values: Incorrect country or language codes in your hreflang attributes prevent search engines from properly identifying the target audience for your content. This can lead to your pages being shown to the wrong users.

- Incorrect hreflang links: Broken or redirecting hreflang links make it difficult for search engines to understand your site's language structure. This can result in inefficient crawling and improper indexing of your multilingual content.

- Pages with hreflang language mismatch: When your hreflang tag doesn't match the actual language of the page, it's like false advertising. Users might land on pages they can't understand.

Fixing these issues helps ensure that your international audience sees the right content in search results. Which improves user experience and potentially boosts your global SEO ROI.

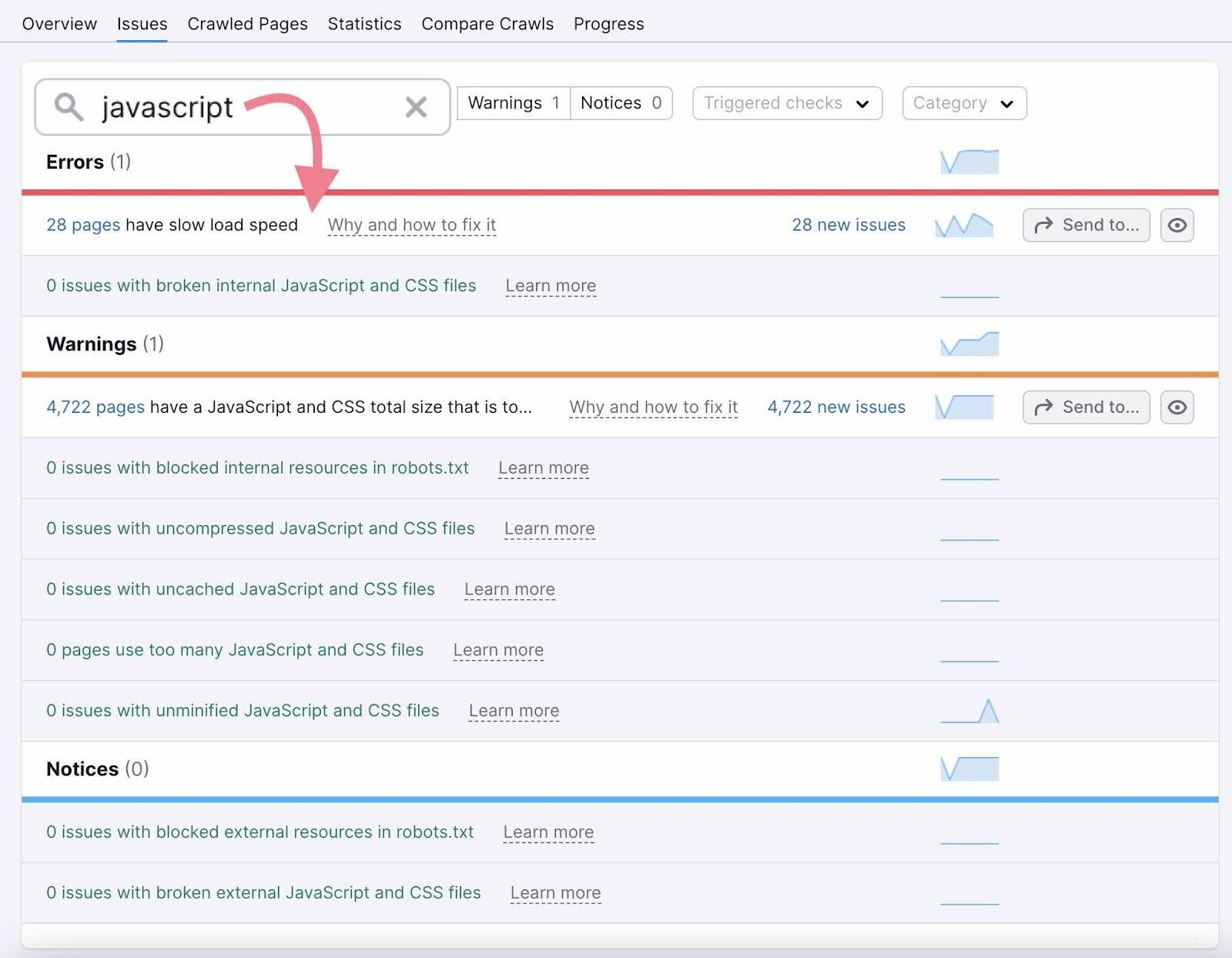

JavaScript Issues

JavaScript is a programming language used to create interactive elements on a page.

Search engines like Google use JavaScript files to render the page. If Google can’t get the files to render, it won’t index the page properly.

The Site Audit tool detects broken JavaScript files and flags the affected pages.

It can also show other JavaScript-related issues on your website. Including:

- Unminified JavaScript and CSS files: These files contain unnecessary code like comments and extra spaces. Minification removes this excess, reducing file size without changing functionality. Smaller files load faster.

- Uncompressed JavaScript and CSS files: Even after minification, these files can be compressed further. Compression reduces file size, making them quicker to download.

- Large total size of JavaScript and CSS: If your combined JS and CSS files exceed 2 MB after minification and compression, they can still slow down your page. This large size leads to poor UX and potentially lower search rankings.

- Uncached JavaScript and CSS files: Without caching, browsers must download these files every time a user visits your site. This increases load time and data usage for your visitors.

- Too many JavaScript and CSS files: Using more than 100 files increases the number of server requests, slowing down your page load time

- Broken external JavaScript and CSS files: When files hosted on other sites don't work, it can cause errors on your pages. This affects both user experience and search engine indexing.

Addressing these issues can improve your site's performance, user experience, and search engine visibility.

To check how Google renders a page that uses JavaScript, go to Google Search Console and use the “URL Inspection Tool.”

Enter your URL into the top search bar and hit enter.

Then, test the live version of the page by clicking “Test Live URL” in the top-right corner. The test may take a minute or two.

Now, you can see a screenshot of the page exactly how Google renders it. To check whether the search engine is reading the code correctly.

Just click the “View Tested Page” link and then the “Screenshot” tab.

Check for discrepancies and missing content to find out if anything is blocked, has an error, or times out.

Our JavaScript SEO guide can help you diagnose and fix JavaScript-specific problems.

Structured Data Issues

Structured data is data organized in a specific code format (markup) that provides search engines with additional information about your content.

One of the most popular shared collections of markup language among web developers is Schema.org.

Schema helps search engines index and categorize pages correctly. And help you capture SERP features (also known as rich results).

SERP features are special types of search results that stand out from the rest of the results due to their different formats. Examples include the following:

- Featured snippets

- Reviews

- FAQs

Use Google’s Rich Results Test tool to check whether your page is eligible for rich results.

Enter your URL to see all structured data items detected on your page.

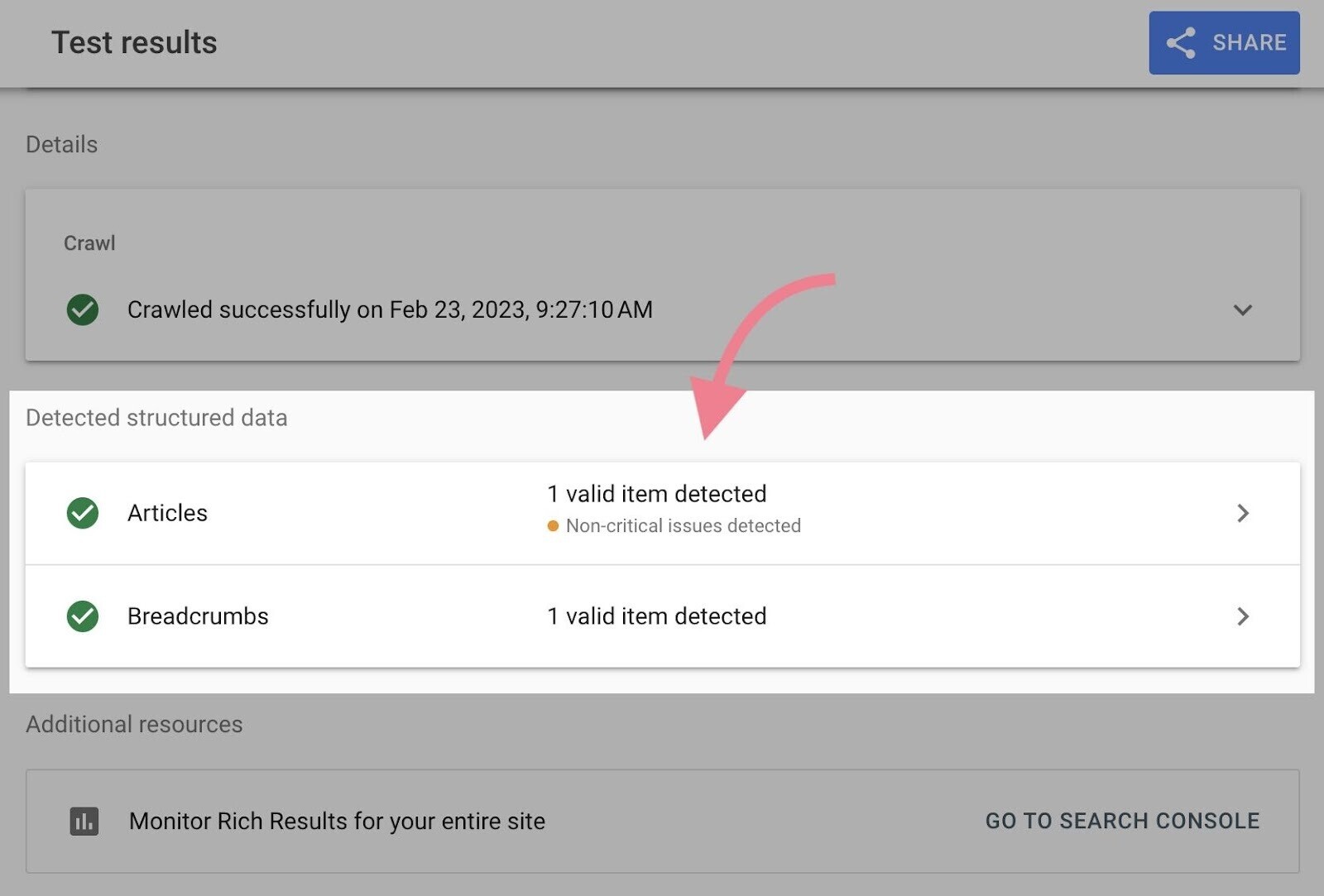

For example, this blog post uses “Articles” and “Breadcrumbs” structured data.

The tool will list any issues next to specific structured data items, along with links on how to address them.

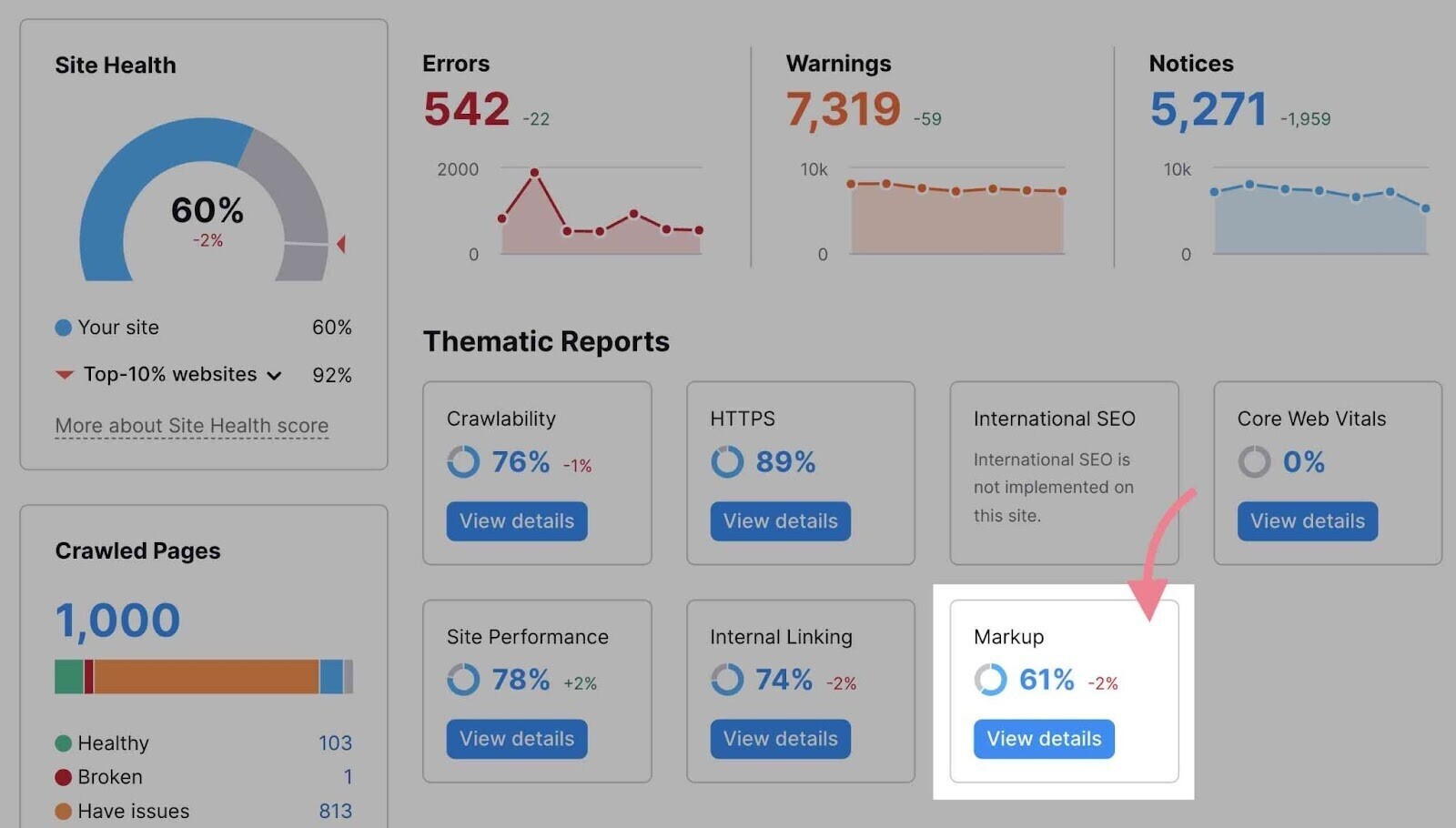

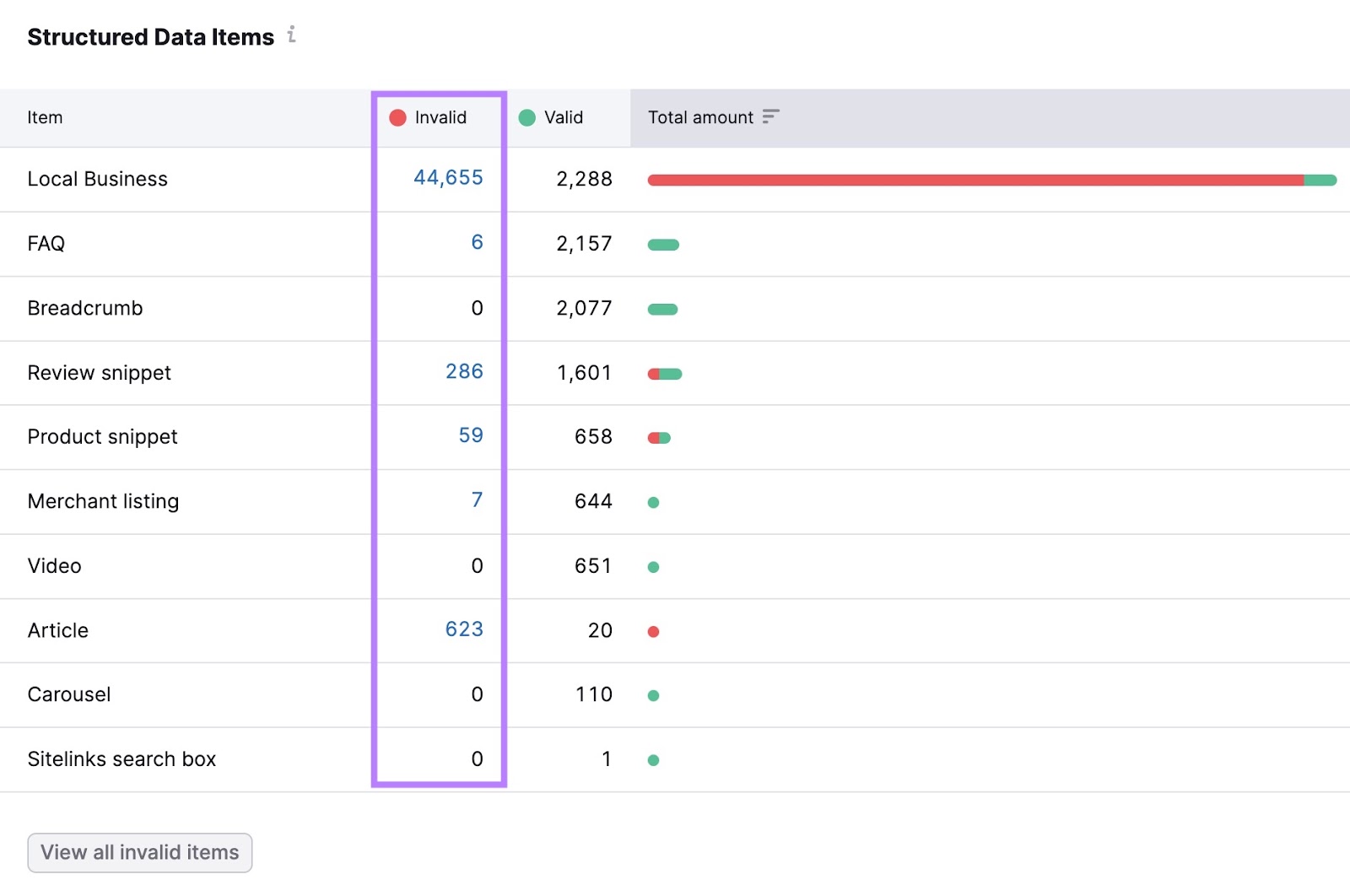

Or use the “Markup” thematic report in the Site Audit tool to identify structured data issues.

Just click “View details” in the “Markup” box in your audit overview.

The report will provide an overview of all the structured data types your site uses. And a list of any invalid items.

Invalid structured data occurs when your markup doesn't follow Google's guidelines. This can prevent your content from appearing in rich results.

Click on any item to see the pages affected.

Once you identify the pages with invalid structured data, use a validation tool like Google's Rich Results Test to fix any errors.

Further reading: Learn more about the “Markup” report and how to generate schema markup for your pages.

8. Check for and Fix HTTPS Issues

Your website should be using an HTTPS protocol (as opposed to HTTP, which is not encrypted).

This means your site runs on a secure server using an SSL certificate from a third-party vendor.

It confirms the site is legitimate and builds trust with users by showing a padlock next to the URL in the web browser:

HTTPS is a confirmed Google ranking signal.

Implementing HTTPS is not difficult. But it can bring about some issues. Here's how to address HTTPS issues during your technical SEO audit:

Open the “HTTPS” report in the Site Audit overview:

Here, you'll find a list of all issues connected to HTTPS. And advice on how to fix them.

Common issues include:

- Expired certificate: Your security certificate needs to be renewed

- Old security protocol version: Your website is running an old SSL or TLS (Transport Layer Security) protocol

- No server name indication: Lets you know if your server supports SNI (Server Name Indication). Which allows you to host multiple certificates at the same IP address to improve security

- Mixed content: Determines if your site contains any unsecure content, which can trigger a “not secure” warning in browsers

9. Find and Fix Problematic Status Codes

HTTP status codes indicate a website server’s response to the browser's request to load a page.

1XX statuses are informational. And 2XX statuses report a successful request. Don’t worry about these.

Let’s review the other three categories—3XX, 4XX, and 5XX statuses. And how to deal with them.

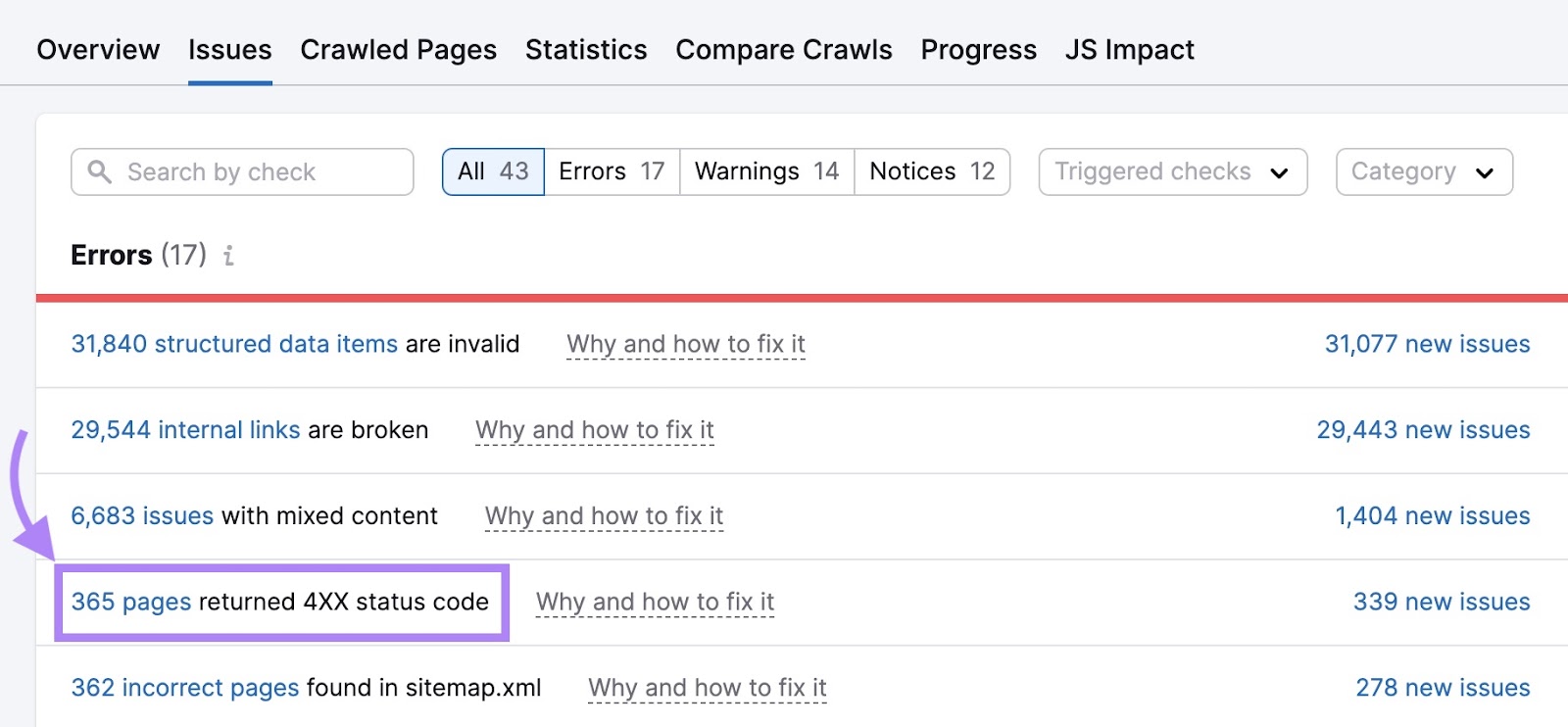

Open the “Issues” tab in Site Audit and select the “HTTP Status” category in the top filter.

To see all the HTTP status issues and warnings.

Click a specific issue to see the affected pages.

3XX Status Codes

3XX status codes indicate redirects—instances when users and search engine crawlers land on a page but are redirected to a new page.

Pages with 3XX status codes are not always problematic. However, you should always ensure they are used correctly to avoid any possible problems.

The Site Audit tool will detect all your redirects and flag any related issues.

The two most common redirect issues are as follows:

- Redirect chains: When multiple redirects exist between the original and final URL

- Redirect loops: When the original URL redirects to a second URL that redirects back to the original

Audit your redirects and follow the instructions provided within Site Audit to fix any errors.

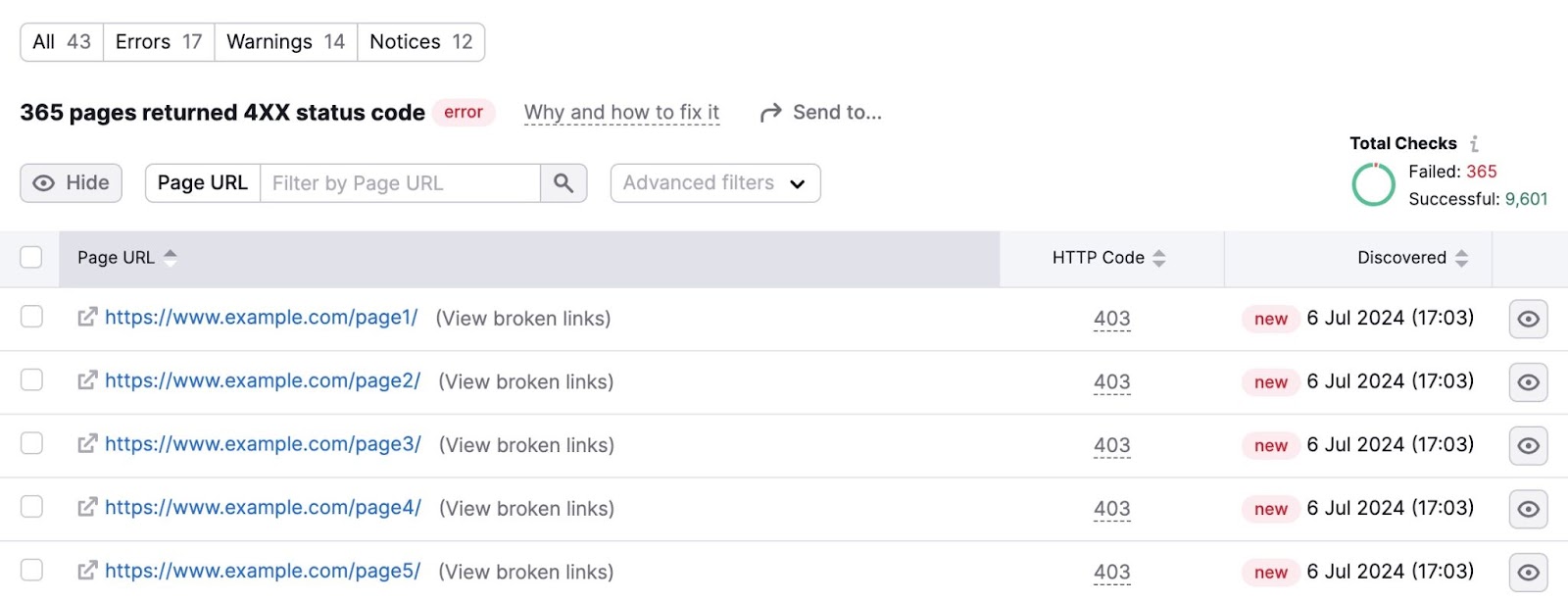

4XX Status Codes

4XX errors indicate that a requested page can’t be accessed. The most common 4XX error is the 404 error: Page not found.

If Site Audit finds pages with a 4XX status, remove all the internal links pointing to those pages.

First, open the specific issue by clicking on the corresponding number of pages with errors.

You'll see a list of all affected URLs.

Click “View broken links” in each line to see internal links that point to the 4XX pages listed in the report.

Remove the internal links pointing to the 4XX pages. Or replace the links with relevant alternatives.

5XX Status Codes

5XX errors are on the server side. They indicate that the server could not perform the request. These errors can happen for many reasons.

Such as:

- The server being temporarily down or unavailable

- Incorrect server configuration

- Server overload

Investigate why these errors occurred and fix them if possible. Check your server logs, review recent changes to your server configuration, and monitor your server's performance metrics.

10. Perform Log File Analysis

Your website’s log file records information about every user and bot that visits your site.

Log file analysis helps you look at your website from a web crawler's point of view. To understand what happens when a search engine crawls your site.

It’s impractical to analyze the log file manually. Instead, use Semrush’s Log File Analyzer.

You’ll need a copy of your access log file to begin your analysis. Access it on your server’s file manager in the control panel or via an FTP (FileTransfer Protocol) client.

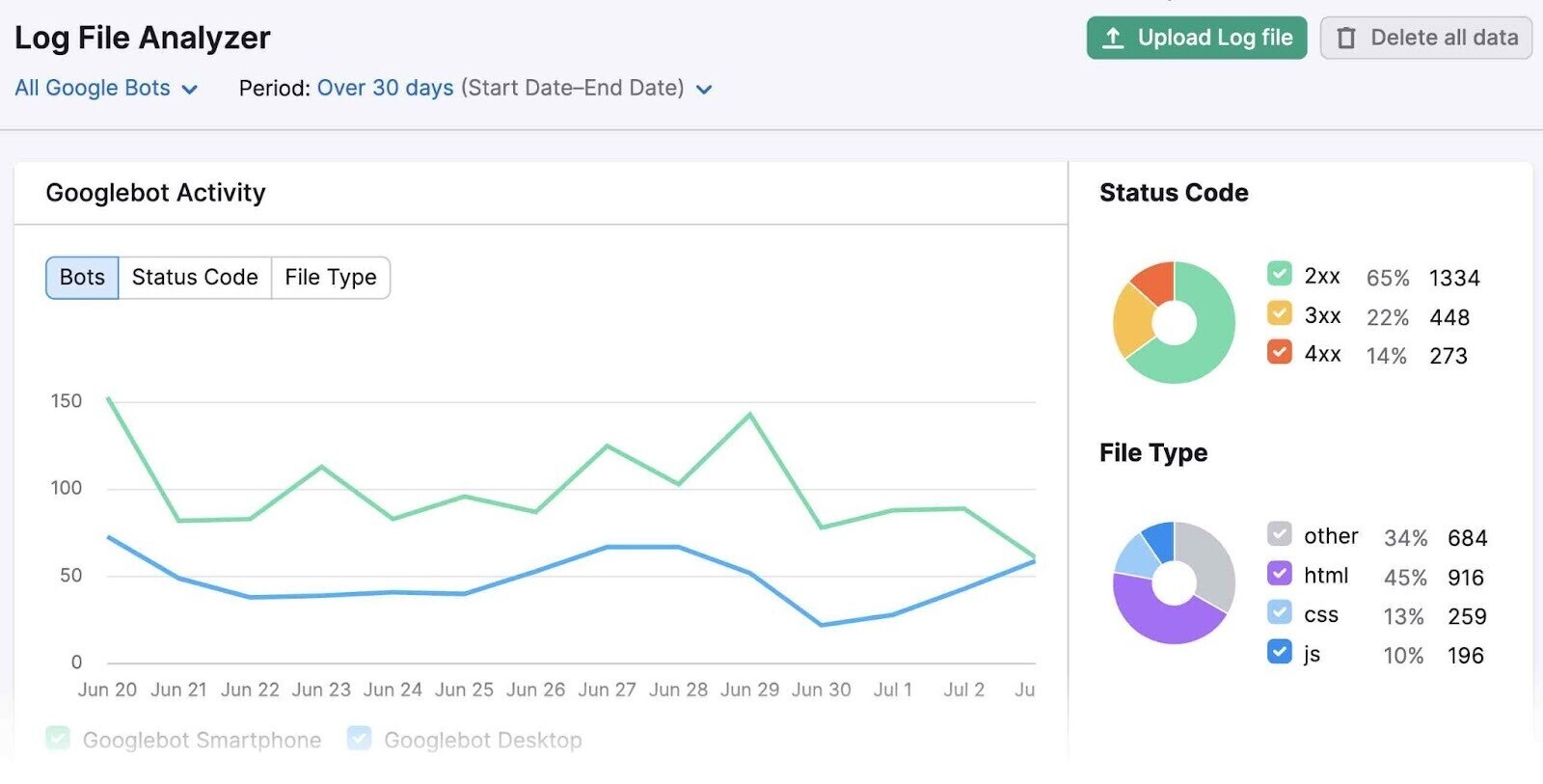

Then, upload the file to the tool and start the analysis. The tool will analyze Googlebot activity on your site and provide a report. That looks like this:

It can help you answer several questions about your website, including:

- Are errors preventing my website from being crawled fully?

- Which pages are crawled the most?

- Which pages are not being crawled?

- Do structural issues affect the accessibility of some pages?

- How efficiently is my crawl budget being spent?

These answers fuel your SEO strategy and help you resolve issues with the indexing or crawling of your webpages.

For example, if Log File Analyzer identifies errors that prevent Googlebot from fully crawling your website, you or a developer can work to resolve them.

To learn more about the tool, read our Log File Analyzer guide.

Boost Your Website’s Rankings with a Technical SEO Audit

A thorough technical SEO audit can positively affect your website's organic search ranking.

Now you know how to conduct a technical SEO audit, all you have to do is get started.

Use our Site Audit tool to identify and fix issues. And watch your performance improve over time.

This post was updated in 2024. Excerpts from the original article by A.J. Ghergich may remain.